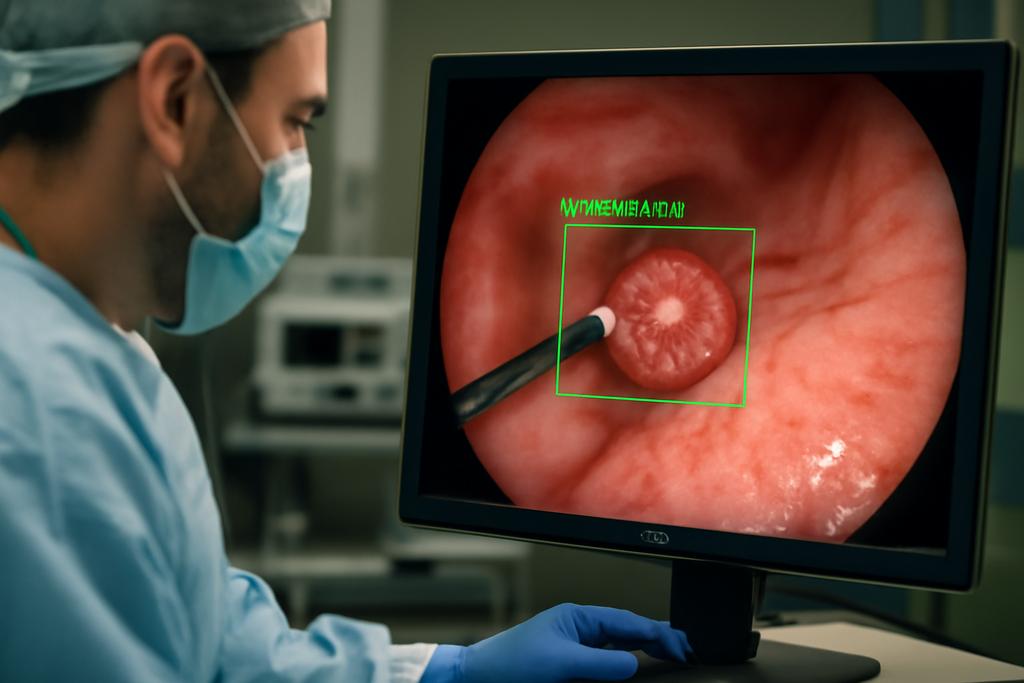

In the quiet, gnawing world of stomach cancer, catching a tumor early can be the difference between a slim chance of survival and a good one. Gastric cancer remains a major global killer, and its early, subtle signs are easy to miss. A new study from a consortium led by the University of Macau proposes a bold upgrade to how we detect this disease: a tightly integrated system that pairs artificial intelligence with a hardware device to spot gastric cancer at its earliest stages, as the images come in. The core idea is not a single breakthrough but a careful marriage of software and hardware that can run in real time, with a level of accuracy that starts to resemble a clinical second opinion, catching what doctors might otherwise miss.

The work comes out of the University of Macau, with Xian-Xian Liu and Simon Fong as central figures, supported by partners at The Chinese University of Hong Kong, UCLA, and several laboratories across China. The paper presents a new algorithm called One Class Twin Cross Learning, or OCT-X, which sits inside an all-in-one point-of-care testing (POCT) device. The promise is not only higher accuracy but faster processing, real-time lesion surveillance, and a more streamlined path from data collection to decision in a real clinical setting. If you’ve ever watched a high-stakes medical imaging workflow in a movie, OCT-X aims to bring that precision into a compact, real-world toolbox you could imagine in a hospital hallway or a remote clinic.

And yet this isn’t just clever code. It’s a genuinely integrated system. The software sits on a hardware backbone built around a National Instruments CompactDAQ platform and LabVIEW software, with wireless connectivity and a pathway to 5G networks. The end result is not a single button-push miracle but a chain of components that can sense, reason, and report in near real time. The paper argues that this integration tightens the loop between data capture, analysis, and clinical action, a critical improvement when clinicians must decide quickly whether a tissue biopsy is warranted or whether a patient can be spared an invasive procedure.

The OCT-X idea and how it works

The central idea behind OCT-X is deceptively simple in its aim: teach a computer to recognize the signs of early gastric cancer in endoscopic images, even when labeled data are sparse and imbalanced. That’s a mouthful, but it matters: early gastric cancer (EGC) is notoriously hard to spot, and most available datasets contain far more negatives than positives. OCT-X tackles this problem head-on with what the authors call One Class Twin Cross Learning. In short, the system learns from a single, mostly normal class and uses two parallel learning tracks to separate potential positives (early cancers) from background noise and benign lesions.
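To make the one-class idea concrete, here is a minimal sketch of the general technique, not the authors' twin cross learning. It assumes each image has already been reduced to a small feature vector, fits a simple statistical model to "normal" examples only, and scores new examples by how far they fall from that baseline (a Mahalanobis distance). The function names, the synthetic features, and the choice of distance are all illustrative assumptions.

```python
import numpy as np

def fit_normal_model(normal_features):
    """Estimate the mean and inverse covariance from normal-only feature vectors."""
    mean = normal_features.mean(axis=0)
    cov = np.cov(normal_features, rowvar=False)
    cov += 1e-6 * np.eye(cov.shape[0])  # small ridge keeps the matrix invertible
    return mean, np.linalg.inv(cov)

def anomaly_score(x, mean, inv_cov):
    """Mahalanobis distance from the normal class; larger means more unusual."""
    diff = x - mean
    return float(np.sqrt(diff @ inv_cov @ diff))

# Synthetic stand-in for features of normal tissue (hypothetical data).
rng = np.random.default_rng(0)
normal = rng.normal(loc=0.0, scale=1.0, size=(500, 4))
mean, inv_cov = fit_normal_model(normal)

typical = np.zeros(4)        # looks like the training data
outlier = np.full(4, 6.0)    # far from anything seen in training

typical_score = anomaly_score(typical, mean, inv_cov)
outlier_score = anomaly_score(outlier, mean, inv_cov)
print(typical_score < outlier_score)
```

The point of the sketch is that no cancer examples are needed to train the model; a lesion is flagged only because it does not look like the baseline.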

To make the learning robust, OCT-X uses a patch-based approach. The endoscopic image is broken into many small patches, each analyzed independently by a patch-level deep fully convolutional network. The idea is to treat each patch as a tiny signal detector, then fuse the results to decide whether the whole image contains a lesion. The patch-level network is designed to be sensitive to the textures and patterns an expert might notice—think of it as a grid of tiny, highly focused detectors working in concert rather than a single, global scanner.
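The patch-then-fuse pattern can be sketched in a few lines. This is an illustration under stated assumptions, not the paper's network: the patch scorer here is a placeholder (mean brightness) standing in for a trained fully convolutional model, and the fusion rule (averaging the top-scoring patches) is one plausible choice among many. All names are hypothetical.

```python
import numpy as np

def split_into_patches(image, patch_size):
    """Cut a 2-D image into non-overlapping square patches (leftover edges are dropped)."""
    height, width = image.shape
    patches = []
    for top in range(0, height - patch_size + 1, patch_size):
        for left in range(0, width - patch_size + 1, patch_size):
            patches.append(image[top:top + patch_size, left:left + patch_size])
    return patches

def score_patch(patch):
    """Placeholder scorer: mean intensity stands in for a trained network's output."""
    return float(patch.mean())

def fuse_scores(scores, top_k=3):
    """Image-level score: the mean of the top_k highest patch scores."""
    ordered = sorted(scores, reverse=True)
    k = min(top_k, len(ordered))
    return sum(ordered[:k]) / k

# Toy image: one bright 16x16 region stands in for a suspicious area.
image = np.zeros((64, 64))
image[16:32, 32:48] = 1.0

patches = split_into_patches(image, patch_size=16)
scores = [score_patch(p) for p in patches]
image_score = fuse_scores(scores, top_k=1)
print(len(patches), image_score)
```

Averaging only the highest-scoring patches reflects the intuition in the text: a small lesion should not be drowned out by the many healthy patches around it.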

At the heart of the method is a fast double-threshold grid search (FDT-GS) that helps separate likely lesion patches from noise. This preprocessing step creates two streams of data: patches that look like potential positives and patches that look like negatives (or noise). These patches feed two sub-networks that learn in positive-only and negative-only modes, then a fusion mechanism glues their outputs into a final decision. In practice, the network computes an anomaly score and a confidence level for each region, so the model doesn’t just say “cancer” or “not cancer” but also signals how sure it is about its call. The researchers describe a four-sub-network arrangement that echoes a chorus singing in harmony: four instances of a ResNet-50-based DeepLab model operate in parallel, each focusing on slightly different views of the data, and their results are fused to yield a more reliable verdict.
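A double-threshold split with a grid search over threshold pairs might look something like the sketch below. The article does not spell out the FDT-GS objective or how it is made fast, so everything here is an assumption made for illustration: the exhaustive search, the small labelled validation set, the candidate grid, and the scoring rule that rewards correctly routed patches and penalises wrongly routed ones.

```python
from itertools import product

def split_by_thresholds(scores, low, high):
    """Route each patch index to the negative, ambiguous, or positive stream."""
    negative = [i for i, s in enumerate(scores) if s <= low]
    ambiguous = [i for i, s in enumerate(scores) if low < s < high]
    positive = [i for i, s in enumerate(scores) if s >= high]
    return negative, ambiguous, positive

def grid_search_thresholds(scores, labels, candidates):
    """Try every (low, high) pair with low < high and keep the best-scoring one.

    Assumed objective: +1 per correctly routed patch, -2 per wrongly routed patch.
    Patches left in the ambiguous band cost nothing.
    """
    best_pair, best_value = None, float("-inf")
    for low, high in product(candidates, repeat=2):
        if low >= high:
            continue
        negative, _, positive = split_by_thresholds(scores, low, high)
        correct = sum(labels[i] == 0 for i in negative) + sum(labels[i] == 1 for i in positive)
        wrong = sum(labels[i] == 1 for i in negative) + sum(labels[i] == 0 for i in positive)
        value = correct - 2 * wrong
        if value > best_value:
            best_pair, best_value = (low, high), value
    return best_pair, best_value

# Hypothetical patch scores with validation labels (1 = lesion, 0 = not).
scores = [0.05, 0.10, 0.30, 0.45, 0.55, 0.80, 0.90]
labels = [0, 0, 0, 1, 0, 1, 1]
candidates = [0.2, 0.4, 0.6, 0.8]

(low, high), value = grid_search_thresholds(scores, labels, candidates)
negative, ambiguous, positive = split_by_thresholds(scores, low, high)
print((low, high), negative, ambiguous, positive)
```

The two clean streams would then feed the positive-only and negative-only learners, while the ambiguous middle band is held back rather than forced into either camp.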

On the hardware side, OCT-X isn’t a purely digital dream. It rides on an all-in-one POCT device that houses high-resolution imaging sensors, real-time data processing, and wireless transmission. The NI CompactDAQ platform and LabVIEW software provide the real-time, multi-rate processing that makes this system adaptable to the noisy, real-world conditions of a clinical workflow. The team even sketches a vision of remote capsule endoscopy where a capsule could beam performance data back to clinicians in real time, aided by 5G networks and robust data modulation schemes. It’s not science fiction so much as a blueprint for when software meets hardware in the hospital corridor, enabling faster, more confident decisions about next steps for patients.

One of the study’s most compelling technical moves is its embrace of multirate learning. Real-world data don’t arrive at the same pace or in the same format, and a robust diagnostic tool needs to bend, not break, under those conditions. Multirate learning, implemented via LabVIEW’s parallel algorithms and the NI hardware, allows the OCT-X system to adjust to varying data rates without losing accuracy. In other words, the system is designed to stay calm and accurate whether it’s analyzing a fast stream of high-quality frames or a slower, noisier batch of images. The result is not just a single accuracy number but a capability: steady performance across speeds and data conditions that mimic actual clinical environments.
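The paper attributes its multirate handling to LabVIEW and NI hardware, so the following Python sketch does not reproduce that implementation. It only illustrates one simple way a pipeline can absorb different input rates: a fixed-rate processing clock that, at each tick, picks the most recent frame that has arrived (a zero-order hold). The function name and the frame rates are hypothetical.

```python
def resample_to_rate(timestamps, target_hz, duration_s):
    """For each tick of a fixed-rate clock, return the index of the latest frame
    that had arrived by that time, or None if no frame has arrived yet."""
    picks = []
    latest = None
    next_frame = 0
    n_ticks = int(duration_s * target_hz)
    for tick in range(n_ticks):
        t = tick / target_hz
        # Advance past every frame whose timestamp is at or before this tick.
        while next_frame < len(timestamps) and timestamps[next_frame] <= t:
            latest = next_frame
            next_frame += 1
        picks.append(latest)
    return picks

# Two hypothetical sources covering one second: a fast one and a slow one.
fast = [i / 30 for i in range(30)]  # 30 frames per second
slow = [i / 5 for i in range(5)]    # 5 frames per second

fast_picks = resample_to_rate(fast, target_hz=10, duration_s=1.0)
slow_picks = resample_to_rate(slow, target_hz=10, duration_s=1.0)
print(fast_picks)
print(slow_picks)
```

Either source ends up feeding the downstream model at the same steady pace: the fast stream is thinned out, and the slow stream reuses its latest frame until a new one arrives.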

Why this could change cancer screening

The headline metric is striking: OCT-X reports a diagnostic accuracy of 99.70 percent for detecting early gastric cancers, outpacing several state-of-the-art one-class methods and showing notable gains in multirate adaptability. That combination—near-perfect accuracy plus reliability across data rates—matters, because it speaks to a system that could actually fit into daily clinical practice rather than sit on a lab shelf. The paper emphasizes that this isn’t just about a better computer vision model; it’s about an end-to-end pipeline in which data are collected, processed, and acted upon in real time, with minimal procedural friction for patients and clinicians alike.

The authors also highlight how OCT-X addresses some stubborn obstacles in gastric cancer detection. First, the one-class learning framework is well suited to imbalanced datasets, which are common in rare but critical medical conditions. Instead of requiring large numbers of examples of every kind of lesion, OCT-X learns from the target class and uses its internal cross-learning mechanism to recognize deviations from a normal baseline. This design reduces the need for enormous labeled cancer datasets, a practical advantage when every patient’s data are precious and diverse. Second, the patch-based approach creates a mosaic of local judgments that, when fused, captures the subtle textures and micro-patterns that define early disease. It’s a bit like diagnosing a painting by studying many small brushstrokes rather than judging the whole canvas from a single glance.

And then there’s the hardware side of the coin. The integrated POCT device—an all-in-one sensor suite with real-time processing, wireless connectivity, and potential 5G-backed remote monitoring—could democratize access to high-quality diagnostics. In remote or under-resourced settings, a portable system that can stream images and results to specialists elsewhere may shorten the time to diagnosis and reduce the number of invasive biopsies required. The authors repeatedly point to the clinical impact: faster, more accurate triage, fewer unnecessary procedures, and a smoother path from detection to treatment planning. That isn’t just a tech boast; it’s a path toward more equitable, patient-centered care.

Beyond the numbers, the OCT-X framework hints at a strategic shift in how AI systems for medicine are built. Instead of chasing ever-larger data hordes, this work leans on intelligent data curation (the FDT-GS preprocessor), robust patch-level reasoning, and a principled way to fuse multiple, small, local judgments into a trustworthy global call. It’s a reminder that in medicine, speed is valuable only if accuracy stays high and interpretability remains within reach for clinicians who must trust the system enough to act on its recommendations.

What lies ahead and what to watch for

Every new diagnostic system must travel from the lab to the clinic, and OCT-X faces the usual but nontrivial journey: broader validation, regulatory scrutiny, and real-world integration. The study reports results on endoscopic images and clinical data donated by Foshan First People’s Hospital and China’s National Institutes, with ethics approvals in place. While those results are striking, they come from a specific set of datasets collected in particular clinical contexts. Generalizing to other populations, imaging devices, endoscopy protocols, and disease subtypes will require additional multicenter trials and open collaboration across hospitals and research networks.

Another question is generalization: could the same OCT-X framework extend to other cancers or other imaging modalities? The authors’ approach—one-class twin cross learning, patch-level analysis, and fast threshold-driven data curation—appears adaptable in principle. If researchers can replicate the performance in diverse settings, we might be looking at a family of tools that brings high-precision, real-time diagnostics to a wide range of diseases, not just gastric cancer. The hardware side—an adaptable, portable, multiplatform data pipeline—offers a blueprint for how clinics could adopt such tools without a total rebuild of their workflows.

There are caveats, of course. The paper notes that the accompanying dataset is not publicly available, which is common in early clinical AI work but limits independent reproduction of the results. The open question is how OCT-X will perform with truly external data: images from different scopes, different populations, and different care pathways. Real-world deployment will also involve user training, integration with electronic health records, regulatory approvals, and ongoing monitoring for drift or bias as datasets evolve. The authors themselves point to continued development, broader datasets, and the incorporation of additional AI techniques to further shrink false positives and improve robustness. It is an honest reminder that even a promising system is a work in progress, not a finished product.

On the bright side, the paper’s publication also highlights a practical path toward deployment: the combination of a patented AI-based diagnostic system with a real hardware platform, plus an accessible code repository for reproducibility. The authors report that the full OCT-X framework has been patented and that code is publicly available on GitHub, offering researchers and developers a concrete starting point for replication, extension, and perhaps, in time, commercialization. That blend of science, engineering, and accessibility is exactly the kind of ecosystem that can accelerate translation from bench to bedside, rather than leaving breakthroughs trapped in journals and conference slides.

In the end, what makes OCT-X compelling isn’t just the top-line numbers, but the narrative it embodies: a modern diagnostic pipeline that respects the messy realities of real clinical data, uses local “patchwork” reasoning to build a global verdict, and sits inside a hardware-software duet designed for real-time, patient-centered care. It’s a vivid illustration of how AI can be embedded in the everyday tools of medicine, not as a distant oracle but as a partner that helps clinicians move faster, act more confidently, and, hopefully, catch cancer in time to change the story for countless patients.

As the authors point out, this is a collaborative achievement from a network of universities and hospitals, with leadership anchored in the University of Macau and a cadre of co-authors across institutions. It’s a reminder that when engineering ambition meets clinical need, the result can be both technically elegant and practically meaningful. The road ahead will demand broader validation, careful integration into clinical workflows, and continued attention to equity and accessibility. If the next few years deliver more real-world validation and wider adoption, OCT-X could become a staple in the toolbox for early cancer detection—an instrument that helps reveal the hidden early signs of disease with the quiet confidence of a trusted partner in the room.

Author credits and contextual note: The study is led by researchers at the University of Macau, with Xian-Xian Liu and Simon Fong among the principal contributors. The work also involves collaborators from The Chinese University of Hong Kong, UCLA, and other Chinese institutions, reflecting a broad, international effort to bring AI-enabled diagnostics closer to everyday clinical practice. The paper emphasizes the hardware-software integration, foregrounding the NI CompactDAQ and LabVIEW ecosystem as a crucial enabler of real-time, multirate processing in a portable diagnostic device. The project is accompanied by a patent and an open GitHub repository for reproducibility, signaling an eye toward both scientific advancement and practical deployment.