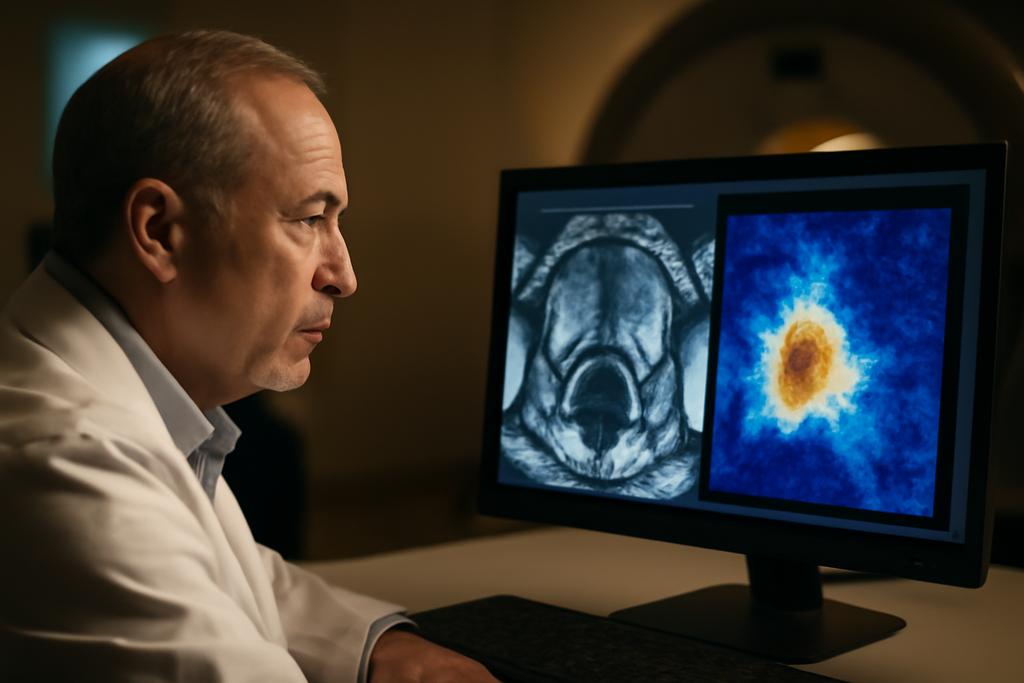

In MRI suites around the world, radiologists parse intricate textures and shapes, hunting for the telltale signs of clinically significant prostate cancer. It’s a careful craft, a blend of pattern recognition and medical intuition, and it can be slow, especially when clinicians must comb through thousands of slices to segment the exact tumor boundaries. A new study from researchers at the University of Amsterdam, the Netherlands Cancer Institute, and Maastricht University (with lead author Alessia Hu) aims to ease that burden. They’ve built an anomaly-driven twist on a familiar AI workhorse: a system that not only looks for cancer but also learns what healthy tissue should look like, then uses deviations from that healthy baseline to guide its search. The result is a more focused, potentially more generalizable way to identify clinically significant prostate cancer on biparametric MRI (bpMRI) scans.

The paper, whose authors include Regina Beets-Tan, Lishan Cai, and Eduardo Pooch, centers on an idea that sounds almost intuitive once you hear it: teach the machine what normal tissue looks like, then let it spotlight what doesn’t fit. In practice, the team trains a model to reconstruct a healthy-looking prostate from the input image. The difference between the real MRI and this healthy reconstruction, lightly smoothed to reduce noise, becomes an anomaly map. The maps are not a diagnosis in themselves; they act as an extra cue that nudges the segmentation network toward regions more likely to harbor cancer. The team calls this approach adU-Net, short for anomaly-driven U-Net, a variation on the encoder-decoder architecture that has become a staple of medical image segmentation.

What makes this study stand out is not just the cleverness of the idea but its measured commitment to real-world rigor. The researchers don’t rely on a single image type or a single dataset. They test multiple reconstruction methods to generate anomaly maps, crop images to focus on the prostate’s region of interest (ROI), and evaluate performance on two datasets: the PI-CAI challenge set and an external test set called Prostate158. The goal is not a perfect score on a single benchmark but a robust signal that could translate into better, more reliable tools for clinicians. Behind the numbers also lies a practical answer to a stubborn problem in medical imaging, data imbalance: when examples of cancer are far outnumbered by examples of healthy tissue, anomaly detection helps level the playing field.

Rethinking abnormality in medical imaging

To understand adU-Net, picture a photographer learning what clean, unblemished skin looks like and then comparing a new portrait to that standard. The differences highlight spots, shadows, or textures that don’t belong. In this study, the “photographer” is a reconstruction model, and the target is a healthy prostate image. The team experimented with four reconstruction architectures—two autoencoders, a diffusion model, and a Fixed-Point Generative Adversarial Network (Fixed-Point GAN). Each model takes an MRI slice and attempts to recreate a version that looks like healthy tissue. The anomaly map is simply the absolute difference between the original image and this healthy reconstruction, blurred slightly to reduce noise. The more faithful the healthy reconstruction, the crisper the anomaly map’s spotlight on unusual regions.
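The residual-plus-blur step can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors’ code: it assumes a 2D slice and a healthy reconstruction already produced by some model, and it assumes the “slight blur” is a Gaussian with a hypothetical width `sigma`. The paper’s actual smoothing kernel and parameters may differ.

```python
import numpy as np


def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalized 1D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    return kernel / kernel.sum()


def gaussian_blur_2d(image: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur; edge padding keeps the output the same shape as the input."""
    kernel = gaussian_kernel_1d(sigma)
    radius = len(kernel) // 2
    padded = np.pad(image, radius, mode="edge")
    # Blur each row, then each column ("valid" mode trims the padding back off).
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def anomaly_map(image: np.ndarray, healthy_recon: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Absolute difference between a scan and its healthy reconstruction, lightly smoothed."""
    if image.shape != healthy_recon.shape:
        raise ValueError("image and reconstruction must have the same shape")
    residual = np.abs(image.astype(np.float64) - healthy_recon.astype(np.float64))
    return gaussian_blur_2d(residual, sigma)


# Toy example: the "scan" equals the healthy reconstruction except for one bright block.
rng = np.random.default_rng(0)
healthy = rng.normal(0.5, 0.01, size=(32, 32))
scan = healthy.copy()
scan[12:16, 20:24] += 0.4  # synthetic lesion absent from the reconstruction
amap = anomaly_map(scan, healthy, sigma=1.0)
peak = np.unravel_index(np.argmax(amap), amap.shape)
print(peak)  # lands inside rows 12-15, columns 20-23
```

Because the toy reconstruction matches the scan everywhere except the inserted block, the map is zero far from the block and peaks inside it. Real reconstructions are never perfect, so some background residual always remains, which is one reason a smoothing step helps.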

Why go through this trouble? Medical imaging datasets are notoriously imbalanced: cancers occupy only a small portion of the data, and lesions come in a wide range of sizes and shapes. A network that learns to recognize cancer only from the relatively few cancer examples available risks missing rare presentations or failing to generalize to a new scanner or population. Anomaly detection sidesteps part of that problem by modeling deviations from normal tissue, which is plentiful and may look more consistent across cohorts. In this project, the researchers crop to the prostate’s bounding box and apply masks to isolate the relevant tissue (the peripheral zone and transition zone). This isn’t mere housekeeping; it’s a deliberate move to reduce background noise and keep the signal where it matters most, in the tissue where csPCa (clinically significant prostate cancer) is most likely to reveal itself.
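A sketch of the cropping and masking step, assuming a co-registered zone segmentation is available as an integer label volume. The label values (1 for the peripheral zone, 2 for the transition zone), the margin, and the function names are hypothetical choices for illustration, not details from the paper.

```python
import numpy as np

PERIPHERAL_ZONE = 1  # hypothetical label values in the zone segmentation
TRANSITION_ZONE = 2


def bounding_box(mask: np.ndarray, margin: int = 0) -> tuple:
    """Slices spanning all nonzero voxels of `mask`, widened by `margin` and clipped to the volume."""
    coords = np.argwhere(mask)
    if coords.size == 0:
        raise ValueError("mask is empty")
    lo = np.maximum(coords.min(axis=0) - margin, 0)
    hi = np.minimum(coords.max(axis=0) + margin + 1, mask.shape)
    return tuple(slice(int(a), int(b)) for a, b in zip(lo, hi))


def crop_and_mask(
    image: np.ndarray,
    zones: np.ndarray,
    keep: tuple = (PERIPHERAL_ZONE, TRANSITION_ZONE),
    margin: int = 2,
) -> np.ndarray:
    """Crop `image` to the prostate bounding box and zero out voxels outside the kept zones."""
    zone_mask = np.isin(zones, keep)
    box = bounding_box(zone_mask, margin)
    return np.where(zone_mask[box], image[box], 0.0)


# Toy volume: 3 slices of 64 x 64 with a small peripheral and transition zone.
zones = np.zeros((3, 64, 64), dtype=np.uint8)
zones[1, 20:30, 25:40] = PERIPHERAL_ZONE
zones[1, 30:35, 28:36] = TRANSITION_ZONE
t2w = np.random.default_rng(0).random(zones.shape)
roi = crop_and_mask(t2w, zones)
print(roi.shape)  # (3, 19, 19)
```

The crop shrinks the array the downstream models see, and the mask removes tissue outside the zones, so neither the reconstruction model nor the anomaly map has to account for background anatomy.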

Among the four reconstruction methods, Fixed-Point GAN stood out. Rather than learning a single mapping from diseased to healthy, it is trained on two kinds of translation: a same-domain translation that should leave an image essentially unchanged (the “fixed point” in its name), which helps preserve anatomical detail, and a cross-domain translation that turns a diseased image into a healthy-looking one. The result is an anomaly map that appears to trace subtle, clinically meaningful deviations more faithfully than the other approaches. In the paper’s comparisons, Fixed-Point GAN repeatedly outperformed the other architectures on cropped prostate images, yielding higher structural similarity (SSIM) and sharper detail, a crucial advantage when every millimeter can matter for a tumor’s boundaries.
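For reference, SSIM compares two images through their means, variances, and covariance. The sketch below computes a single global SSIM value over the whole image with the usual constants K1 = 0.01 and K2 = 0.03. This is a simplification: standard SSIM averages the same quantity over local sliding windows (as `skimage.metrics.structural_similarity` does), and the exact SSIM settings used in the paper are not given here.

```python
import numpy as np


def global_ssim(x: np.ndarray, y: np.ndarray, data_range: float = 1.0) -> float:
    """SSIM computed once over the whole image (no sliding window); a simplification."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (0.01 * data_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    dx, dy = x - mu_x, y - mu_y
    var_x, var_y = (dx * dx).mean(), (dy * dy).mean()
    cov_xy = (dx * dy).mean()
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x * mu_x + mu_y * mu_y + c1) * (var_x + var_y + c2)
    return float(num / den)


rng = np.random.default_rng(1)
reference = rng.random((64, 64))
close_recon = np.clip(reference + rng.normal(0, 0.02, reference.shape), 0, 1)
flat_recon = np.full_like(reference, reference.mean())  # right brightness, no structure
print(global_ssim(reference, reference))  # 1.0
print(global_ssim(reference, close_recon) > global_ssim(reference, flat_recon))  # True
```

The flat reconstruction matches the average intensity perfectly yet scores near zero, which illustrates why SSIM is a better gauge of a faithful, detailed reconstruction than a plain intensity comparison.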

How adU-Net channels anomalous hints into segmentation

The second pillar of the study is the integration trick: feed the anomaly map alongside the original bpMRI data into a segmentation network. The baseline is nnU-Net, a self-configuring framework that has become a standard for medical image segmentation. In adU-Net, the anomaly map is added as an extra input channel, so the network can weigh both the raw MRI data and the highlighted deviations when predicting segmentation masks. It’s like giving the model a built-in “hotspot” layer that points to suspicious regions while still letting it consider the broader anatomy.
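Conceptually, the extra input channel amounts to stacking arrays. Below is a minimal NumPy sketch, assuming all volumes are already co-registered on one voxel grid; the channel order, array sizes, and the idea of one anomaly map per sequence for an “all bpMRI” variant are illustrative assumptions, not details taken from the paper. In nnU-Net itself, each input channel is normally supplied as a separate image file distinguished by a four-digit channel suffix (such as `_0000`, `_0001`), so a real pipeline would write these channels to disk rather than stack them in memory.

```python
import numpy as np

SEQUENCE_ORDER = ("t2w", "adc", "dwi")  # hypothetical channel order


def build_adunet_input(sequences: dict, anomaly_maps: dict) -> np.ndarray:
    """Stack bpMRI sequences, then any available anomaly maps, into a channels-first array.

    All inputs are assumed to be co-registered and resampled to the same grid.
    """
    channels = [sequences[name] for name in SEQUENCE_ORDER]
    channels += [anomaly_maps[name] for name in SEQUENCE_ORDER if name in anomaly_maps]
    shape = channels[0].shape
    if any(c.shape != shape for c in channels):
        raise ValueError("all channels must share one voxel grid")
    return np.stack([c.astype(np.float32) for c in channels], axis=0)


rng = np.random.default_rng(0)
bpmri = {name: rng.random((16, 64, 64)) for name in SEQUENCE_ORDER}
anoms = {name: rng.random((16, 64, 64)) for name in SEQUENCE_ORDER}

adc_variant = build_adunet_input(bpmri, {"adc": anoms["adc"]})
all_variant = build_adunet_input(bpmri, anoms)
print(adc_variant.shape, all_variant.shape)  # (4, 16, 64, 64) (6, 16, 64, 64)
```

Nothing about the segmentation network changes except the number of input channels, which is what makes this kind of fusion cheap to try on top of an existing baseline.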

The input data are biparametric MRI sequences, a reduced subset of multiparametric MRI: T2-weighted imaging (T2W), diffusion-weighted imaging (DWI), and the apparent diffusion coefficient (ADC) map. The team shows that combining these sequences makes segmentation more robust, and that the anomaly maps offer an extra lift. Anomaly maps computed from the ADC map, which is itself derived from the diffusion-weighted images, proved particularly valuable. On the validation data, the adU-Net variant that used ADC anomaly maps achieved the best lesion-level detection score (average precision, AP) and kept pace with the strongest configurations overall. On the external Prostate158 test set, the ADC variant delivered the highest AUROC (area under the receiver operating characteristic curve) among all configurations, while the variant that used all bpMRI sequences together achieved the best overall average score.
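The two headline metrics can be made concrete with a small pure-Python sketch. In the PI-CAI setting, AUROC typically measures patient-level diagnosis (does the case contain csPCa?) and AP measures lesion-level detection. The sketch computes AUROC through its pairwise-ranking definition and AP as the mean precision at the rank of each true positive. It is a simplification: lesion-level AP also requires deciding which predicted lesions match which ground-truth lesions, a step assumed here to have been done already. The function names and toy data are illustrative.

```python
def auroc(labels, scores):
    """Probability that a random positive case scores higher than a random negative one.

    Ties count as half a win (the Mann-Whitney U formulation of AUROC).
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def average_precision(labels, scores, num_positives=None):
    """Mean precision at the rank of each true positive, ranking by descending score.

    `num_positives` lets missed ground-truth lesions (never detected) count
    against recall; by default only the listed positives are counted.
    Tied scores are not handled specially.
    """
    total_pos = sum(labels) if num_positives is None else num_positives
    if total_pos == 0:
        raise ValueError("no positives to evaluate")
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    hits, precision_sum = 0, 0.0
    for rank, i in enumerate(order, start=1):
        if labels[i] == 1:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / total_pos


# Patient-level AUROC: 1 = csPCa present, score = predicted likelihood.
print(auroc([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1]))  # 0.75
# Lesion-level AP: each candidate detection already marked true (1) or false (0).
print(round(average_precision([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1]), 4))  # 0.8333
```

AUROC rewards ranking sick patients above healthy ones, while AP penalizes every false detection ranked above a true lesion, which is why AP values in this task tend to sit well below AUROC.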

To put numbers on the improvement: on the external test set, adU-Net with ADC anomaly maps reached an AUROC of 0.8026 and an AP of 0.4306, for an overall average (the mean of the two) of about 0.617. The multi-sequence adU-Net variant (all bpMRI) achieved a slightly higher average of about 0.6181 on the external data, underscoring the value of fusing information from multiple MRI sequences. The results aren’t just about pushing a single metric higher; they suggest an approach that holds up better when scans come from different sources, a perennial hurdle in medical AI.
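The overall average is easy to check by hand, assuming (as in the PI-CAI challenge ranking) that it is the plain mean of AUROC and AP. The sketch below reproduces that arithmetic from the reported external-test numbers; `overall_score` is a hypothetical helper name.

```python
def overall_score(auroc: float, ap: float) -> float:
    """Plain mean of AUROC and AP (assumed to be how the overall average is formed)."""
    return (auroc + ap) / 2


adc_variant = overall_score(auroc=0.8026, ap=0.4306)
all_bpmri_average = 0.6181  # reported average for the multi-sequence variant
print(round(adc_variant, 4))                      # 0.6166
print(round(all_bpmri_average - adc_variant, 4))  # 0.0015
```

On this measure the ADC-only and all-bpMRI variants differ by only about 0.0015, so on the external data the two configurations are close.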

The authors were candid about variability across datasets. While the multi-modal adU-Net configurations generally outperformed the nnU-Net baseline, some performance gaps persisted between the validation and external test sets. That’s a reminder that real-world deployment means contending with the quirks of different scanners, protocols, and patient populations. Still, the results suggest that incorporating anomaly information helps the network generalize beyond the precise conditions of a single dataset, which matters for tools intended to assist radiologists in busy clinics around the world.

Why this could change medical imaging—and its caveats

The practical upshot of this work is inspiring for anyone who watches the gap between research and clinical care. If anomaly-driven segmentation can reliably improve performance across diverse data sources, it could reduce the time radiologists spend outlining tumors and standardize a level of precision that’s hard to achieve with manual segmentation alone. The approach also hints at a broader design principle for medical AI: teach the model what normal looks like, then use the abnormal to guide the search. That could translate to other organs and diseases, potentially offering more robust performance when annotated data are scarce or heterogeneous.

There are caveats worth keeping in mind. Not every sequence benefits equally from anomaly maps: diffusion-weighted images are noisier in some contexts, which can dampen the usefulness of DWI-based anomaly maps. The improvements also hinge on the quality of preprocessing, including accurate cropping of the prostate ROI and masking of irrelevant tissue. In other words, the pipeline’s gains owe as much to careful data handling as to clever modeling. The study also makes clear that anomaly maps are not a silver bullet. They help, but they don’t replace the need for diverse training data, better anomaly detection techniques, or smarter fusion strategies that can extract complementary cues from different MRI sequences without amplifying noise.

Looking ahead, the authors propose refining anomaly-detection methods, exploring more sophisticated multi-modal fusion, and extending the idea to other challenging segmentation tasks in medical imaging. If such work can demonstrate consistent gains across multiple centers and scanner families, it could push AI-assisted radiology from a curiosity in a lab to a routine clinical tool. The broader implication isn’t just faster segmentation; it’s a potential path toward more explainable AI in medicine. Anomaly maps are, by their nature, interpretable: they visually flag where a model thinks something is off. That transparency can help clinicians trust and cross-check AI-driven suggestions—an essential ingredient for real-world adoption.

The study deserves credit for tying the work to practical clinical needs. The PI-CAI dataset and the external Prostate158 set, both publicly available, provide a rigorous testing ground that reflects the variability of real-world practice. The paper names the University of Amsterdam, the Netherlands Cancer Institute, and Maastricht University as the research homes behind the effort, with Alessia Hu leading the author list and Regina Beets-Tan, Lishan Cai, and Eduardo Pooch as co-authors. In a field that sometimes feels seduced by ever-fancier architectures, this team’s emphasis on robust evaluation, clinical payoff, and the pragmatic steps of cropping and masking is a refreshing reminder: the best AI in medicine may be the kind that learns not just to see, but to see well where it matters most.

In the end, the anomaly-driven approach is less about replacing human expertise than augmenting it. It offers a way to focus attention, reduce the drag of data scarcity, and push segmentation toward the most clinically relevant presentations of csPCa. If the next few years bring broader validation and smoother integration into radiology workflows, adU-Net and its anomaly maps could become a small but meaningful lever for improving cancer care at scale—without sacrificing the human judgment that doctors bring to every image they read.