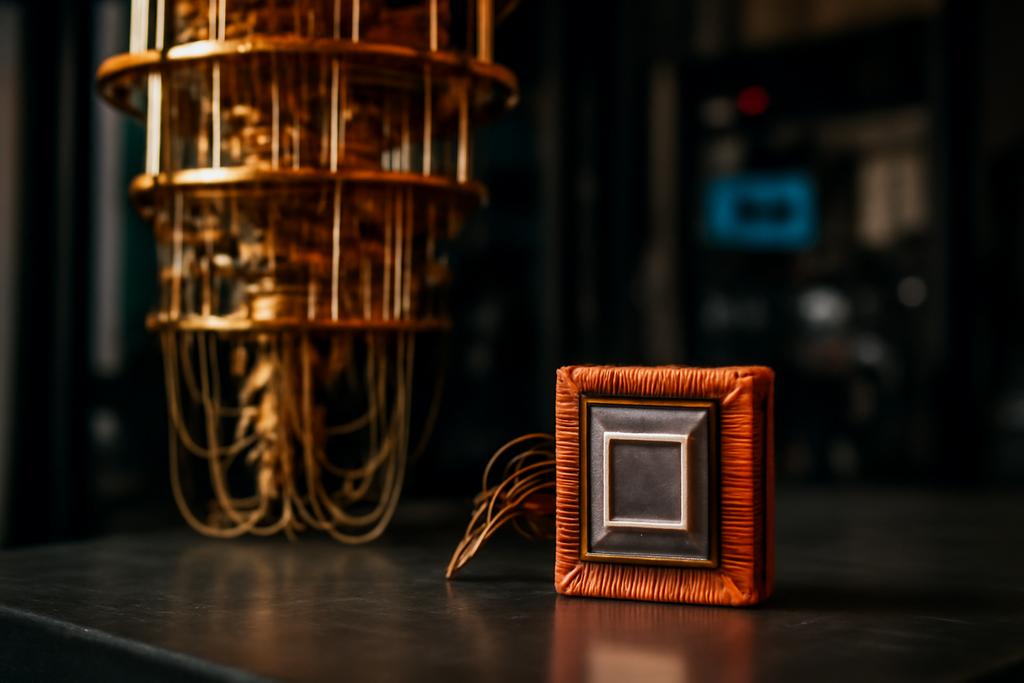

Quantum computers promise to solve problems classical machines can’t crack, but their memory layer—the quantum random access memory, QRAM—has been the stubborn bottleneck. QRAMs are meant to let a quantum processor fetch data from a database in a superposition, enabling powerful operations like searching an unordered database or assembling a desired quantum state directly from classical inputs. The magic lies in coherence: the same qubits must stay in step while many addresses are probed at once. When that coherence breaks, the whole computation falters.

That promise comes with a brutal overhead: giving every qubit the same level of error correction drives the physical-qubit count far past what current hardware can sustain. New work from Northwestern University asks whether error protection can be rethought not as a uniform blanket but as a tailored jacket, heavier where it is needed and lighter where it costs little. The result is a design that could keep QRAM faithful while using far fewer physical qubits than conventional schemes.

What QRAM is and why it matters

QRAM is not your ordinary RAM. In a classical memory, a numbered address returns a single value. In a QRAM, the address register can be placed in a quantum superposition of many addresses, and the memory returns the corresponding superposition of data, with each address paired with its own stored value. That capability unlocks new kinds of quantum algorithms: coherently querying a database, preparing superpositions of states that encode a dataset, or applying operations that depend on classical data across every branch of a superposition at once.
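In standard textbook notation (the symbols here are chosen for illustration, not taken from the paper), suppose N memory cells hold classical values x_0 through x_{N-1}. A QRAM query then acts linearly on an address register A, whose amplitudes are α_i, and on a data register D that starts in |0⟩:

```latex
\sum_{i=0}^{N-1} \alpha_i \, |i\rangle_A \, |0\rangle_D
\;\longmapsto\;
\sum_{i=0}^{N-1} \alpha_i \, |i\rangle_A \, |x_i\rangle_D
```

A classical RAM lookup is the special case in which only one α_i is nonzero.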

The potential is immense, but the hardware reality is harsher. Decoherence, readout errors, and the sheer footprint of storing classical data in a quantum-accessible form all conspire to make a high-fidelity QRAM expensive. Guarding every qubit with equally heavy error correction produces a qubit count that would outpace any near-term hardware. The Northwestern paper sets out a middle path: quantify how much protection is really needed, and where, and performance can improve without blowing up resources.

Heterogeneous error correction flips the script

The core idea is intuitive once you picture the QRAM as a tree. In a bucket-brigade QRAM, address bits propagate down a tree of routers to reach memory cells. An error in a router near the root can corrupt a large fraction of the queried addresses, while an error near the leaves affects only a small subset. That asymmetry suggests a strategy: protect the root more aggressively and give the leaves a lighter touch.
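The asymmetry is easy to see in a classical analogue of bucket-brigade routing. The sketch below is a minimal illustration under a simplifying assumption: it follows one classical address at a time down a binary router tree, whereas a real QRAM routes every address in superposition at once. It then counts how many of the N addresses pass through each router. The function name `route` and the (level, index) labeling are hypothetical and are not taken from the paper.

```python
from collections import Counter


def route(address: int, n_bits: int) -> list[tuple[int, int, str]]:
    """Classical walk of one address down a binary router tree (root = level 0).

    The router visited at level l is indexed by the l address bits already
    consumed; the next most significant bit picks the left or right child."""
    path = []
    for level in range(n_bits):
        router_index = address >> (n_bits - level)
        bit = (address >> (n_bits - 1 - level)) & 1
        path.append((level, router_index, "right" if bit else "left"))
    return path


n_bits = 3  # N = 8 memory cells, 7 routers
# Count how many of the N addresses pass through each router.
load = Counter(
    (level, index)
    for address in range(2 ** n_bits)
    for level, index, _ in route(address, n_bits)
)

for (level, index), hits in sorted(load.items()):
    print(f"router (level {level}, index {index}): {hits} of {2 ** n_bits} addresses")
```

Every address passes through the root, each router on the next level sees half of them, and each router just above the leaves sees only two. A fault at the root therefore touches every branch of a query.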

In quantum error correction terms, protection here comes from the surface code, in which each logical qubit is encoded on a patch whose size is set by a code distance d. A larger d means better protection but more physical qubits, since a patch’s qubit count grows roughly as d². Letting d increase toward the root makes the architecture “heterogeneous,” hence the name. Near the leaves, where routers are numerous but each one serves only a small share of the addresses, protection can be lighter.
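To see why lighter protection at the leaves pays off, the sketch below counts the physical qubits in a router tree under a distance schedule. It is a minimal sketch, and every parameter is an assumption. A rotated surface-code patch of distance d is taken to use 2d² − 1 physical qubits (d² data plus d² − 1 measurement qubits). Each router is assumed to hold three logical qubits, loosely echoing the data, address, and bus qubits described for Scheme 1. The schedule `distance_schedule`, which drops d by 2 per level, is hypothetical and is not the paper’s rule. The uniform baseline simply copies the root’s distance everywhere.

```python
def patch_qubits(d: int) -> int:
    """Physical qubits in one rotated surface-code patch of distance d:
    d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1


def distance_schedule(n_levels: int, d_root: int, d_min: int) -> list[int]:
    """Hypothetical schedule: start at d_root at the root (level 0) and drop
    the distance by 2 per level (an odd d_root stays odd), never below d_min."""
    return [max(d_min, d_root - 2 * level) for level in range(n_levels)]


def tree_qubits(distances: list[int], logical_per_router: int = 3) -> int:
    """Physical qubits for a binary router tree: level l has 2**l routers,
    each holding `logical_per_router` logical qubits at distance distances[l]."""
    return sum(
        2 ** level * logical_per_router * patch_qubits(d)
        for level, d in enumerate(distances)
    )


n_levels = 10  # 10 address bits -> N = 1024 memory cells
hetero = distance_schedule(n_levels, d_root=21, d_min=5)
uniform = [21] * n_levels  # baseline: root-level protection everywhere

print("heterogeneous distances:", hetero)
print(f"heterogeneous: {tree_qubits(hetero):,} physical qubits")
print(f"uniform:       {tree_qubits(uniform):,} physical qubits")
```

About half of all routers sit on the deepest level, so trimming the distance there drives most of the savings. With these made-up parameters, the heterogeneous tree needs less than a tenth of the qubits of the uniform one. The count says nothing about fidelity, which depends on how the distances are actually chosen.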

The punchline is resource-aware resilience: you don’t need the same level of protection everywhere; you need the right protection where it matters most for the task at hand, and that can dramatically cut the number of qubits required while keeping queries faithful.

What the math says about fidelity and overhead

The authors model how well a QRAM returns the right data when some routers in the tree are faulty. They track the “query fidelity,” which measures how close the memory’s output stays to the ideal superposition, and how often a router error ruins the result. The key finding is that heterogeneity matters: assigning stronger protection to the handful of routers near the root can dramatically improve fidelity without paying for that protection everywhere.
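A toy calculation shows how query fidelity responds to a single faulty router. It is a minimal sketch under strong assumptions that are not the paper’s error model. All addresses are queried with equal amplitude, the stored data are single bits, and a failed router flips the data bit on every branch that passes through it. Fidelity is computed as the squared overlap |⟨ideal|actual⟩|² between real-amplitude states. The helper names and the eight-cell database are hypothetical.

```python
import math


def query_output(data: list[int], corrupted: frozenset[int] = frozenset()) -> dict[tuple[int, int], float]:
    """Toy query output for a uniform superposition over all addresses.

    Each address i is paired with its data bit data[i]; addresses in
    `corrupted` get the flipped bit instead (toy error model, an assumption)."""
    amp = 1 / math.sqrt(len(data))
    return {(i, bit ^ (i in corrupted)): amp for i, bit in enumerate(data)}


def fidelity(ideal: dict, actual: dict) -> float:
    """|<ideal|actual>|^2 for real-amplitude states stored as {basis: amplitude}."""
    overlap = sum(amp * actual.get(basis, 0.0) for basis, amp in ideal.items())
    return overlap ** 2


def addresses_under(level: int, index: int, n_bits: int) -> frozenset[int]:
    """Addresses routed through router `index` at `level` (root = level 0)."""
    span = 2 ** (n_bits - level)
    return frozenset(range(index * span, (index + 1) * span))


n_bits = 3
data = [0, 1, 1, 0, 1, 0, 0, 1]  # hypothetical 8-cell classical database
ideal = query_output(data)

for level in range(n_bits):
    bad = addresses_under(level, 0, n_bits)
    f = fidelity(ideal, query_output(data, bad))
    print(f"failed router at level {level}: {len(bad)}/{len(data)} branches hit, F = {f:.4f}")
```

In this toy model a root failure drives the fidelity to zero, while a failure just above the leaves leaves most of the superposition intact.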

Two concrete schemes are explored. The first keeps the standard bucket-brigade design, but the code distance at each level increases toward the root. The math shows that the resulting query infidelity can grow only polylogarithmically with the memory size N, a meaningful improvement over the uniformly error-corrected baseline. In other words, scaling up the QRAM does not bring a runaway fidelity penalty, or at least the penalty grows far more slowly than before.

In the second scheme, each router holds more of the address information itself. The router chain then needs to stay coherent for much less time per level, and the same time budget is spent in a different way. If this is pushed further, so that routing time scales with the code distance rather than with the overall depth of the memory tree, the authors show that the infidelity can in principle be held to a constant that does not grow with N. That is a striking, if contingent, result: a memory that stays reliable as it grows.
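The scaling claims above can be made concrete by asking how much the infidelity would multiply when the memory grows from N = 2^10 to N = 2^20 cells. This is an illustrative sketch, not the paper’s bounds. The exponent 2 in the polylogarithmic law is an assumption chosen for the example, and the linear law is shown only for contrast.

```python
import math


def growth_factor(scaling, n_small: int, n_large: int) -> float:
    """Ratio of infidelity at N = 2**n_large to infidelity at N = 2**n_small
    under a given scaling law f(N)."""
    return scaling(2 ** n_large) / scaling(2 ** n_small)


# Illustrative scaling laws; the exponent 2 is an assumption, not the paper's.
laws = {
    "linear in N": lambda N: N,
    "polylog, (log2 N)^2": lambda N: math.log2(N) ** 2,
    "constant": lambda N: 1.0,
}

for name, law in laws.items():
    print(f"{name:>20}: infidelity multiplies by {growth_factor(law, 10, 20):g}")
```

A thousandfold increase in memory size multiplies a linear infidelity by about a thousand, the assumed polylogarithmic law by only four, and a constant law not at all.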

Two practical routes to heterogeneous QRAM

The paper doesn’t stop at theory. It also works through how such a heterogeneous QRAM might actually be built, using two concrete schemes. In the first, Scheme 1, each router remains a small unit with a data qubit, an address qubit, and a bus qubit. The bus that carries information down the tree travels in lockstep with the address bits, so there is no separate, long coherence cost at the end. The upshot is a modest but real improvement in fidelity, with a qubit budget that grows roughly linearly with memory size, scaled by a fixed constant factor.

In the second scheme, Scheme 2, each router keeps an entire register of address bits. This is heavier in qubit count, since every router carries more information, but it cuts the routing steps so that their cost scales with the code distance d. That buys a markedly better fidelity profile, sometimes at the cost of a longer overall query time and a bigger hardware footprint. The authors quantify the tradeoffs. For large N, Scheme 1 requires about 108 N physical qubits and Scheme 2 about 192 N, while a uniformly protected QRAM would need a different, often larger, qubit budget for comparable fidelity.
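The quoted large-N estimates of roughly 108 N and 192 N physical qubits translate into absolute budgets as follows. This sketch is plain arithmetic on those two constants. It assumes N = 2^n memory cells and ignores the lower-order terms and ancilla overheads that the estimates leave out. The names `physical_qubits`, `SCHEME_1_PER_CELL`, and `SCHEME_2_PER_CELL` are hypothetical.

```python
# Large-N overhead constants as quoted in the text (physical qubits per memory cell).
SCHEME_1_PER_CELL = 108
SCHEME_2_PER_CELL = 192


def physical_qubits(n_address_bits: int, per_cell: int) -> int:
    """Approximate physical-qubit count for N = 2**n_address_bits memory cells."""
    return per_cell * 2 ** n_address_bits


for n in (10, 20, 30):
    s1 = physical_qubits(n, SCHEME_1_PER_CELL)
    s2 = physical_qubits(n, SCHEME_2_PER_CELL)
    print(f"N = 2^{n}: Scheme 1 ~ {s1:,}, Scheme 2 ~ {s2:,}, ratio {s2 / s1:.2f}")
```

Scheme 2 always costs about 1.78 times as much as Scheme 1, since the ratio is just 192/108. The linear factor dominates: at N = 2^20, both estimates already exceed 10^8 physical qubits.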

Both schemes embody a broader point: clever resource allocation can punch above its weight. There is no need to put the entire QRAM behind the same heavy guardrail; the guardrails can be tuned to the architecture’s real sensitivities. The authors also compare their heterogeneous designs against a uniformly error-corrected QRAM baseline, showing clear fidelity gains for qubit counts of roughly the same order. That is the practical signal: QRAM may be able to scale without an overwhelming qubit overhead.

The authors caution that their analysis leaves out some complexities. It assumes no gate errors and omits certain ancillary overheads inherent to lattice-surgery operations. Still, the result is a meaningful map forward: for a scalable, high-fidelity QRAM, heterogeneity in error protection is a feature, not a bug.

Why this could change the trajectory of quantum computing

QRAM has long loomed as both a gateway and a bottleneck. It’s the kind of component that could unlock dramatic speedups in data-heavy quantum tasks, but its physical realization has seemed stubbornly out of reach. The Northwestern study reframes the problem as a resource allocation puzzle: the memory’s own structure tells you where to invest protection. The root of the memory tree is the high-leverage choke point; give it robust error correction and you unlock big gains for the entire memory.

That shift mirrors a broader truth about engineering tricky systems: one-size-fits-all protection is often wasteful. In many domains—from cloud computing to urban planning—allocating limited resources where they matter most yields outsized returns. The same logic, translated into quantum hardware, could tilt the balance from the dream of QRAM to the QRAM we can actually build.

The work, led by Ansh Singal and Kaitlin N. Smith at Northwestern University, is a theoretical exploration with clear next steps. It shows that a heterogeneous approach can keep query infidelity growing only polylogarithmically with memory size, or even hold it to a constant, while using far fewer physical qubits than a uniformly protected QRAM. It also lays out concrete circuit ideas and overhead estimates that hardware designers can use as a starting point for real devices. The authors are careful about what remains to be proven in the lab, including gate errors, lattice-surgery realities, and other architectural details. Still, the central idea is a practical one: fit the shield to where the blows actually land, rather than armoring everything equally.

In the end, the paper offers a hopeful signal about the path to scalable quantum memory. If QRAM can be made faithful without bankrupting the hardware, it could accelerate a wave of quantum algorithms that rely on fast, coherent data access. The field already knows that every qubit is precious; this work suggests a smarter way to spend them, focusing protection where it actually matters and letting lighter protection do the rest.