Forget multiple-choice tests. Researchers at the University of Waterloo and the University of Toronto have devised a far more rigorous way to evaluate artificial intelligence: pitting advanced language models against each other in a structured debate format. This isn’t your high school debate club; this is a high-stakes showdown designed to reveal the true depth of an AI’s reasoning abilities, not just its capacity to memorize and regurgitate information.

Beyond the Buzzwords

The current landscape of AI evaluation is, frankly, a mess. Models improve so quickly that existing benchmarks are often saturated soon after release, leaving researchers scrambling to build harder ones. It’s an arms race: the models get stronger, the benchmarks get harder, and the cycle is ultimately unsustainable. Data contamination complicates matters further. When test questions leak into a model’s training data, the model can score well simply by recalling memorized answers rather than reasoning its way to them.

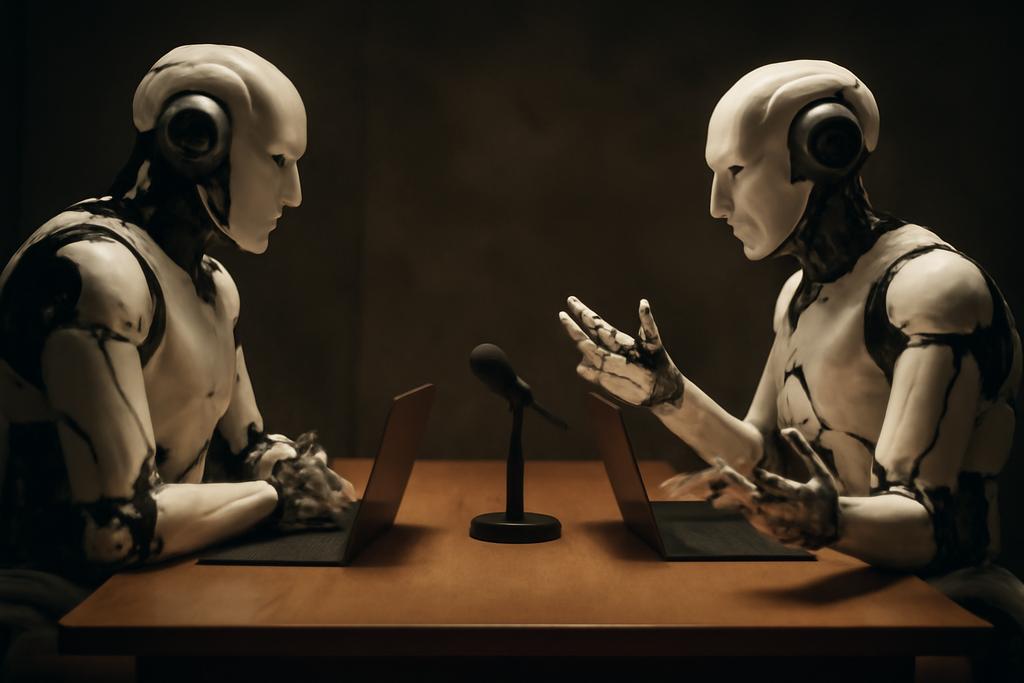

Linbo Cao and Jinman Zhao’s approach neatly sidesteps these issues. Their method transforms any standard question-answering dataset into a series of adversarial debates. Two AI models square off: one defends the official answer, while the other argues for a different answer and tries to refute the first. A third model acts as judge. It is never told which answer is correct and decides the winner solely on the arguments presented. Because the exchange runs over multiple rounds, each debater must respond to its opponent’s points and keep its own position coherent, rather than simply stating an answer.
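The debate protocol described above can be sketched as a short program. This is a minimal illustration under stated assumptions, not the authors’ implementation: each model is represented as a plain Python function from prompt to reply (standing in for a real LLM call), the opponent’s alternative answer is drawn at random from the question’s other options, and the names `QAItem`, `Debate`, `run_debate`, and the prompt wording are all hypothetical. The source says only that the judge is blind to the true answer; here that is approximated by relabelling the debaters as A and B in random order so neither side is marked as official.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical interface: a "model" is any function from prompt text to reply text.
# A real setup would wrap an LLM API call here.
Model = Callable[[str], str]


@dataclass
class QAItem:
    question: str
    options: List[str]
    answer_index: int  # position of the official answer in `options`


@dataclass
class Debate:
    question: str
    official: str      # answer defended by the proponent
    alternative: str   # different answer argued by the opponent
    transcript: List[Tuple[str, str]] = field(default_factory=list)
    winner: str = ""   # "proponent" or "opponent", filled in from the judge's verdict


def render(transcript: List[Tuple[str, str]]) -> str:
    """Format (speaker, text) pairs as a plain-text debate history."""
    return "\n".join(f"{speaker}: {text}" for speaker, text in transcript)


def run_debate(item: QAItem, proponent: Model, opponent: Model, judge: Model,
               rounds: int = 2, seed: int = 0) -> Debate:
    """Turn one multiple-choice item into a multi-round debate plus a verdict."""
    rng = random.Random(seed)
    official = item.options[item.answer_index]
    # Assumption: the opponent argues for a randomly chosen distractor option.
    alternative = rng.choice(
        [opt for i, opt in enumerate(item.options) if i != item.answer_index])
    debate = Debate(item.question, official, alternative)

    # Each round, both debaters see the question, their assigned answer,
    # and everything said so far, so later turns can rebut earlier ones.
    for r in range(1, rounds + 1):
        for role, model, stance in (("proponent", proponent, official),
                                    ("opponent", opponent, alternative)):
            prompt = (f"Round {r}. Question: {item.question}\n"
                      f"Defend the answer: {stance}\n{render(debate.transcript)}")
            debate.transcript.append((role, model(prompt)))

    # Blind judging: debaters are relabelled A/B in random order, and the
    # judge is never told which answer is the official one.
    swap = rng.random() < 0.5
    label: Dict[str, str] = {"proponent": "B" if swap else "A",
                             "opponent": "A" if swap else "B"}
    stance_of = {label["proponent"]: official, label["opponent"]: alternative}
    blind = [(label[role], text) for role, text in debate.transcript]
    verdict = judge(f"Question: {item.question}\n"
                    f"Debater A defends: {stance_of['A']}\n"
                    f"Debater B defends: {stance_of['B']}\n"
                    f"{render(blind)}\n"
                    "Which debater argued more convincingly? Reply A or B.")
    picked = "A" if verdict.strip().upper().startswith("A") else "B"
    debate.winner = "proponent" if picked == label["proponent"] else "opponent"
    return debate


# Usage with stub models (placeholders, not real LLMs).
item = QAItem("2 + 2 = ?", ["3", "4", "5"], answer_index=1)
debate = run_debate(
    item,
    proponent=lambda p: "Two plus two is four.",
    opponent=lambda p: "I maintain a different total.",
    judge=lambda p: "A" if "Debater A defends: 4\n" in p else "B",
)
print(debate.winner)  # prints "proponent"
```

The stub judge here simply favours whichever label defends “4”, so the proponent wins regardless of which letter it was assigned; a real judge model would instead read the transcript. Keeping the label mapping inside `run_debate` means the judge never sees the words “proponent” or “official”, which is the property the blind-judging step is meant to preserve.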

The Power of Argumentation

The strength of this system is that it exposes shallow memorization. A model that has merely memorized the answers to a test set is likely to struggle to produce coherent arguments for those answers, let alone defend them against a well-reasoned challenge. The multi-round structure is key here: success depends not on producing a single correct answer but on building a persuasive, logical argument that can withstand scrutiny.

Imagine a courtroom where the answer itself is on trial. One model acts as the prosecution, presenting the official answer and the evidence for it. The other acts as the defense, trying to undermine the prosecution’s case with a counter-argument. The judge, a neutral observer, hears both sides and delivers a verdict based on the quality of the reasoning and argumentation.

Testing the Waters

The researchers tested their system on a subset of questions from the MMLU-Pro benchmark, a notoriously challenging test for AI. They found that the debate-driven approach revealed significant differences between models that were not apparent in traditional multiple-choice evaluations. In some cases, models that scored highly on the original MMLU-Pro test performed poorly in the debate format, indicating that their success may have relied more on memorization than actual understanding.
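One simple way to see how debate results can reorder models is to tally each model’s win rate across debates and compare that ranking with its multiple-choice accuracy. The source does not say how the authors score or rank models, so the win-rate metric, the `(proponent, opponent, winner)` record format, and the function names below are all assumptions for illustration. The numbers in the usage example are toy values, not results from the study.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical record format: (proponent_model, opponent_model, winner_role),
# where winner_role is "proponent" or "opponent".
Record = Tuple[str, str, str]


def debate_win_rates(records: Iterable[Record]) -> Dict[str, float]:
    """Fraction of its debates that each model won, whichever side it argued."""
    wins: Dict[str, int] = defaultdict(int)
    played: Dict[str, int] = defaultdict(int)
    for pro, con, winner in records:
        played[pro] += 1
        played[con] += 1
        wins[pro if winner == "proponent" else con] += 1
    return {m: wins[m] / played[m] for m in played}


def ranking(scores: Dict[str, float]) -> List[str]:
    """Model names from best to worst score; ties broken alphabetically."""
    return sorted(scores, key=lambda m: (-scores[m], m))


def rank_shifts(mcq: Dict[str, float], debate: Dict[str, float]) -> Dict[str, int]:
    """Change in rank position from multiple choice to debate, for models in both.

    A positive value means the model ranks lower under debate.
    """
    common = set(mcq) & set(debate)
    mcq_pos = {m: i for i, m in enumerate(ranking({m: mcq[m] for m in common}))}
    deb_pos = {m: i for i, m in enumerate(ranking({m: debate[m] for m in common}))}
    return {m: deb_pos[m] - mcq_pos[m] for m in sorted(common)}


# Toy data for illustration only (not from the study).
records = [("m1", "m2", "opponent"), ("m2", "m3", "proponent"),
           ("m3", "m1", "proponent"), ("m1", "m3", "opponent")]
mcq_accuracy = {"m1": 0.9, "m2": 0.6, "m3": 0.7}
rates = debate_win_rates(records)
print(rank_shifts(mcq_accuracy, rates))  # prints {'m1': 2, 'm2': -2, 'm3': 0}
```

In this toy example, `m1` tops the multiple-choice ranking but loses every debate it takes part in, which is the kind of mismatch between the two formats described above. A plain win rate ignores opponent strength; a rating system that accounts for who beat whom would be a natural refinement.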

Perhaps most strikingly, they fine-tuned a Llama 3.1 model directly on the test questions, deliberately contaminating it. This dramatically boosted its score on the standard test (from 50% to 82% accuracy, a 32-point jump). In the debate setting, however, this “cheating” model performed worse than its original, un-fine-tuned counterpart. The result strongly suggests that the debate format is robust to data contamination.

Scaling Up and Looking Ahead

The system also scales. The researchers have already built a benchmark of 5,500 debates (over 11,000 rounds of argumentation) involving eleven different models, and they plan to release it publicly as a new tool for evaluating AI. Crucially, the difficulty of the debate format grows with the models themselves: as debaters become more capable, they produce stronger arguments and sharper rebuttals that their opponents must answer, so the benchmark should stay challenging instead of saturating the way static tests do.

This research makes a strong case for moving beyond simplistic evaluations of AI. The debate-driven approach offers a promising path toward more rigorous, fair, and sustainable benchmarks that assess the depth and robustness of AI systems rather than their ability to recall answers.