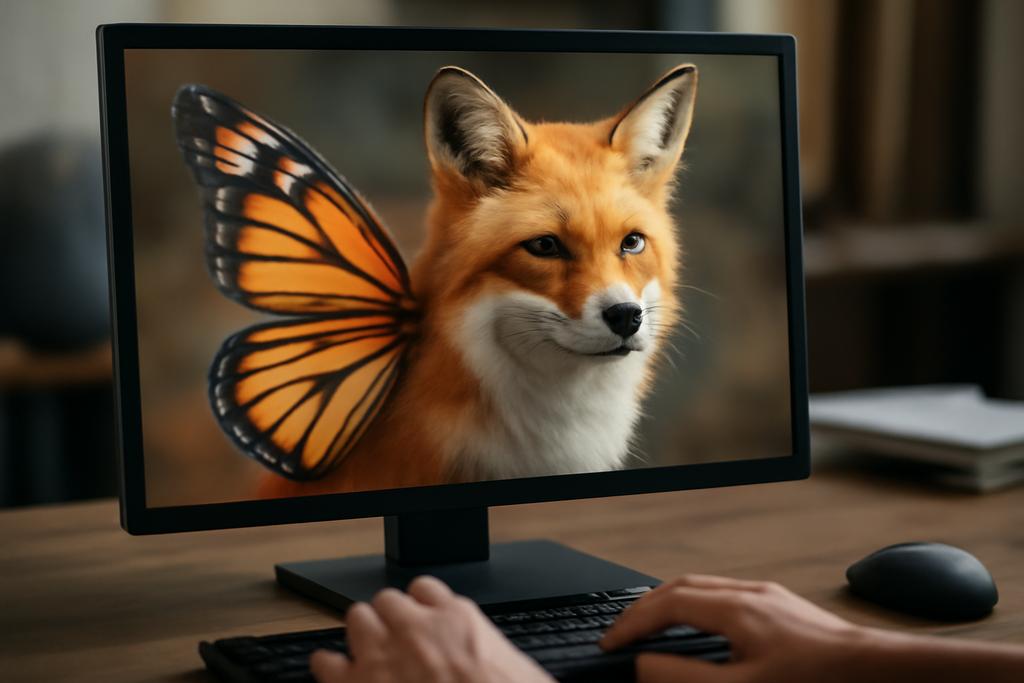

Artificial intelligence has reshaped how we generate pictures from ideas, turning vague thoughts into vivid visuals with a few keystrokes. But what happens when you want to fuse two ideas at once—not merely swap one for another, but blend their essences into a single, coherent image? A team from the University of Milano-Bicocca in Milan, Italy—led by Lorenzo Olearo along with Giorgio Longari, Alessandro Raganato, Rafael Peñaloza, and Simone Melzi—set out to answer that question. Their work probes whether modern text-to-image diffusion models can perform zero-shot concept blending: can these systems mix multiple concepts without additional training, fine-tuning, or clever prompt engineering, and still produce images that feel like real hybrids rather than rough mashups?

Highlights: The study tackles concept blending as a zero-shot challenge, tests four distinct in-pipeline strategies, and grounds its conclusions in a substantial user study. The upshot is a nuanced mix of capability and limitation—diffusion models can blend ideas creatively, but the results depend on subtle input details like prompt order and random seeds.

Diffusion models have become the artists of the modern AI age, translating short prompts into rich, high-resolution imagery. But human creativity isn’t simply about combining two words; it’s about weaving together ideas so the whole feels new yet recognizably grounded. The paper approaches this from a cognitive lens: blending concepts is a core human skill, and it might be mirrored—at least in part—in the latent representations that diffusion models learn during training. The authors’ aim is not to mimic human cognition exactly, but to test how far these powerful generative systems can go when asked to synthesize two ideas into one visual entity without any extra coaching.

Four zero-shot blending methods inside diffusion models

At the heart of the study are four distinct strategies for weaving two prompts together as the image is generated. Each method operates inside the diffusion pipeline, so no new training is required. The first method, TEXTUAL, blends the concepts by interpolating their embeddings in the text encoder’s latent space. Think of it as mixing the two ideas in the model’s own language before the image even starts taking shape. The second, SWITCH, starts denoising with the first prompt and switches to the second at a carefully chosen step in the middle of the process. Here, the early steps sculpt the basic silhouette while later steps add the texture and nuance from the second concept. The third approach, ALTERNATE, swaps the conditioning prompt at every denoising step, weaving features from both prompts across the entire synthesis. The fourth, UNET, takes a more architectural route: it splits the conditioning by location inside the denoising network, feeding one prompt into the encoder and bottleneck and the other into the decoder, effectively layering structure from one concept with style from the other.
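The four strategies can be read as different decisions about which prompt conditions which part of the process. The following is a minimal Python sketch of that idea, not the authors' code: the function names, the "A"/"B" labels, the use of plain linear interpolation, and the choice of which prompt takes the even steps or the encoder are all illustrative assumptions.

```python
from typing import Dict, List, Sequence


def textual_blend(emb_a: Sequence[float], emb_b: Sequence[float],
                  ratio: float = 0.5) -> List[float]:
    """TEXTUAL: interpolate two prompt embeddings (linear mix is an assumption)."""
    if len(emb_a) != len(emb_b):
        raise ValueError("embeddings must have the same length")
    return [(1.0 - ratio) * a + ratio * b for a, b in zip(emb_a, emb_b)]


def switch_schedule(num_steps: int, switch_step: int) -> List[str]:
    """SWITCH: prompt A conditions steps before switch_step, prompt B the rest."""
    return ["A" if t < switch_step else "B" for t in range(num_steps)]


def alternate_schedule(num_steps: int) -> List[str]:
    """ALTERNATE: swap the conditioning prompt at every step (A on even steps)."""
    return ["A" if t % 2 == 0 else "B" for t in range(num_steps)]


def unet_routing() -> Dict[str, str]:
    """UNET: one prompt guides encoder and bottleneck, the other the decoder."""
    return {"encoder": "A", "bottleneck": "A", "decoder": "B"}


if __name__ == "__main__":
    # Toy two-dimensional "embeddings"; real ones are high-dimensional tensors.
    print(textual_blend([0.0, 2.0], [2.0, 0.0]))
    print(switch_schedule(6, 3))
    print(alternate_schedule(6))
    print(unet_routing())
```

In a real pipeline, each label would select the text embedding passed to the denoising network at that step (or to that block of the network); the sketch only shows the bookkeeping.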

Highlights: TEXTUAL offers symmetric blending in embedding space; SWITCH and ALTERNATE leverage the iterative nature of diffusion; UNET taps into the network’s internal cross-attention blocks to sculpt a more nuanced fusion. The authors provide concrete diagrams and examples showing how each method behaves with simple prompts like lion vs cat, and increasingly complex pairings like a painting style blended with a subject.

The paper’s experiments unfold in a controlled, repeatable way using Stable Diffusion v1.4 as a backbone, with identical seeds and sampling settings across comparisons. By holding variables like the seed constant, the researchers aimed to isolate how much the blending mechanism itself shapes the final image, rather than noise-driven whimsy. In practice, this setup reveals a landscape of trade-offs: some strategies are very good at certain blends and weaker at others, and even the same pair of prompts can yield very different results if you change the seed or the order of the prompts.
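To see why a shared seed makes comparisons fair, here is a small sketch using only Python's standard library. It is an illustration under assumptions: `initial_noise`, `SEED`, and the vector size are hypothetical, and a real pipeline would seed its tensor library's generator rather than `random.Random`.

```python
import random
from typing import List


def initial_noise(seed: int, size: int) -> List[float]:
    """Draw the starting Gaussian noise from a seeded generator."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


# Hypothetical setup: one shared seed for every blending method under comparison.
SEED = 42
methods = ["TEXTUAL", "SWITCH", "ALTERNATE", "UNET"]
starts = {m: initial_noise(SEED, size=8) for m in methods}

# Every method begins from the same noise, so differences in the final
# image come from the blending mechanism rather than the starting point.
same_start = all(starts[m] == starts["TEXTUAL"] for m in methods)

# A different seed gives a different starting point.
other_start = initial_noise(SEED + 1, size=8)

print(same_start)
print(other_start == starts["TEXTUAL"])
```

Rerunning the same comparison with several seeds is then a matter of looping over `SEED` values while keeping everything else fixed.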

What makes a blend feel coherent or off-kilter

The study goes beyond cute collage-work. It methodically asks what factors push a two-concept blend toward coherence and where it can stumble. One interesting insight is symmetry versus asymmetry. TEXTUAL is the only method that remains symmetric when you flip the order of the prompts; the other three methods tend to bias the image toward the first prompt. That means if you pair two unrelated ideas, switching the order can yield noticeably different hybrids, and sometimes the same pair becomes a very different creature simply by changing which idea leads the dance.
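The symmetry point can be checked on toy data. The sketch below assumes an equal-weight linear mix as a stand-in for TEXTUAL and a simple per-step plan as a stand-in for SWITCH; the helper names and the two-dimensional "embeddings" are hypothetical.

```python
from typing import List, Sequence


def midpoint_blend(emb_first: Sequence[float],
                   emb_second: Sequence[float]) -> List[float]:
    """Equal-weight mix of two embeddings, standing in for TEXTUAL."""
    return [0.5 * a + 0.5 * b for a, b in zip(emb_first, emb_second)]


def switch_plan(first: str, second: str,
                num_steps: int = 6, switch_step: int = 3) -> List[str]:
    """Per-step conditioning prompt for a SWITCH-style schedule."""
    return [first if t < switch_step else second for t in range(num_steps)]


# Toy two-dimensional "embeddings"; real ones are high-dimensional tensors.
lion, cat = [1.0, 0.0], [0.0, 1.0]

# Swapping the prompts leaves the equal-weight mix unchanged...
print(midpoint_blend(lion, cat) == midpoint_blend(cat, lion))    # True
# ...but changes which prompt shapes the early steps under SWITCH.
print(switch_plan("lion", "cat") == switch_plan("cat", "lion"))  # False
```

Under this linear-mix assumption, the symmetry holds exactly only at equal weighting; for any other weight, swapping the prompt order is equivalent to mirroring the weight.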

Another key variable is the blend ratio—the degree to which each concept contributes to the final image. TEXTUAL lets you dial a smooth, continuous mix via interpolation of embeddings. SWITCH, by design, creates a sharp transition at a specific step; ALTERNATE distributes influence more evenly by alternating prompts across steps; UNET achieves ratio control by choosing which layers attend to which prompt. Each method thus encodes a different intuition about how ideas should coexist: a balanced duet, a fast-paced swap, a multi-step tango, or a structural-and-surface split inside the network.
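One rough way to compare how the step-based methods divide influence is to count the share of denoising steps each prompt conditions. This is a hedged sketch, not a measure used in the paper: `step_share`, the step count of 20, and the switch point at step 8 are assumptions chosen for illustration.

```python
from collections import Counter
from typing import Dict, List


def step_share(schedule: List[str]) -> Dict[str, float]:
    """Fraction of denoising steps conditioned on each prompt label."""
    counts = Counter(schedule)
    total = len(schedule)
    return {label: n / total for label, n in counts.items()}


num_steps = 20

# SWITCH with a hypothetical switch point at step 8.
switch = ["first"] * 8 + ["second"] * (num_steps - 8)

# ALTERNATE with the first prompt on even steps (an assumption).
alternate = ["first" if t % 2 == 0 else "second" for t in range(num_steps)]

print(step_share(switch))     # {'first': 0.4, 'second': 0.6}
print(step_share(alternate))  # {'first': 0.5, 'second': 0.5}
```

Step share is only a crude proxy for visual influence: because early steps tend to set the overall layout, a prompt that holds fewer steps can still dominate the result if those steps come first.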

The seed, the number that initializes the random noise from which generation starts, emerges as a stealthy but powerful influence. The same prompts can produce a spectrum of outputs just by starting from different noise. Some methods yield stable, subtle blends across seeds, while others swing wildly, producing surprising, sometimes cartoonish results. The researchers argue that this seed sensitivity isn’t a bug but a feature: in the realm of creative synthesis, it’s a reminder that there are many possible blends for any two ideas, and the “best” one may depend on taste as much as on technique.

Highlights: There is no universal winner. The four methods excel in different contexts, with prompt order, conceptual distance, and seed selection subtly steering the outcome. The study’s nuanced results emphasize creative flexibility rather than mechanical perfection.

Beyond objects: style, emotion, and architecture as blends

One of the paper’s most engaging explorations is moving from tangible objects to intangible modifiers: artistic styles, emotions, architectural moods. The researchers test whether you can blend a concrete subject with the aura of a painting, the sensation of an emotion, or the silhouette of a famous landmark, all in a zero-shot way.

In the paintings domain, they pair a main scene—say, a portrait of a man—with the stylistic DNA of iconic works like Starry Night, Water Lilies, The Scream, Girl with a Pearl Earring, and Picasso’s Guernica. The results vary by method, but the upshot is clear: SWITCH and UNET tend to integrate the painting’s signature look into the subject without losing the underlying content, while TEXTUAL often drifts toward more literal or abstract stylizations. This resembles a style-transfer vibe but happens entirely through prompt-driven diffusion without extra fine-tuning.

Emotions as modifiers are equally revealing. When the second concept is an emotion word such as anger, happiness, or sadness, the blends evoke emotional cues in ways that can be surprisingly subtle or striking. SWITCH and UNET often push the subject’s facial cues and posture toward the chosen mood, while TEXTUAL can reframe the entire scene in a way that evokes emotion through context rather than facial expression alone.

Architectural landmarks present a tougher test, because they require plausible geometry and recognizable silhouette cues. The authors experiment with blends like the Empire State Building fused with the Taj Mahal or the Leaning Tower of Pisa married to Big Ben. The results vary, but in several cases UNET and ALTERNATE deliver blends where the fundamental geometry of one landmark remains legible while stylistic or decorative elements from the other creep in. The findings underline a practical point: some architectural ideas are easier to fuse than others, and the network’s ability to preserve core shapes while overlaying a second identity depends on where in the network the conditioning is applied.

Highlights: Abstract modifiers (styles, moods, architecture) open doors to rapid concept exploration beyond concrete objects. SWITCH, which swaps prompts mid-process, and UNET, which splits conditioning between the encoder/bottleneck and the decoder, often outperform plain prompt interpolation on these complex blends, suggesting useful pathways for designers and artists seeking hybrid aesthetics without extra training.

Why this matters for creativity, design, and the AI toolkit

So why should curious readers care about these blends? The most practical takeaway is that diffusion models already encode a surprising degree of compositionality. They can generate coherent hybrids by reusing their own learned representations, without retraining or hand-assembling prompts. This could accelerate ideation, rapid prototyping, and cross-domain concept exploration in fields ranging from graphic design to game development to product design.

There’s a human-centered angle too. The paper’s authors connect concept blending to a cognitive theory of blending ideas, rooted in how humans imagine and fuse concepts in novel ways. Their work doesn’t claim to replicate human creativity, but it does reveal that modern AI systems can participate in a form of visual synthesis that resembles the creative spark—an artificial partner that can propose hybrids we might not have imagined on our own. In practice, this could become a collaborative tool: a brainstorm buddy that offers unexpected hybrids, which a human designer can then refine, critique, and perfect.

Another implication lies in education and accessibility. If you can generate compelling visual blends without specialized training, you lower barriers to experimentation. A student sketching out a concept, a designer exploring branding directions, or a researcher prototyping exploratory visuals could all benefit from rapid, zero-shot blending as a first pass before committing to more expensive workflows.

But the work also offers important cautions. The results are sensitive to input nuances—seed, prompt ordering, and the specific blending method—so outcomes can be unpredictable. This fragility mirrors some aspects of human creativity, where small shifts in context can yield dramatically different ideas. It also raises questions about reliability, bias, and interpretability: if a model’s blends are shaped by training data quirks, what does that mean when we rely on them for design or decision-making?

The researchers are frank about limitations. The study focuses on short, single-word prompts and a particular diffusion backbone. Results might look different with newer models, longer prompts, or domain-specific tuning. They also acknowledge that a true cognitive theory of blending remains out of reach; the AI blends are impressive, but not fully equivalent to human imagination.

Still, the paper’s central message is hopeful: diffusion models already offer a vivid, practical playground for compositional creativity—one that can be explored without extra training or heavy engineering. The work also provides a blueprint for how to study these capabilities rigorously: clear methods, controlled experiments, and human-in-the-loop evaluation through a well-designed user study.

Highlights: The research not only demonstrates creative potential but also maps the terrain of when and why different blending strategies work, pointing toward future refinements in more capable diffusion systems and more nuanced evaluation methodologies.

What comes next for blending, imagination, and AI

The authors sketch a few concrete directions. They want to test these four blending methods in newer diffusion frameworks and higher-resolution pipelines, where fidelity and control may improve. They also envision adding symbolic constraints or partial blending controls so that only certain features of one concept transfer to the other—an idea that could blend the precision of symbolic reasoning with the expressive power of neural nets. Multi-concept blending, beyond two prompts, is another intriguing frontier—could a single image fuse three ideas in a coherent, aesthetically pleasing way?

Beyond technical refinements, the work invites broader conversations about how we understand and nurture creativity in machines. If AI can offer a credible palette of hybrids—stylized, emotional, architectural, or otherwise—how should designers steward that tool? The paper hints at a collaborative future where humans define the rough idea and the machine suggests viable, surprising hybrids, which humans then curate, critique, and push toward new horizons.

In short, the study from the University of Milano-Bicocca shows that concept blending in diffusion models is real, nuanced, and surprisingly rich. It’s not a silver bullet for creativity, but it is a robust, practical indicator that today’s generative AI can be a generous partner in the messy, delightful process of ideation.

Highlights: The path forward is a blend of better models, smarter prompting, and thoughtful human-AI collaboration, with a focus on understanding when a blend works, why it works, and how to steer it toward desired creative outcomes.

From a storytelling perspective, the work reads like a map of creative constraints: you can build a bridge between two concepts, but the bridge’s form—whether sleek and precise or bold and whimsical—depends on where you anchor the support beams inside the diffusion process. It’s a reminder that AI art isn’t just about outputs; it’s about processes—the subtle choreography inside a neural network that makes a strange, compelling new image emerge from two familiar ideas.

As diffusion models continue to evolve, researchers and practitioners alike will be watching closely to see which blending strategies endure, which new strategies arise, and how these synthetic experiments translate into human creativity in the wild. The researchers’ invitation to reproduce and extend their work, sharing code, prompts, and user-study materials, props the door open for a community effort to test, critique, and improve concept blending across domains.

Highlights: The study closes with a practical, community-minded note: embrace open tools, test across contexts, and keep exploring how machines and people can co-create in vivid, unexpected ways.