Counting the number of distinct elements in a dataset is a deceptively simple-sounding problem. In the wild, it’s a workhorse of modern data engineering: you want to know how many unique IP addresses touched a network, how many unique customers visited a site, or how many distinct words appear in a massive text corpus. The twist is that the data are not sitting in one tidy file but spread across many servers, often across continents. The big question, then, becomes simple in phrasing but thorny in detail: how much information do we need to exchange to estimate that count accurately, without shipping the whole dataset to a single place?

The study we’re looking at reframes this old question with a new compass. Instead of chasing a universal worst case, it parameterizes the problem by a more realistic statistic: how many pairwise collisions occur when you scatter the same elements across multiple servers. In plain terms, if the same item tends to show up on a lot of servers, you’ll pay more to keep the count honest. If most items are rare across servers, you can economize on communication. This shift from worst case to distribution-aware thinking is the paper’s core move, and it unlocks a surprising kind of efficiency for real-world data. The work is led by Ilias Diakonikolas of the University of Wisconsin–Madison, with collaborators from UC San Diego, UC Davis, Carnegie Mellon University, and Texas A&M University. The team’s authors include Daniel M. Kane, Jasper C.H. Lee, Thanasis Pittas, David P. Woodruff, and Samson Zhou, reflecting a broad, cross-institutional effort to rethink distributed statistics.

A new lens for a classic problem

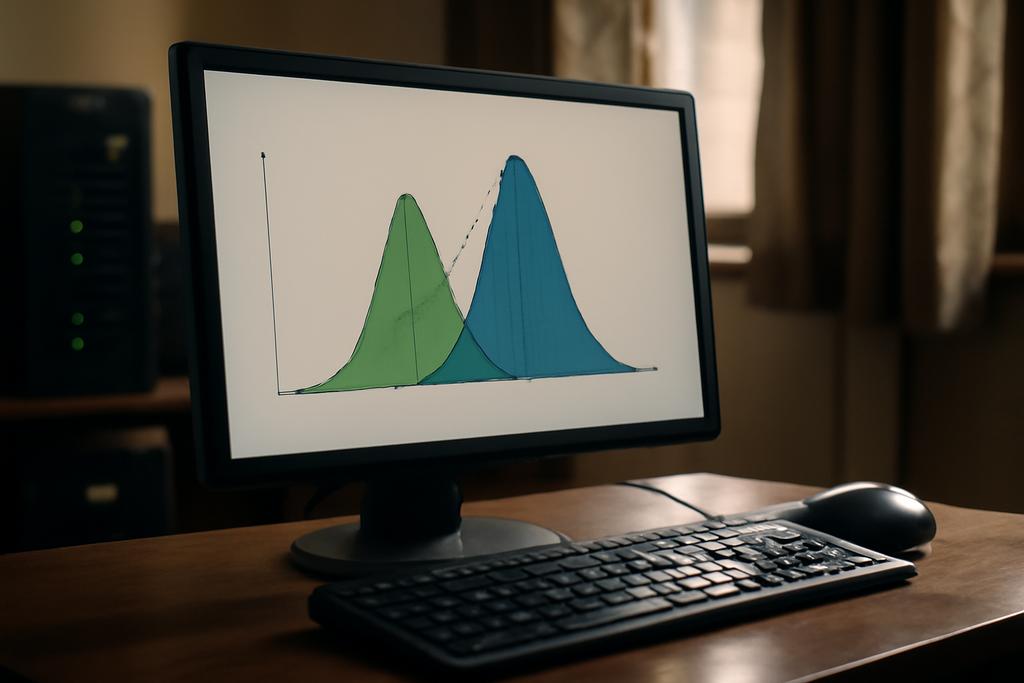

At the heart of the paper is a clean idea: describe the problem not just by the size of the universe or the target count but by C, the number of pairwise collisions. Imagine α servers each holding a fingerprint of the items they’ve seen. A collision happens when the same item lights up on two of those fingerprints. If C is small, the servers are largely talking about different things, and you might believe you can tally the distinct elements with far less cross-talk than the worst-case theory would predict. If C is large, you’re living in a world closer to the old adversarial guarantees, where the cost of coordination stays stubbornly high. This reframing changes the whole game: the same protocol can behave very differently depending on how many collisions actually occur in practice.

To connect the dots to real data, the authors note that many large datasets, from natural language to internet traffic, follow Zipf-like distributions. In such worlds, a tiny handful of items appear very frequently, while the vast majority barely register. That means the same top items show up on multiple servers far more often than the tail items, inflating C. Conversely, in some networks or text corpora, collisions can be rarer than the worst case would suggest. The paper makes this intuition precise by showing that if C is small enough, you can push the total communication well below the classical lower bounds that assume a more uniform, spread-out scenario. It’s a reminder that the math of what can be communicated cheaply often aligns with how data actually behaves in the wild.

As a bridge to practical intuition, consider a network monitoring system. If most IP addresses you’re tracking appear on only a few edges of the network, you don’t need to gossip about every address with every other server. You can safely budget a lean amount of communication in exchange for a tight estimate of the total distinct addresses seen across the whole system. That budget scales with the square root of the collision count, a dramatic departure from the linear or near-linear costs the worst-case theory would predict. The paper formalizes this intuition and shows how to turn it into a concrete protocol with provable guarantees.

The collision parameter and the new protocol

The authors present a general protocol that, given a dataset S spread across α servers and a universe of size n, achieves a (1 + ε)-approximation to the number of distinct elements F0(S). The communication cost is a sum of two parts: a baseline α log n for coordination, plus a second term that scales with the collisions, namely sqrt(β) times log n, where β captures the collision parameter via C ≈ β · F0(S). In plain language: when collisions are few, the second term is small and you pay much less than the classic bounds would suggest. When collisions march up, the second term grows, but the bound remains tight in a precise, quantifiable way. This yields a spectrum of performance that better matches how data actually behaves than a one-size-fits-all worst case.

The essential mechanism is a carefully designed subsampling workflow. The servers agree on a sampling schedule that progressively narrows the universe to smaller and smaller slices. A coordinator then aggregates the presence or absence of items across those slices, and the final estimate is obtained by scaling the observed counts by the inverse of the sampling probability. The analysis shows this estimator is unbiased and its variance can be kept small thanks to the collision-aware design. Crucially, the number of items each server needs to report shrinks when C is small, which is the key source of the improved communication guarantee.

Beyond the basic (1 + ε)-estimate, the paper also shows how to exploit more information when you have a bound on the number of collisions or on the number of distinct elements. In particular, if you know that C is not exceeding F0(S), you can tailor the protocol to achieve even tighter performance. The upshot is a clean, practical message: know the talking points you’re likely to share, and you can talk less overall while still getting a faithful picture of the whole distributed dataset.

Lower bounds that chase the ceiling

No discussion of a new upper bound is complete without addressing whether the bound is tight. The authors pair their protocol with matching lower bounds across regimes of the collision parameter. To prove these, they navigate a classic land of information theory and communication complexity. They construct a problem called GapSet that blends outer and inner challenges: an outer GapAnd-style task controls the aggregate structure, while an inner multiplayer set disjointness task grounds the hardness in the nuts and bolts of coordinating many players with overlapping data. The technical machinery is dense, but the message lands clearly: when you measure complexity by C, the hardness of approximate F0 estimation scales in a way that matches the new upper bounds, up to logarithmic factors.

One important byproduct is a fresh lens on a related task called distributed duplication detection. Here the question is how many coordinates appear on at least two servers. The authors show that the same GapSet-compositional approach yields near-optimal lower bounds for this problem as well, tying the knots between distinct-element counting and the more familiar murkiness of duplicates in distributed settings. The upshot is a parity between what we can do and what we must pay in the worst case, but with the crucial caveat that this parity is governed by C. In the real world, where Zipf-like distributions reign, C is often small enough to let practical schemes escape the worst-case gloom.

To translate the theory into a sense of scale, the authors don’t stop at abstract bounds. They connect their work to streaming models as well, where a single pass or a couple of passes over a data stream must produce a good estimate with limited memory. Parameterizing by the number of items that appear more than once, they derive two-pass and even one-pass strategies whose space usage scales with C rather than the total universe size. It’s a vivid reminder that the same core ideas—sampling, hash-based sketches, and robust estimation—reappear across distributed and streaming worlds, each time benefiting from the same collision-aware intuition.

Streaming, experiments, and why this matters

The paper doesn’t rest on theory alone. It extends the collision-aware viewpoint to streaming algorithms and backs up the claims with experiments on real data. The CAIDA traffic traces, a standard benchmark in network measurement, reveal a surprisingly skewed distribution in practice. In those data, a small handful of items dominate while the rest fade into the background, producing a pattern of collisions that makes the collision-aware protocol outperform worst-case lower bounds by orders of magnitude. The experiments aren’t flashy proofs of superiority; they are a measured demonstration that the theory can translate into tangible communication savings in real systems.

On the practical front, the authors show how the algorithm’s communication footprint scales gracefully with the observed level of collision. In setups where you can tolerate modest accuracy, the method can deliver faithful estimates with far less data movement than traditional sketches would require. And because the framework admits distributional knowledge—even rough upper bounds on C—it can be tuned on the fly as a data center observes changing traffic patterns or shifting language distributions in a stream of text.

Beyond the specifics of distinct-element counting, the paper makes a broader implicit claim that feels timely in a data-rich era: many statistical problems with formal hardness in the worst case can become tractable in practice when you bring realistic structure into the model. Zipfian tails, skewed workloads, and partial overlaps aren’t just quirks of data; they’re levers you can pull to design more efficient algorithms. The work invites researchers and engineers to ask, not just what the worst-case guarantees allow, but what the actual data-generating processes look like in their environment and how those shapes can reshape the cost of computation.

As a closing thought, this is a study that sits at the intersection of theory and practice. It shows how a careful reframing—tracking the ecology of collisions across servers—can turn an ostensibly intractable problem into something workable in the real world. The collaboration spans several top institutions, built on the leadership of Ilias Diakonikolas and a cadre of colleagues from UW–Madison, UC San Diego, UC Davis, CMU, and Texas A&M. The message is hopeful: by listening more closely to how data actually collide across systems, we can design protocols that communicate less while still telling the truth about the world in aggregate.