If you’ve spent time with modern language models, you’ve felt the thrill of their uncanny ability to stitch meaning from streams of words. They seem to grasp context as if a room full of conversations were quietly coalescing into sense. Yet beneath that magic sits an idea that ties together two communities in AI: the world of graphs, where entities are connected by edges, and the world of sequences, where order governs meaning. A new perspective from the University of Cambridge reframes Transformers as a kind of Graph Neural Network operating on a fully connected web of tokens rather than on a fixed, pre-drawn map of the sentence. The author, Chaitanya K. Joshi, argues that the attention mechanism is really a way of exchanging messages between every pair of tokens, letting the model learn complex relationships without needing a pre-built graph.

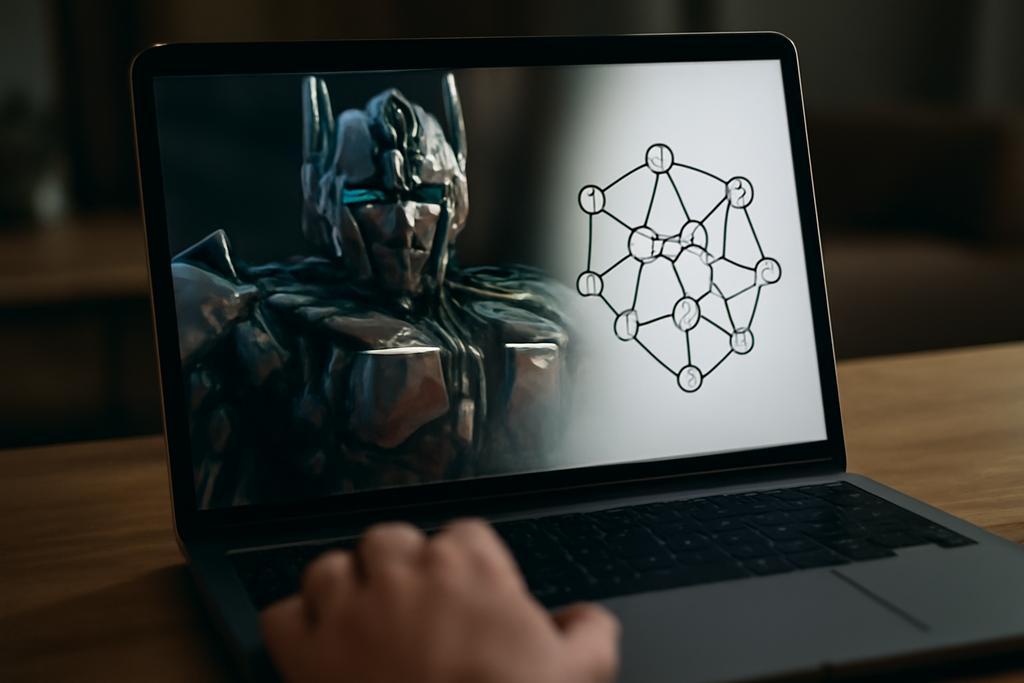

In other words, Transformers are good at language not merely because they memorize, but because they learn to reason about relationships in data. If you imagine each input element as a person at a party, attention is how much weight each person gives to what every other person says, and each person’s embedding is updated by the conversations they tune into. What Joshi emphasizes is that this social network can be viewed as a graph: every token is a node, every attention weight is a weighted edge between two nodes, and the whole thing can be stacked into deep layers that propagate information across the entire set. The punchline is surprisingly simple: when you treat the Transformer as a Graph Neural Network, you gain a different lens on what the network is doing, and you reveal a bridge between architectures that long stood apart.
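
To make the party picture concrete, here is a minimal NumPy sketch of a single attention head written as message passing on a fully connected graph. It is an illustration under simplifying assumptions, not Joshi's code: the function name `attention_as_message_passing`, the random weights, and the toy sizes are all hypothetical, and it leaves out multi-head attention, residual connections, normalization, and the feed-forward layer that a real Transformer block includes.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_as_message_passing(H, Wq, Wk, Wv):
    """One attention head viewed as a graph update (hypothetical helper).

    H holds one row of features per token (node). The returned matrix A has
    A[i, j] = weight of the edge carrying a message from node j to node i.
    """
    Q, K, V = H @ Wq, H @ Wk, H @ Wv
    d = Q.shape[-1]
    # Every pair of tokens gets a score, so the graph is fully connected.
    A = softmax(Q @ K.T / np.sqrt(d), axis=-1)

    # Explicit message passing: node i sums the messages V[j] from every
    # node j, each scaled by the edge weight A[i, j].
    n = H.shape[0]
    out = np.zeros_like(V)
    for i in range(n):
        for j in range(n):
            out[i] += A[i, j] * V[j]
    return A, out

# Toy input: 4 tokens with 8 features each (sizes chosen only for illustration).
rng = np.random.default_rng(0)
n_tokens, d_model = 4, 8
H = rng.normal(size=(n_tokens, d_model))
Wq, Wk, Wv = (rng.normal(size=(d_model, d_model)) / np.sqrt(d_model) for _ in range(3))

A, out = attention_as_message_passing(H, Wq, Wk, Wv)
```

The double loop is deliberately slow so that the graph reading stays visible; it computes the same result as the usual matrix form `A @ V`. Each row of `A` sums to one, so every node's update is a weighted average of the messages it receives from all nodes, itself included.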