Mathematicians often picture a dynamical system as a tiny machine that pushes every point along a path through space. The Point Escape Problem asks a deceptively simple question: if you start inside a closed region A and keep applying a rule f that reshapes the landscape, does your path ever leave A? The trap in this question is that small changes to the rule or to A can flip the answer entirely. In other words, some instances look stable on the surface, but a whisper of perturbation can abruptly change the verdict.

Enter a new study from Swansea University led by Eike Neumann. The work reframes the problem not as a rigid yes-or-no decision for a single exact map, but as a search for robustness. If an instance’s answer stays constant under small tweaks to the function, the authors say the problem is robust—and that robust version can be decided. The paper builds a concrete, constructive algorithm that halts precisely on those robust cases, and it does so in a way that is, in a meaningful sense, optimal among partial algorithms.

But the punchline isn’t merely about an abstract theorem. The authors show that their method works for concrete families that matter in practice: linear systems and the famous Mandelbrot landscape in complex dynamics. The work is a careful meditation on computability when your inputs are not exact, and it translates into a larger claim about what we can and cannot reliably compute when the world is imperfect.

What the Point Escape Problem Is

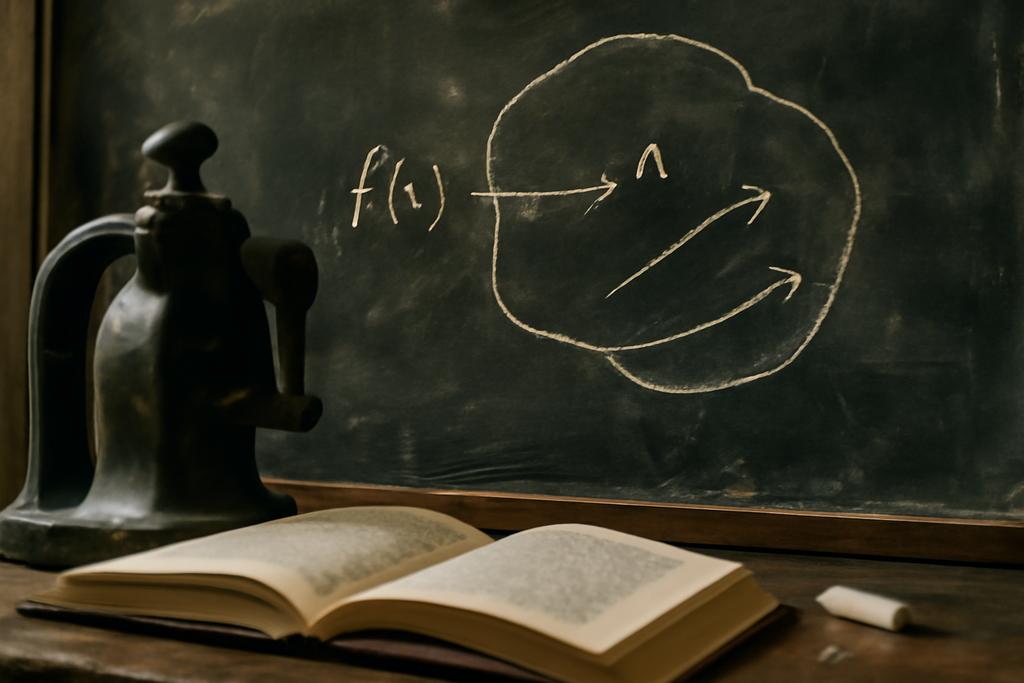

At its core, the Point Escape Problem asks: given a continuous map f: R^d → R^d, a closed region A in space, and a starting point x0 inside A, does there exist a finite number of steps n after which the n-th iterate of x0 leaves A? The problem is deceptively simple, but the mathematics of nonlinear dynamics on infinite spaces makes it slippery. In the standard model of real-number computation, many questions about nonlinear dynamics are undecidable if you demand a single algorithm to work on every possible input. Neumann’s contribution isn’t to solve the entire universe of cases; it’s to carve out a robust slice where a single method can settle the question reliably.

To make sense of this, the paper adopts computable analysis as its guiding framework. Objects are not given as exact numbers but as infinite sequences of ever-tighter approximations. Imagine a real number x encoded as a nested ladder of rational intervals that converge on x; a function f is described by a procedure that, given a finite sketch, can produce a closer sketch of f’s action. In this setting, a decision method is called complete if it halts on every input for which the answer remains stable under small perturbations. That notion—robustness—turns a philosophical worry about precision into a solid computational target.

Neumann, a researcher at Swansea University, shows that a surprisingly simple algorithm can achieve this robustness-driven completeness. The method tracks two things: an overapproximation O of an initial segment of the orbit {x0, f(x0), …, f^N(x0)} and a family of overapproximations Qi for each point in the orbit. If any Qi separates from A, the algorithm declares escape. If the overapproximation O sits inside the interior of A and the image of O can be similarly overapproximated to map inside O, the algorithm declares the point trapped. If neither progress is available, it computes more precise approximations and keeps going.

The Algorithm That Hears the Quiet Boundaries

How can a finite procedure settle questions about an infinite process? The answer rests on a clever idea: the search for a robust invariant. A robust invariant is a compact set V with the property that f maps V into its own interior. If x0 is inside such a V, and V ⊆ A°, then the orbit cannot escape the interior, and the point is trapped in a way that remains stable under small changes to f. The algorithm uses this invariant as a certificate: if it can locate such a V containing f(x0), it halts with the trapped verdict.

Conversely, if no robust invariant sits around f(x0) within A, then tiny perturbations of f can push the orbit outside A. The algorithm then follows a complementary path: it tries to show that there exists a perturbation of f for which the orbit leaves A in finite time, and if that happens, it halts declaring escape. If neither a robust invariant nor a perturbation-based escape can be certified, the algorithm stays in a waiting state. The authors prove that this approach is complete: it halts on every problem instance whose answer remains fixed under sufficiently small perturbations of the function. Moreover, the halting set—the set of inputs on which it terminates—is dense in the space of all problem instances. Robust instances are not rare; they populate the landscape densely enough that the method is meaningful for real-world questions.

To connect theory with practice, the paper also shows how the method extends to very concrete systems. For linear dynamics, the authors give a reduction to the Point Escape Problem that preserves robustness. A key technical move is a compactification: map unbounded Euclidean space into a ball, so that the question about escaping a polyhedron under an affine map becomes a question about remaining inside a compact region after a coordinate change. Once in this compact setting, the same robust-invariant logic applies, and the method can certify trapping in a principled way.

From Linear Systems to the Mandelbrot Sea

The method doesn’t stop at linear systems. It also tackles the quadratic family, the well-known gateway to the Mandelbrot set. The Mandelbrot set M is the set of complex parameters c for which the orbit of 0 under the map fc(z) = z^2 + c remains bounded. It’s a breathtaking object in pure mathematics and a fan favorite in popular culture, in part because it is both deeply mysterious and visually arresting. The paper shows that the decision problem for M can be connected to the Point Escape Problem in a precise way: c maps to a two-dimensional escape problem involving the real and imaginary parts of fc applied to z = x + iy.

The punchline about M hinges on a famous conjecture in complex dynamics—the hyperbolicity conjecture. If every interior point of M is hyperbolic (that is, it has an attracting cycle), then robust instances of the Mandelbrot-related problem exist, and the authors’ algorithm can yield a complete decision method for M. In that scenario, interior points of M induce robust invariants that keep orbits safely inside certain regions, letting the method certify where the dynamics stays tame. If hyperbolicity fails, the reduction maps out precisely where the algorithm loses its grip, offering a structural lens on why M has resisted a full, unconditional algorithmic grasp for so long.

So, the bridge from a general, abstract escape problem to the intricacies of a single complex-analytic family is not just technical flourish. It reframes a major question in computability—the decidability of a cornerstone fractal set—in terms of a robust, constructive procedure. Hertling’s conditional results on the Mandelbrot set, which depended on hyperbolicity, take on a new hue when viewed through Neumann’s robustness lens: the question becomes not only whether one can compute M in principle, but whether the computation can be made robust enough to halt on all robust instances, and how that robustness is tethered to deep conjectures about dynamical structure.

All of this comes with a practical spotlight: the Sturm-like drama of deciding whether a complicated system will eventually escape or remain contained is not hopeless. By focusing on robustness—where the answer doesn’t flip with small nudges—Neumann’s method lights a path for automated reasoning about systems that are known only approximately. And it does so with a careful balance between what is provably decidable and what hinges on the behavior of dynamical systems at infinity, where the usual intuition about bounds and limits can fail.

The paper’s institutional heartbeat is Swansea University, led by Eike Neumann. The work is a disciplined expedition through computable analysis, a field that treats real numbers and functions as objects that must be computed via infinite, gradually refined approximations. The result is not a universal algorithm that solves every possible problem of this kind. It is, instead, a maximally robust decision method: it halts on all inputs for which the answer remains stable under small perturbations of the function, and it does so in a way that is dense in the space of all problem instances. In practical terms, that means a lot of the time, when you’re modeling a real-world system and your data are imperfect, you can still get a decisive answer if the instance is robust enough to endure a little dust in the gears.

The implications extend beyond pure theory. In an era where automated reasoning increasingly interfaces with physical systems, robust decidability offers a pragmatic compass. It suggests a blueprint for building verifiers that are honest about uncertainty, yet still capable of giving strong guarantees whenever the situation isn’t perched on a fragile boundary. The work also invites a broader conversation about which problems we should treat as robustly decidable and how representations of real-valued objects shape what we can prove and compute in practice.

Finally, the study invites future exploration. The authors sketch a broad program: test the method on more structured nonlinear systems that behave nicely at infinity, probe higher-dimensional variants of the Mandelbrot-type questions, and refine our understanding of how different representations of functions and sets influence robustness. If you’re hoping for a final, universal algorithm for dynamical escapes, this work doesn’t claim it. If you’re hoping for a principled way to think about computability under uncertainty, it offers a remarkably clear and generous guidepost.