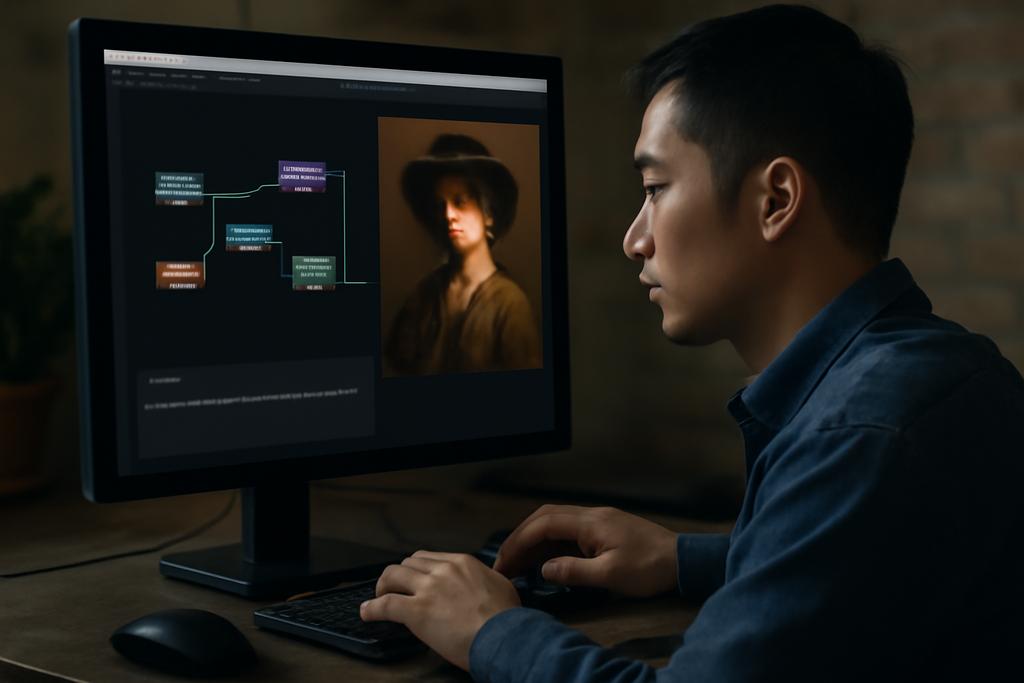

The world has fallen in love with AI art, but loving it often comes with a dare: you have to learn a strange dialect of prompts, pipelines, and knobs that feel more like coding than painting. GenFlow aims to flip that script. Born from researchers at the University of Science, Vietnam National University in Ho Chi Minh City, GenFlow is described as an interactive modular system that promises to let anyone generate images with precision and ease. The lead researchers, Duc-Hung Nguyen and Huu-Phuc Huynh, along with Minh-Triet Tran and Trung-Nghia Le, present a toolkit that blends two seemingly opposite ideas: a visual, node-based editor and a smart, natural-language assistant. The result is not a single app or a gimmick. It’s a design philosophy shift—making sophisticated AI art tools feel almost like playing with a set of Lego bricks, rather than assembling a rocket engine.

Think of GenFlow as a creative workspace where art and engineering meet in the same room. On one side, you have a canvas where you drag and connect nodes, each performing a task such as text-to-image generation, upscaling, style transfer, or complex filtering. On the other, Flow Pilot listens to your words and suggests workflows, then searches the web for ready-to-run pipelines and the models they need. It’s not just about making pretty pictures; it’s about turning the act of assembling a generative art pipeline into something more like gardening: tactile, exploratory, and forgiving of beginners while still rich enough for experts to tinker at a granular level. The paper argues that by removing technical barriers, GenFlow makes cutting-edge generative art tools accessible to a much broader audience. That is more than a nice feature; it could redefine who participates in creative AI tinkering.

The researchers are explicit about their goal: lower the barrier to entry without sacrificing depth. In their own words, GenFlow unites two core ideas. First, an Editor, a node-based interface that makes workflow construction intuitive and visual. Second, Flow Pilot, a natural-language powered assistant that helps users describe tasks and discover workflows in a semantic, retrieval-augmented way. Add automated model retrieval, a local database for fast access, and a web-exploration engine that fetches relevant assets from the broader AI-art ecosystem, and you begin to see a vision of a more democratic toolset. The study doesn’t pretend that the entire creative process will be handed to a machine; rather, it offers a friendly, guided way to marshal a rapidly evolving set of tools so that people can focus on ideas rather than infrastructure.

Section 1

GenFlow is framed around two interlocking pieces. The first is Canvas, the node-based editor: a drag-and-drop playground where users place nodes that perform discrete tasks. Think of a text prompt feeding a diffusion model, followed by steps that upscale, stylize, or composite images in a controlled way. The second is Flow Pilot, an intelligent assistant that translates plain-language prompts into actionable workflows. The combination is powerful because it reimagines the workflow as something you configure visually, then refine with natural-language guidance. In practical terms, a user could describe a task like “generate a portrait with a moody atmosphere using this reference sketch,” and Flow Pilot would surface compatible workflows, rank them by relevance and popularity, and propose a pipeline that can be deployed directly onto the canvas. This is a bridging technology: it connects the user’s intent to the best available configurations without forcing them to become a software engineer overnight.
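To make the node-and-wire idea concrete, here is a minimal sketch of how a visual workflow can be represented and run as a dependency graph. It is not GenFlow's implementation: the node names (`prompt`, `generate`, `upscale`), the placeholder functions, and `run_workflow` are all hypothetical, and the "images" are just strings standing in for real model outputs.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical node functions. Each receives a dict of upstream outputs
# and returns its own output. Strings stand in for real images.
def text_prompt(inputs):
    return "a moody portrait"

def generate(inputs):
    return f"image({inputs['prompt']})"

def upscale(inputs):
    return f"upscaled({inputs['generate']})"

# A workflow maps each node name to (function, upstream node names),
# which is roughly what wiring boxes together on a canvas describes.
workflow = {
    "prompt": (text_prompt, []),
    "generate": (generate, ["prompt"]),
    "upscale": (upscale, ["generate"]),
}

def run_workflow(workflow):
    """Run every node after the nodes it depends on."""
    graph = {name: set(deps) for name, (_, deps) in workflow.items()}
    results = {}
    for name in TopologicalSorter(graph).static_order():
        func, deps = workflow[name]
        results[name] = func({dep: results[dep] for dep in deps})
    return results

results = run_workflow(workflow)
print(results["upscale"])  # upscaled(image(a moody portrait))
```

Swapping a node on the canvas corresponds to replacing one entry in the dictionary; the rest of the graph is untouched, which is a large part of why node editors feel forgiving to experiment with.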

Beyond the interface, GenFlow builds workflow discovery on Retrieval-Augmented Generation, or RAG. The system fetches existing workflows from a curated library, semantically matches user descriptions to those workflows, and uses a language model to validate and present them. The result is not just a catalog; it’s an adaptive, guided discovery process. The paper describes a two-stage process: offline indexing of crawled workflows into a vector database for fast search, and online querying that triggers a semantic search, refinement, and, if needed, a targeted web exploration by a team of “agents.” This isn’t just clever software design; it’s a practical blueprint for how to keep a living, growing toolkit usable as the landscape of generative art expands week by week, model by model.
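The two-stage process can be illustrated with a toy example. The sketch below is an assumption-laden stand-in, not the paper's pipeline: the library entries and popularity counts are invented, `embed` is a bag-of-words counter rather than a real embedding model, a plain list plays the role of the vector database, and the `min_score` threshold is arbitrary. An empty result is where a real system would hand off to web exploration.

```python
import math
from collections import Counter

# Hypothetical workflow library; names, descriptions, and popularity are made up.
LIBRARY = [
    {"name": "portrait_from_sketch",
     "description": "generate portrait image from reference sketch",
     "popularity": 120},
    {"name": "super_resolution",
     "description": "upscale image with super resolution",
     "popularity": 300},
    {"name": "text_to_image",
     "description": "generate image from text prompt",
     "popularity": 500},
]

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def build_index(library):
    """Offline stage: embed every workflow description once."""
    return [(entry, embed(entry["description"])) for entry in library]

def search(index, query, top_k=2, min_score=0.2):
    """Online stage: score the query against the index, drop weak matches,
    then rank by similarity with popularity as the tie-breaker."""
    q = embed(query)
    scored = [(cosine(q, vec), entry) for entry, vec in index]
    hits = [(score, entry) for score, entry in scored if score >= min_score]
    hits.sort(key=lambda pair: (pair[0], pair[1]["popularity"]), reverse=True)
    return [entry["name"] for _, entry in hits[:top_k]]

index = build_index(LIBRARY)
matches = search(index, "generate a portrait from a sketch")
print(matches or "no local match: fall back to web exploration")
# ['portrait_from_sketch', 'text_to_image']
```

The indexing cost is paid once, offline, so each query only needs a similarity comparison against stored vectors. That separation is what keeps search fast even as the library of workflows grows.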

Section 2

The authors don’t pretend GenFlow is a universal antidote to all the pain points in AI art. But they do present a compelling case for why it could matter right now. One striking thing is the claimed uplift in efficiency. Without GenFlow, a typical creator might spend roughly twelve to thirteen minutes hunting for techniques, models, and compatible workflows across multiple platforms. With Flow Pilot and the local workflow database, that clock can drop to around three minutes and change, a reduction of more than a factor of three, and with less friction to boot. The study also points to a gain in accessibility. In the user study, 13 STEM students with varying levels of experience, including some AI/vision experts, performed five tasks that spanned image super-resolution, text-to-image generation, and more advanced workflows like generating an image based on a facial reference or an outline. Prompts were kept intentionally short, often under 25 words, and the participants could adapt their prompting strategies to the task complexity. The result was not a parade of perfect images, but a clear narrative: novices could produce meaningful outputs quickly, while experts could tweak pipelines with confidence and speed.

The study also highlights how GenFlow’s design supports experimentation. The drag-and-drop canvas makes it easy to visualize a workflow as a flow of operations, and the Flow Pilot reduces the cognitive load of choosing among dozens of possible configurations. Participants repeatedly noted that the interface made iteration faster. They could see the entire workflow, adjust parameters, and compare results without the mental overhead of jumping between disparate tools. In other words, GenFlow doesn’t just streamline generation; it lowers the barrier to exploring more ambitious or experimental ideas. If you’re someone who thinks in timelines, rather than equations, the system’s ability to lay out a process visually is itself a kind of cognitive expansion.

The paper does not shy away from acknowledging room for improvement. Some participants wanted more precise workflow suggestions, especially for multimodal queries that combine sketches, references, or mixed media. Others suggested a stronger emphasis on collaboration—shared workspaces where communities could propose, remix, and refine pipelines. And there’s a practical note about the learning curve: while many users can handle a drag-and-drop interface, others may want more adaptive layouts or personalized recommendations. These insights aren’t complaints; they’re a map for where GenFlow could grow next—toward more context-aware, collaborative, and adaptable experiences that shine as the technology behind AI art keeps evolving.

Section 3

The broader significance of GenFlow isn’t just about making a better tool; it’s about rethinking who gets to participate in the AI-art frontier. The authors point to democratization as a central premise: reduce the friction, broaden participation, and empower people to bring their own ideas to life without being handcuffed by technical slog. That’s not just a nice talking point; it’s a tangible social implication. If a student in a small university lab, a designer exploring new visual vocabularies, or a hobbyist with a vivid concept can piece together a workflow from a library of community-generated pipelines, the entire field stands to gain from more voices, more experiments, and more cross-pollination between disciplines. The study’s inclusion of participants with a range of backgrounds—from AI experts to novices—offers a microcosm of what a broader ecosystem could feel like in practice: a collaborative toolkit that grows wiser as more people contribute, remix, and repurpose what already exists.

But there are caveats that deserve equal attention. The paper’s web-exploration component, while powerful, also raises questions about provenance and safety. If the system automatically fetches models and workflows from the internet, how do we verify licenses, safety filters, and ethical guardrails? How do we prevent the propagation of harmful or copyrighted content, or the misuse of face references in portrait generation? GenFlow’s authors acknowledge the potential value of a collaborative repository and multimodal inputs, hinting at a future where communities curate and improve workflows together. That’s exciting, but it will require thoughtful governance, transparent licensing, and clear signals about the origins and rights associated with each component in a pipeline. The authors’ own acknowledgement of future work—improving language understanding for better prompts, supporting sketches and images as inputs, and cultivating a community-driven repository—reads like an invitation to a broader conversation about responsible creativity in the AI era.

On a practical note, GenFlow’s design is a reminder that the best product ideas often lie at the intersection of disciplines. Node-based visual programming has a long history in 3D modeling, game development, and audio processing. Combining it with retrieval-augmented generation and web-enabled asset discovery borrows strength from multiple domains and turns them into something usable for artists who don’t want to become technicians. It’s a language the next generation of creators can speak, one that blends seeing, tinkering, and talking. The implications extend beyond art: imagine education where students assemble AI-assisted projects by wiring together modules, or journalism where researchers assemble pipelines that fetch, verify, and present sourced information in real time. GenFlow points toward a future where the interface itself becomes a collaborative instrument, not just a tool, shaping how we imagine and produce visual knowledge.

The University of Science, Vietnam National University in Ho Chi Minh City, and its researchers are clear about one thing: GenFlow isn’t the final answer, but a bold step toward making advanced generative art more accessible and controllable. If the project continues to iterate on language-guided discovery, multimodal inputs, and community-driven repositories, we may look back and recognize a turning point: a moment when complexity stopped being a gatekeeper and began to feel like a shared surface for human creativity and machine intelligence to co-create. For readers and creators who have watched AI art from the bleachers, GenFlow offers a spark: a practical, humane way to move from fascination to collaboration, from awe to artwork that people actually build together.

The study’s conclusion carries a quiet optimism. GenFlow represents more than just a better interface; it embodies a philosophy of inclusive experimentation. As the authors put it, democratizing AI art means lowering barriers without flattening nuance. It means giving people the tools to express themselves with a level of technical polish that once required a dedicated studio. If GenFlow’s current arc holds, the future of AI-powered image making could look less like a solitary lab experiment and more like a vibrant workshop where anyone can sketch a concept, assemble the right workflow, and watch it come to life—together.