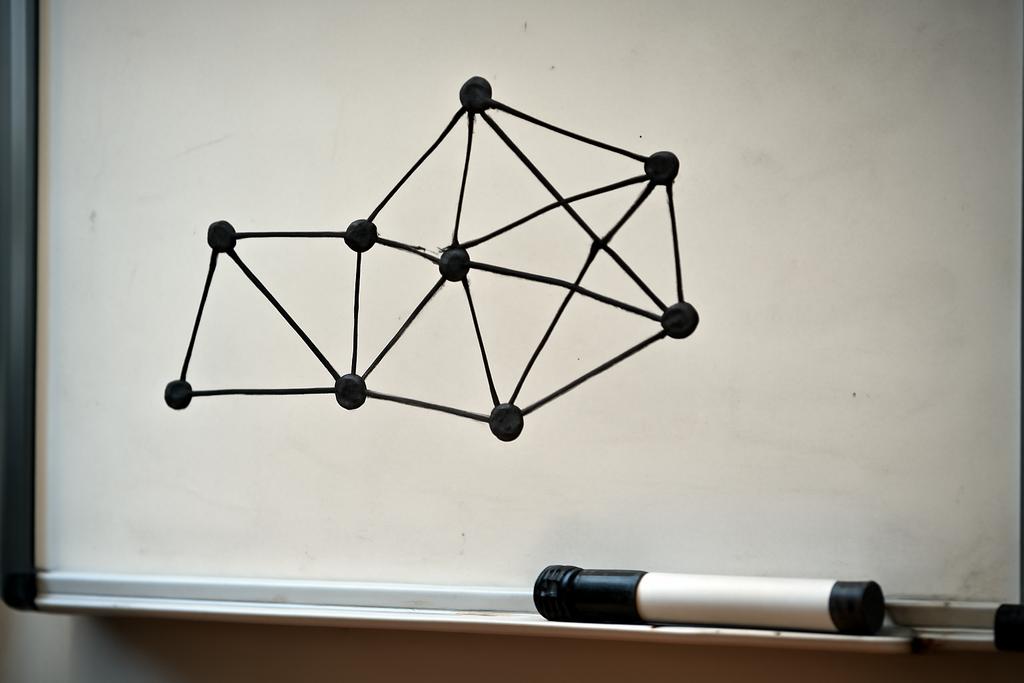

On a whiteboard, a graph can look like a constellation: points (vertices) linked by threads (edges) that braid into patterns we recognize as networks—social circles, transportation grids, or the tangled web of dependencies in software. A clique is a little club where everyone knows everyone else, a dense hub in the middle of a sparse world. Two classical questions in graph theory ask how to cover all the connections with as few of these clique clubs as possible, or how to slice the edges into disjoint clique clubs so every edge belongs to exactly one club. Those questions are the Edge Clique Cover (ECC) and Edge Clique Partition (ECP) problems. In practical terms, they are about grouping relationships into fully connected subgraphs so that the whole network is explained by a handful of tight-knit substructures.

Now a new study from the University of Bergen—led by Fedor V. Fomin with colleagues Petr A. Golovach, Danil Sagunov, and Kirill Simonov—takes the conversation in an unexpected direction. Rather than measuring the minimal number of cliques in the usual way, they ask a subtler question: how far above a natural floor given by the graph’s largest independent set α(G) must we go to cover or partition all edges? In other words, they look at Edge Clique Cover Above Independent Set (ECC/α) and Edge Clique Partition Above Independent Set (ECP/α). The parameter k measures how many extra cliques beyond α(G) we allow. This reframing isn’t just a clever math trick; it mirrors real-world networks where the best possible structure is often surprisingly close to a simple lower bound, and where the “extra work” to reach a full description can be strikingly meaningful.

The lead result is a tale of two problems that look similar from a distance but behave very differently once you measure them against α(G). The team proves a clean dichotomy: ECP/α is fixed-parameter tractable (FPT) with respect to k; ECC/α, however, is NP-complete for every fixed k ≥ 2, though it becomes easy for k in {0, 1}. In other words, allowing a tiny slack beyond the independence floor makes ECP/α tractable in practice, but ECC/α stubbornly remains hard in general—even when the graph class is pretty nice. The Bergen group doesn’t stop there. They show ECC/α becomes FPT when you count not just k but also a second graph parameter—the clique number ω(G)—and they push even further for sparse graphs and minor-free families, delivering subexponential-time algorithms in key cases. It’s a tour of how a small shift in what we optimize against can flip the computational landscape in surprising ways.

To appreciate what this means, it helps to ground the ideas in intuition. ECC asks for a small family of cliques whose union covers every edge in G. ECC/α asks for such a family whose size is at most α(G) plus a little extra k. Why α(G)? Because no edge can join two vertices that sit inside the same independent set, so you need at least as many cliques as the largest independent set has vertices—one clique per possibly independent vertex, in the worst case. ECP/α adds the twist that edges must be partitioned into disjoint cliques, not merely covered. The subtle difference matters profoundly for complexity: letting edges belong to multiple cliques (ECC) vs forcing a clean partition (ECP) drives the two problems onto different computational terrains when you measure them relative to α(G).

The authors’ joint contribution has a practical flavor. They lean into the observation that many real networks are sparse enough that α(G) is large relative to the graph’s size, and in such regimes the above-α lens lines up with how much room you really need to describe the network with cliques. The work builds a bridge between classical intractability results and contemporary parameterized complexity, showing how subtle changes in how you measure the problem can either unleash powerful algorithms or leave you with stubborn hardness. The study’s implications ripple through fields that rely on clique-like decompositions—bioinformatics, social networks, networks design, and data clustering—offering a principled way to gauge when exact algorithms are feasible and when you should pivot to approximation or heuristics.

The paper acknowledges the broader arc of ECC and ECP as long-studied problems with a practical side: they appear in computational geometry, statistics, networks, compilers, and bioinformatics. Yet the new above-α parameterization reframes a familiar landscape. The University of Bergen team’s results carve out a clear hierarchy: ECP/α is tame in the fixed-parameter sense; ECC/α is wild in general but becomes tame under specific, meaningful extra levers (like ω or degeneracy) or in particular graph families (minor-free graphs). The upshot is not a single algorithm to solve every case, but a map of when to expect fast, feasible exact solutions and when the problem will resist them, even for graphs that look modest at first glance.

What ECC and ECP really do in graphs

ECC and ECP distill to a single, striking idea: can we explain all the connections in a network by a handful of fully connected subgroups? An edge is covered by a clique when both endpoints sit inside that clique. In a partition, each edge belongs to exactly one clique. These are simple definitions on the surface, but they encode a powerful form of structure: a small set of dense clusters that, collectively, account for every connection in the network. In sprawling graphs with thousands or millions of edges, finding such a compact summary feels like compressing a complicated map into a few well-lit landmarks.

Yet in practice this compression runs headlong into computational complexity. The classical results show ECC and ECP are fixed-parameter tractable (FPT) when you parameterize by the number of cliques you allow. That’s great when you have a small target number of clusters. The catch is that for sparse graphs—think social networks with long, lacy connections or geometric networks—the natural, meaningful parameter is not the number of cliques but how close you can stay to a natural lower bound given by α(G).

α(G), the size of a largest independent set, is a straightforward measure of a graph’s sparsity in a global sense. It’s the number of vertices you could pick so that no two are adjacent; in a sense, it’s the number of “lonely” vertices you could stack without forming any edge. Because an edge clique cover or partition must “touch” every edge, you can’t hope to do better than α(G). So ECC/α and ECP/α fix a baseline: you need at least α(G) cliques, and k is how far above that floor you are willing to climb. That reframing captures a lot of practical reality: you might be able to cover a large part of the network with the natural, maximal independent set, but the remaining structure forces you to add a handful more cliques to make the picture complete.

The big split: ECP/α is tractable, ECC/α is not (in general)

Here is the paper’s striking dichotomy. Edge Clique Partition Above Independent Set (ECP/α) can be solved in time 2^{O(k^{3/2} log k)} times a polynomial factor in the graph size. In other words, if you’re allowed a small slack k above α(G), there’s a clean, fixed-parameter algorithm whose running time grows sub-exponentially with k. The method is robust enough that it works without the input giving you α(G) directly; the algorithm can determine α(G) as a subroutine. This makes ECP/α a genuine “feasible in practice” target for sparse-ish graphs where k stays small and the network isn’t drowning in density.

In contrast, Edge Clique Cover Above Independent Set (ECC/α) behaves very differently. For every fixed k ≥ 2, ECC/α is NP-complete. That means there is no known algorithm that solves all instances quickly as the size of the graph grows, unless P=NP. The authors push a crisp boundary: the problem becomes easy again for the tiny edge-case k ∈ {0, 1}. In other words, a hair’s breadth of slack over α(G) is enough to push ECC/α from tractable territory into intractable territory for general graphs. The authors even show this hardness holds on perfect graphs, which are, informally, graphs with a clean, well-behaved structure—adding a professional salt to the wound: the difficulty is not simply tied to rough, pathological inputs.

Why this matters is not just about having another taxonomy of computational hardness. It reveals a subtle, almost philosophical truth about two seemingly close problems: allowing overlap (ECC) vs forcing a partition (ECP) interacts with a natural lower bound in very different ways when you measure against α(G). The difference is sharp and robust across a wide landscape of graph classes. For macroscopic intuition, think of ECC as a clubbing process that can reuse people across many clubs, while ECP insists each edge is assigned to one single club. That one-sample difference—shared membership vs exclusive assignment—creatively alters what counts as a small, manageable description of the whole network when you’re allowed to drift a little above a trivial baseline.

From theory to practice on sparse graphs

The Bergen team doesn’t stop at the abstract boundary. They show that in several sparse graph families the ECC/α problem becomes approachable again, thanks to parameterized algorithms that lean on other structural graph properties. If the graph has a small clique number ω, or if it is highly degenerate (a measure of how sparse the graph is locally), or if it belongs to the family of H-minor-free graphs (a broad and important family that includes planar graphs and many sparse networks), then ECC/α admits fixed-parameter or even subexponential-time algorithms with respect to k and the other parameter (ω, degeneracy, or the minor structure). The running times are carefully bounded and, crucially, they scale well when those graph parameters are small—a common situation in real-world networks where you don’t see gigantic cliques or dense cores everywhere.

One of the technical ideas behind these results is a clever way to separate the graph into two layers: simplicial cliques, which are forced by simple local structure, and the rest, which must be covered by the more complex, non-simplicial cliques. By peeling off the simplicial pieces and exploiting how many non-simplicial cliques could appear (bounded by roughly 2k in Key lemmas), the authors reduce the problem to smaller, more tractable subproblems. In sparse, minor-free graphs, they can push further, using structural decompositions and dynamic programming on tree-like representations that sparsify the combinatorics. The upshot is a path to practical exact algorithms for ECC/α in settings where many real networks live: networks with limited density and controlled local complexity rather than wild global chaos.

Beyond the mathematics, these results offer a pragmatic lens for researchers who work with social networks, biological networks, or software dependency graphs. When you know your network is not terribly dense and you can bound ω or the graph’s degeneracy, ECC/α and ECP/α aren’t just abstract curiosities—they become computable, with predictable performance as you dial k. In a field where people often settle for heuristics because worst-case intuition is bleak, this work carves out honest, principled guarantees for certain natural network classes, showing exactly where the brakes come on and where the engine can purr.

Why this matters for the future of graph algorithms

At the core, the study is about pushing the frontier of parameterized complexity in a natural, graph-theoretic setting. It asks and answers a deceptively simple question: what happens when you measure complexity not by the raw size of the solution but by how much you exceed a natural lower bound? The answers are not only mathematically elegant but practically resonant. They chart a map of where exact, guaranteed-optimal solutions to edge clique problems are feasible, and where we should lean on approximation, heuristics, or special-case algorithms tailored to particular graph structures.

It is also a reminder that the choice of parameter can flip the computational coin. The same problem that becomes tractable when you parameterize by the solution size k can flip to NP-hardness when parameterized by a related, but different, natural target. The ECC/α vs ECP/α split becomes a lens on how subtle structural constraints—like disjointness of edges in a partition or the lurking presence of independent sets—shape computational reality. For researchers and practitioners, the message is clear: in network design, data clustering, or bioinformatics pipelines that rely on clique decompositions, you should ask not just how many cliques you need, but how far you are willing to drift above a simple baseline and what the graph’s local structure permits. The answers could determine whether you should pursue exact, guaranteed-optimal schemes or pivot to scalable approximations and heuristics tailored to your graph’s sparsity profile.

As for the people behind the work, this is a collaboration anchored in the University of Bergen, with Fomin, Golovach, Sagunov, and Simonov at the helm, bridging diverse perspectives in graph theory and algorithms. The paper situates these ideas within a broader literature that connects classic problems with modern parameterized techniques and computational hardness bounds. It’s a snapshot of a vibrant research frontier where deep theory meets the messy realities of real networks. And even as the results settle a few long-standing questions, they open new questions about how far above natural bounds we should push, and which graph families will stay friendly when we do.

In the end, the edge clique mystery is not a single riddle but a spectrum. It asks us to look at a network and ask, with a wry smile, how much structure we truly need to explain it. The answer, it turns out, depends on whether we insist edges belong to one clique or allow them to be shared across many. It depends on the graph’s hidden geometry—the clique number, the degeneracy, the shoestring of minor-closed structure. And it depends, perhaps more than anything, on how close we are willing to get to a mathematical floor that is as simple as independence itself.