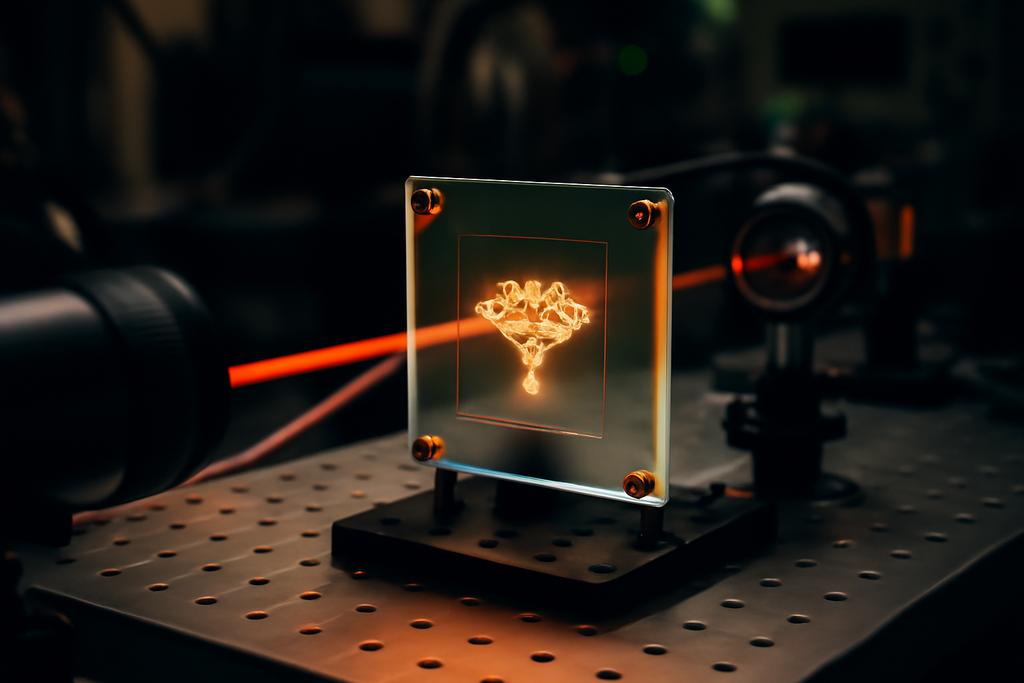

Light has always carried information, but only recently have we tried to choreograph it as a learning partner. Traditional AI training relies on electricity and silicon, grinding through colossal amounts of data on power-hungry hardware. It’s a race against heat, latency, and the planet’s tolerance for energy use as machine learning models grow hungrier and hungrier. Diffraction-based optical processors offer a tantalizing alternative: compute with light, in parallel, with astonishing energy efficiency. The catch has always been training. If you can’t train an optical network efficiently, its promise remains more about potential than practice.

From Tulane University’s Department of Physics and Engineering Physics, a team led by Manon P. Bart with coauthors Nick Sparks and Ryan T. Glasser has crossed a critical threshold. Their paper on efficient training for optical computing introduces a Fourier-based backpropagation method that dives straight into the physics of light. In practical terms, they’ve built a way for an optical network to learn faster by exploiting how light propagates, rather than fighting that physics with brute-force digital computation. The result isn’t just a clever trick; it’s a blueprint for scaling a class of light-based processors from toy experiments to something closer to real-world AI tooling.

Fourier magic cuts training time

At the heart of the diffractive optical network is a simple, powerful idea: information moves through a stack of layers, each layer reshaping a light field with a phase mask and then letting that light propagate to the next plane. The math that underpins these layers boils down to products of diagonal matrices (phase shifts) and circulant matrices (free-space propagation). In theory, any linear transform can be built from those pieces, but real hardware imposes a ceiling. The number of phases you can implement, the spacing between layers, and the finite resolution of a phase mask all constrain what you can realize. Previous training approaches often tried to compensate with heavy numerical optimization—pulling levers in a high-dimensional space that is both expensive and brittle.
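To make the diagonal-and-circulant picture concrete, here is a minimal sketch in plain Python. It is an illustration, not the authors' code: the function names are hypothetical, the fields are one-dimensional for brevity, and a naive discrete Fourier transform stands in for the FFT a real implementation would use. It shows that multiplying by a circulant matrix gives the same result as an element-wise product in the Fourier domain, and that a phase mask is just a diagonal multiplication.

```python
import cmath

def dft(x, inverse=False):
    """Naive O(N^2) discrete Fourier transform (stand-in for an FFT)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[k] * cmath.exp(sign * 2j * cmath.pi * j * k / n) for k in range(n))
           for j in range(n)]
    return [v / n for v in out] if inverse else out

def circulant_matvec(kernel, x):
    """Dense product C @ x, where C[i][j] = kernel[(i - j) % n]."""
    n = len(x)
    return [sum(kernel[(i - j) % n] * x[j] for j in range(n)) for i in range(n)]

def fourier_matvec(kernel, x):
    """Same product, computed as an element-wise multiply in the Fourier domain."""
    K, X = dft(kernel), dft(x)
    return dft([k * v for k, v in zip(K, X)], inverse=True)

def apply_phase_mask(phases, x):
    """Diagonal factor: each sample is multiplied by exp(i * phase)."""
    return [cmath.exp(1j * p) * v for p, v in zip(phases, x)]

# Hypothetical toy data: a 5-sample propagation kernel and input field.
kernel = [1, 2j, -1, 0.5, 0]
field = [1, 0, 2, -1j, 3]
dense = circulant_matvec(kernel, field)
fast = fourier_matvec(kernel, field)
print(max(abs(a - b) for a, b in zip(dense, fast)))  # tiny rounding-level difference
```

The dense route touches every matrix entry, while the Fourier route never builds the matrix at all, which is the property the training method relies on.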

Bart, Sparks, and Glasser flip the script by introducing a backpropagation algorithm that uses plane wave decomposition via the Fourier transform. In the Fourier domain, convolutions become simple element‑wise multiplications. That means you can compute the gradients with respect to all trainable phase elements in a layer at once, without constructing and differentiating the full, gigantic transformation matrix that would otherwise make training intractable. It is a physics‑aware shortcut: you stay faithful to how light actually moves, but you do the heavy lifting with fast Fourier operations instead of sprawling numerical matrices.

Plane-wave decomposition becomes the backbone of their method. The gradients are not dumped into a giant Jacobian to be squeezed through a maze of linear algebra; they’re streamed through in the Fourier domain with straightforward, element‑wise math. This is a big shift from standard backprop in electronic neural networks, but the math aligns surprisingly closely. The authors show that, after accounting for the optical phase term, the gradient equations resemble familiar backpropagation formulas, just dressed in a complex, wave-based outfit. In other words, the same intuition you have for adjusting a weight in a neural net still applies, but the conduit is light, not copper and silicon.
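The idea of streaming gradients through the Fourier domain can be sketched for a single layer. The following is an assumption-laden toy, not the paper's equations: it uses a one-dimensional field, a squared-error loss chosen for simplicity, a made-up transfer function `H`, and a naive DFT in place of an FFT. The error at the output is propagated backward by multiplying with the conjugate transfer function, and the gradient for every phase element then falls out of one element-wise expression.

```python
import cmath

def dft(x, inverse=False):
    """Naive O(N^2) DFT; a real implementation would call an FFT library."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[k] * cmath.exp(sign * 2j * cmath.pi * j * k / n) for k in range(n))
           for j in range(n)]
    return [v / n for v in out] if inverse else out

def propagate(H, field, adjoint=False):
    """Apply propagation (or its adjoint) as an element-wise product in Fourier space."""
    F = dft(field)
    return dft([(h.conjugate() if adjoint else h) * f for h, f in zip(H, F)],
               inverse=True)

def forward(phases, u_in, H):
    """One layer: phase mask, then propagation. Returns masked field and output."""
    masked = [cmath.exp(1j * p) * u for p, u in zip(phases, u_in)]
    return masked, propagate(H, masked)

def loss(phases, u_in, H, target):
    """Squared-error loss between output field and target (an assumed choice)."""
    _, out = forward(phases, u_in, H)
    return sum(abs(o - t) ** 2 for o, t in zip(out, target))

def phase_gradient(phases, u_in, H, target):
    """Gradient of the loss with respect to every phase element at once."""
    masked, out = forward(phases, u_in, H)
    err = [o - t for o, t in zip(out, target)]
    back = propagate(H, err, adjoint=True)  # back-propagated error field
    return [2 * (m.conjugate() * b).imag for m, b in zip(masked, back)]

# Hypothetical toy problem with six samples.
n = 6
H = [cmath.exp(-0.3j * k * k) for k in range(n)]        # made-up transfer function
u_in = [complex(1 + 0.1 * k, 0.2 * k) for k in range(n)]
target = [complex(0.5 * k, -0.1) for k in range(n)]
phases = [0.4 * k for k in range(n)]
print(phase_gradient(phases, u_in, H, target))
```

The structure mirrors ordinary backpropagation: a forward pass, an error signal, a backward pass through the transposed (here, adjoint) operator, and a local product at the trainable element. A finite-difference check on `loss` is an easy way to confirm the analytic gradient in a sketch like this.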

To prove the idea, the authors ran a classic AI test—MNIST digit classification—on a six-layer optical network with layers spaced tens of centimeters apart. They demonstrated that a handful of trainable phase masks can yield high performance: about 98% training accuracy and 97% test accuracy after 30 epochs, using a setup consistent with feasible diffractive optics. Beyond classification, the team showed that two phase masks could implement arbitrary linear transformations on input–output pairs even when the input is a 1000 by 1000 image. That’s a demonstration of substantial expressivity with a surprisingly small, physically realizable set of trainable parameters.

To keep training stable, the authors add a few pragmatic refinements. A logical detector layer aggregates the network’s final intensities into class scores, producing a robust signal for learning when combined with a cross-entropy loss. They also apply learning rate decay and a normalization step to keep the phase values within a manageable range, preventing the familiar problem of vanishing or exploding gradients. These touches are less glamorous than the core Fourier insight, but they’re essential if you want a learning process that doesn’t stall partway through training.
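These stabilizing pieces are simple enough to sketch. The article does not give the exact formulas, so everything below is an assumption made for illustration: detector regions are lists of pixel indices, class scores go through a softmax before the cross-entropy, the learning rate decays exponentially, and "normalization" is taken to mean wrapping phases into the range from 0 to 2π. All function names are hypothetical.

```python
import math

def detector_scores(intensity, regions):
    """Sum the output intensity over each class's detector region."""
    return [sum(intensity[i] for i in region) for region in regions]

def cross_entropy(scores, label):
    """Softmax cross-entropy, with the usual max shift for numerical stability."""
    m = max(scores)
    log_norm = m + math.log(sum(math.exp(s - m) for s in scores))
    return log_norm - scores[label]

def decayed_lr(base_lr, epoch, decay=0.9):
    """Exponential learning-rate decay (assumed schedule)."""
    return base_lr * decay ** epoch

def wrap_phases(phases):
    """Keep phases in [0, 2*pi); a phase mask is unchanged by multiples of 2*pi."""
    return [p % (2 * math.pi) for p in phases]

# Hypothetical four-pixel output split into two class regions.
intensity = [0.1, 0.9, 2.0, 0.5]
regions = [[0, 1], [2, 3]]
scores = detector_scores(intensity, regions)
print(scores, cross_entropy(scores, label=1))
print(decayed_lr(0.1, epoch=2), wrap_phases([-0.5, 7.0]))
```

Wrapping is safe because exp(iφ) is periodic, so the optical behavior of the mask is the same before and after.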

Speedups that could change how we build AI hardware

The practical punchline is not just that training is faster in a lab notebook, but that the method scales with model size in a way that makes large optical nets plausible. The team quantifies the speed advantage: about eight times faster per image for non‑power‑of‑two input sizes and roughly three times faster for power‑of‑two sizes, compared with standard auto‑diff backpropagation on conventional hardware. The underlying scaling follows a familiar FFT-friendly pattern, roughly N squared times log N for an N by N input field, which is a big deal when you’re handling high‑resolution data in parallel across many optical channels.
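A back-of-the-envelope calculation shows why that scaling matters. This sketch assumes N is the side length of an N by N input field and ignores constant factors. It compares the N squared times log N growth with the cost of storing the full transformation as a dense matrix, which has N squared rows and N squared columns. It says nothing about the measured three- to eight-fold speedups, which were reported against auto-diff rather than against a dense matrix.

```python
import math

def dense_matrix_entries(n):
    """Entries in a dense matrix acting on a flattened n-by-n field: (n^2)^2."""
    return (n * n) ** 2

def fft_cost(n):
    """Rough operation count for a 2D FFT on an n-by-n field: n^2 * log2(n)."""
    return n * n * math.log2(n)

for n in (128, 1000):
    ratio = dense_matrix_entries(n) / fft_cost(n)
    print(f"N={n}: dense ~{dense_matrix_entries(n):.2e}, "
          f"FFT ~{fft_cost(n):.2e}, ratio ~{ratio:.0f}")
```

Even at a modest 128 by 128 resolution the dense representation is thousands of times larger than the FFT estimate, and the gap widens quickly with resolution.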

Crucially, these improvements came without requiring a GPU. The authors measured per‑iteration times on a modest MacBook Pro CPU and found that a six‑layer network processing a 128 by 128 field could complete a forward and backward pass in about 10.5 milliseconds. They also compare their CPU-based Fourier backprop to existing in‑situ training schemes that rely on capturing optical measurements and phase retrieval; those approaches can run into hundreds of milliseconds per iteration due to sensor and imaging bottlenecks. In other words, the Fourier backprop not only aligns with the physics of light but also makes the computational side of learning feel light itself, at least on conventional hardware.

Beyond raw speed, the paper makes a broader case for why this matters. Diffractive optical networks are already valued for their energy efficiency and parallelism; they shine when you can trade a lot of computation for a little energy, and when you need to process multi‑dimensional data in real time. Training has been the stubborn bottleneck—the phase masks are clever, but figuring out which masks to use for a given task could burn through compute time. The Fourier backprop approach changes the math of how we learn these masks, not just how we apply them. It’s a step toward scalable, learnable optical processors that could, in principle, train themselves with far less energy than a traditional AI stack requires.

Of course, the work is not a magic wand. The researchers are candid about the constraints: a real optical device has finite resolution, noise, wavelength dependence, and a limited number of layers. The backprop algorithm itself assumes a differentiable forward model that accurately captures light’s behavior through the layers. Still, the authors argue that, because their method targets the trainable diagonal factors and uses a fixed number of factors per layer, it remains broadly applicable even when you don’t hit the ideal algebraic decomposition exactly. And because it hinges on the Fourier basis, it bakes in a universal computational tool that hardware teams already know how to implement and optimize.

For people who care about the planet, there is a practical resonance here. Training today is a major driver of energy use in AI. If optical processors can reach maturity not only for inference but for learning as well, we could see substantial energy savings—especially for edge devices or data centers that demand massive parallel throughput. It’s not a panacea, but it’s a compelling path toward a world where AI learns more with less power, using the physics of light as a natural resource rather than another data‑center electricity bill.

The bigger picture: what this means for science, engineering, and imagination

The Tulane study is more than a clever speed‑up; it hints at a broader way to think about learning in physical systems. If your linear transformations are built from a known, constrained set of blocks (diagonal phase edits and circulant propagations), then you can tailor the learning algorithm to the physics rather than bending physics to fit a generic neural network optimizer. The Fourier‑based backpropagation method points to a general recipe: identify the natural basis in which your system’s operators simplify, and carry out the learning in that basis. This idea isn’t unique to optics; it resonates with signal processing, metamaterials, and even some quantum information tasks where linear maps dominate and structure can be engineered rather than brute‑forced.

The paper also demonstrates a practical, incremental path to more capable optical machines. The ability to realize arbitrary linear transformations with just a couple of phase masks, in a way compatible with high‑resolution, real‑world inputs, lowers the barrier to building optical processors that can handle the kinds of tasks that today sit on big GPUs. Think high‑throughput image processing, real‑time denoising, or scene understanding directly in optical hardware, with learning happening on a timescale that doesn’t exhaust energy budgets or require cooldown periods.

There is a human story here as well. A small team at Tulane, working within the discipline of physics and engineering, reframes a problem that had been bottlenecked by the digital brainwork required to train optical networks. They lean on a century‑old mathematical tool—the Fourier transform—and repurpose it as a modern engine for learning. It’s a reminder that innovation often comes not from inventing a brand new gadget, but from rethinking how we use the tools we already have, in a language that suits the physics we want to exploit. If the next decade brings optical processors into real, everyday AI workloads, papers like this will be cited as the moment when training finally caught up with inference in the world of light.