Imagine a world where we could adjust the behavior of artificial intelligence without the massive computational costs and risks of retraining it. That’s the promise of a groundbreaking technique developed by researchers at UNC Chapel Hill and detailed in their paper, GRAINS (Gradient-based Attribution for Inference-Time Steering of LLMs and VLMs). Forget painstaking retraining: this method tweaks an AI’s behavior on the fly, adjusting its internal activations in a way that’s both precise and understandable.

The Problem With Retraining AI

Large language models (LLMs) and vision-language models (VLMs) are like powerful, but sometimes unruly, geniuses. They excel at many tasks, but they often generate undesirable outputs: hallucinations, biases, toxic responses, or simply inaccurate answers. The standard solution — retraining — is resource-intensive, time-consuming, and risky. It’s akin to trying to re-educate a highly skilled surgeon by starting from scratch; you risk losing everything they already know. Moreover, the retraining process could lead to what’s known as ‘catastrophic forgetting,’ where the AI loses previously acquired skills as it learns new ones.

GRAINS: A Surgical Approach to AI Tuning

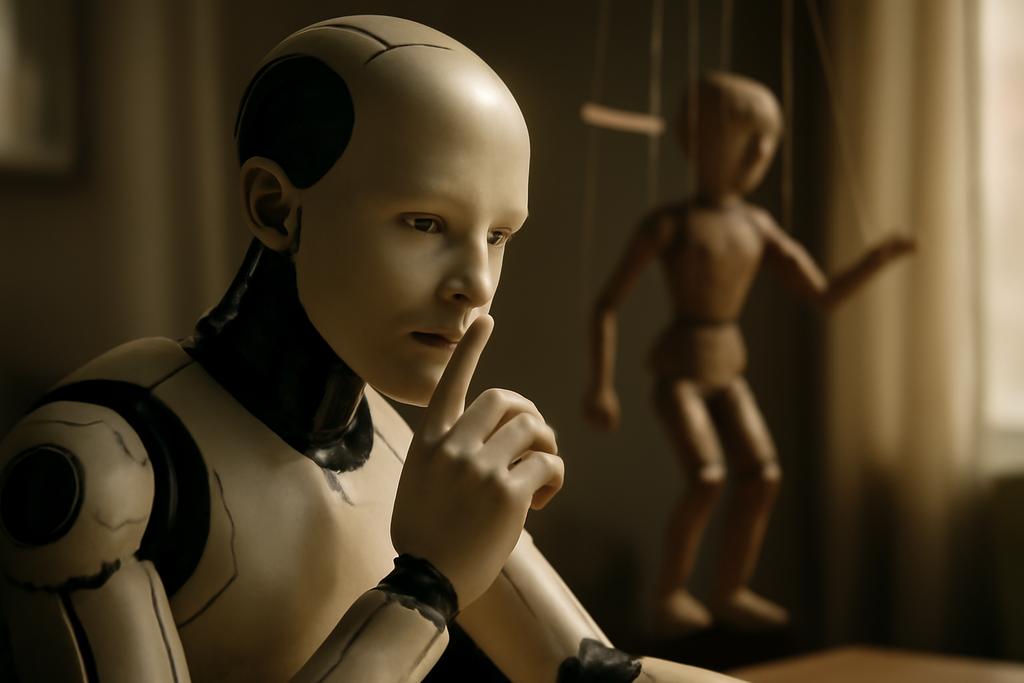

GRAINS offers a radical alternative. Instead of updating the model’s weights, it uses a method called ‘inference-time steering.’ This is like subtly guiding the AI’s thinking process while it’s performing a task, rather than reprogramming its entire brain. The core of GRAINS is its ability to pinpoint the specific input tokens, such as words or image patches, that most heavily influence an AI’s output.
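To make the contrast with retraining concrete, here is a minimal sketch of what inference-time steering means mechanically: one hidden-state vector produced during the forward pass is shifted along a steering direction, while the model’s weights stay untouched. The function names, the strength parameter alpha, and the rescaling step are assumptions for illustration. Rescaling to the original norm is one common way to keep the nudge from distorting the activation’s scale; it is not a claim about GRAINS’s exact update rule.

```python
import math


def l2_norm(vec):
    """Euclidean length of a vector stored as a list of floats."""
    return math.sqrt(sum(x * x for x in vec))


def steer_hidden_state(hidden, direction, alpha):
    """Shift one hidden-state vector along `direction`, then rescale it.

    hidden    -- activation produced by some transformer layer (list of floats)
    direction -- steering vector with the same dimensionality (hypothetical)
    alpha     -- steering strength (hypothetical hyperparameter)

    Only this activation changes; no model weights are modified.
    """
    if len(hidden) != len(direction):
        raise ValueError("hidden state and direction must have the same size")
    shifted = [h + alpha * d for h, d in zip(hidden, direction)]
    # Assumed design choice: restore the original norm so the nudge changes
    # the vector's direction rather than its magnitude.
    original_norm = l2_norm(hidden)
    shifted_norm = l2_norm(shifted)
    if shifted_norm == 0.0:
        return list(hidden)
    scale = original_norm / shifted_norm
    return [s * scale for s in shifted]


if __name__ == "__main__":
    h = [3.0, 4.0]  # toy activation with norm 5
    v = [1.0, 0.0]  # toy steering direction
    steered = steer_hidden_state(h, v, alpha=2.0)
    print(steered, l2_norm(steered))
```

In a real model, an update like this would typically run inside a hook on one transformer layer (PyTorch’s register_forward_hook is one way to attach it) at every generation step, so the steering happens while the model answers rather than before it is deployed.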

The researchers achieve this with a clever use of Integrated Gradients, an attribution technique that estimates how much each input token contributes to the final output by accumulating the model’s gradients along a path from a neutral baseline input to the actual input. Think of it as detective work inside the AI: it highlights the ‘culprits’ behind unwanted behaviors. Once identified, these tokens are used to build steering vectors, directions in the model’s internal representation space that nudge its activations toward better answers.
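Integrated Gradients attributes a scalar output F to each input feature i as (x_i minus x'_i) multiplied by the average gradient of F along the straight line from a baseline x' to the input x. The sketch below is hypothetical: a toy “model” (tanh of summed token projections) with a hand-derived gradient stands in for an LLM, the baseline is all zeros, and 200 midpoint steps approximate the path integral. Per-token scores come from summing attributions over embedding dimensions. What GRAINS actually attributes, and which baseline it uses, may differ; this only illustrates the technique itself.

```python
import math


def toy_score(embeddings, weights):
    """Hypothetical stand-in for a model: tanh of summed token projections."""
    s = sum(sum(w * x for w, x in zip(weights, tok)) for tok in embeddings)
    return math.tanh(s)


def toy_score_grad(embeddings, weights):
    """Analytic gradient of toy_score with respect to every embedding entry."""
    s = sum(sum(w * x for w, x in zip(weights, tok)) for tok in embeddings)
    dtanh = 1.0 - math.tanh(s) ** 2
    return [[dtanh * w for w in weights] for _ in embeddings]


def integrated_gradients(embeddings, weights, steps=200):
    """Per-token Integrated Gradients against an all-zero baseline.

    Averages gradients at the midpoints of `steps` equal segments of the
    straight path from baseline to input, multiplies by (input - baseline),
    and sums over embedding dimensions to get one score per token.
    """
    baseline = [[0.0] * len(tok) for tok in embeddings]
    avg_grad = [[0.0] * len(tok) for tok in embeddings]
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [
            [b + alpha * (x - b) for x, b in zip(tok, base)]
            for tok, base in zip(embeddings, baseline)
        ]
        grad = toy_score_grad(point, weights)
        for t, g_tok in enumerate(grad):
            for d, g in enumerate(g_tok):
                avg_grad[t][d] += g / steps
    return [
        sum((x - b) * g for x, b, g in zip(tok, base, avg))
        for tok, base, avg in zip(embeddings, baseline, avg_grad)
    ]


if __name__ == "__main__":
    tokens = [[1.0, 0.5], [-0.8, 0.2], [0.1, 0.1]]  # toy token embeddings
    weights = [0.6, -0.4]                            # toy model parameters
    print(integrated_gradients(tokens, weights))
```

A useful sanity check is completeness: the per-token attributions should sum to roughly F(input) minus F(baseline). For a real network, libraries such as Captum provide Integrated Gradients implementations that obtain the gradients through autograd instead of by hand.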

It’s not a blunt instrument, either. GRAINS separates the ‘good’ tokens (those contributing to desirable answers) from the ‘bad’ ones (those driving problematic outputs). This targeted approach is critical; it’s like adjusting individual musical notes instead of changing the entire orchestration. The researchers, Duy Nguyen, Archiki Prasad, Elias Stengel-Eskin, and Mohit Bansal, achieved remarkable results across a range of benchmarks.
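Separating ‘good’ from ‘bad’ tokens can be sketched as follows, assuming per-token attribution scores (positive means the token pushes toward the desirable answer) and per-token hidden states from one layer are already available. Taking the difference between the mean hidden state of the top-k positive tokens and that of the top-k negative tokens is a simplified, hypothetical construction chosen for illustration; the paper’s own way of turning attributions into steering vectors may differ.

```python
import math


def top_k_split(attributions, k):
    """Indices of up to k most positive and k most negative tokens."""
    order = sorted(range(len(attributions)), key=lambda i: attributions[i])
    negative = [i for i in order[:k] if attributions[i] < 0]
    positive = [i for i in reversed(order[-k:]) if attributions[i] > 0]
    return positive, negative


def mean_vector(vectors):
    """Element-wise mean of equally sized vectors."""
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


def contrastive_direction(hidden_states, attributions, k):
    """Unit vector pointing from 'bad'-token states toward 'good'-token states.

    Hypothetical simplification: mean(good states) minus mean(bad states).
    """
    positive, negative = top_k_split(attributions, k)
    if not positive or not negative:
        raise ValueError("need at least one positive and one negative token")
    pos_mean = mean_vector([hidden_states[i] for i in positive])
    neg_mean = mean_vector([hidden_states[i] for i in negative])
    diff = [p - n for p, n in zip(pos_mean, neg_mean)]
    norm = math.sqrt(sum(x * x for x in diff))
    if norm == 0.0:
        raise ValueError("good and bad token states coincide; no direction")
    return [x / norm for x in diff]


if __name__ == "__main__":
    attrs = [0.9, -0.7, 0.05, -0.02, 0.4]  # toy attribution scores
    states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [0.2, 0.3], [0.8, 0.1]]
    print(top_k_split(attrs, 2), contrastive_direction(states, attrs, 2))
```

Keeping the positive and negative sets apart, rather than pooling all high-magnitude tokens, is what lets the resulting direction point away from problematic behavior and toward desirable behavior at the same time.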

Beyond Words: Seeing and Understanding

What’s particularly noteworthy is that GRAINS works with both text-only LLMs and vision-language models. This is a huge leap; many existing methods struggle with the complex interplay between visual and textual information. GRAINS handles this by analyzing text and image tokens together, identifying which ones contribute most to right or wrong answers. This level of multimodal understanding isn’t just about technical prowess; it arguably mirrors the way humans combine what they see with what they read.
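For multimodal inputs, the same attribution idea applies if image patches and text are treated as one token sequence, which is how many vision-language models pass visual features to the language model. This hypothetical sketch tags each token with its modality, ranks all tokens jointly by attribution magnitude, and reports what share of the total attribution falls on each modality. The token labels and scores are illustrative values, not data from the paper.

```python
from collections import defaultdict


def rank_mixed_tokens(tokens, attributions, k):
    """Return the k tokens with the largest |attribution|, regardless of modality.

    tokens       -- list of (modality, label) pairs, e.g. ("image", "patch_3")
    attributions -- one signed score per token (positive helps, negative hurts)
    """
    if len(tokens) != len(attributions):
        raise ValueError("need exactly one attribution per token")
    scored = [(mod, label, a) for (mod, label), a in zip(tokens, attributions)]
    scored.sort(key=lambda item: abs(item[2]), reverse=True)
    return scored[:k]


def attribution_share_by_modality(tokens, attributions):
    """Fraction of total absolute attribution that falls on each modality."""
    totals = defaultdict(float)
    for (modality, _), a in zip(tokens, attributions):
        totals[modality] += abs(a)
    grand_total = sum(totals.values())
    if grand_total == 0.0:
        return {m: 0.0 for m in totals}
    return {m: t / grand_total for m, t in totals.items()}


if __name__ == "__main__":
    toks = [("image", "patch_0"), ("image", "patch_1"),
            ("text", "What"), ("text", "color"), ("text", "dog")]
    attrs = [0.30, -0.05, 0.02, 0.25, -0.38]
    print(rank_mixed_tokens(toks, attrs, 3))
    print(attribution_share_by_modality(toks, attrs))
```

Ranking both modalities on one scale is the point of the design: an image patch and a word compete directly for the ‘culprit’ label instead of being analyzed in separate passes.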

The Impact: A More Responsible AI

The implications of GRAINS are profound. It has the potential to make AI systems safer, more truthful, and less biased without the high costs and risks of retraining. The results bear this out: GRAINS improved performance across a wide range of tasks, reducing hallucination rates, boosting truthfulness, and improving safety. These gains held across different AI models, showcasing the technique’s adaptability and robustness.

Moreover, GRAINS preserves the AI’s general capabilities, unlike some other methods that sacrifice general performance to improve specific behaviors. This speaks to the precision of the approach and suggests that we can improve AI without compromising its overall effectiveness, a substantial advance in responsible AI development.

Looking Ahead: A More Human-Centered AI

The work on GRAINS isn’t just about technical efficiency; it’s about building more human-centered AI. By making AI more understandable and controllable, we move toward systems that are more reliable, transparent, and aligned with human values. The ability to adjust an AI’s behavior on the fly, without retraining, also allows more agile responses to evolving ethical considerations and safety concerns.

The research opens up a wealth of possibilities, moving beyond mere tweaking to potentially enable more sophisticated forms of AI collaboration. Instead of simply fixing errors, we might be able to actively guide an AI’s behavior in real time, almost like having a conversation with it. This suggests that the future of AI may be less about building entirely new models and more about learning to steer the ones we already have effectively.