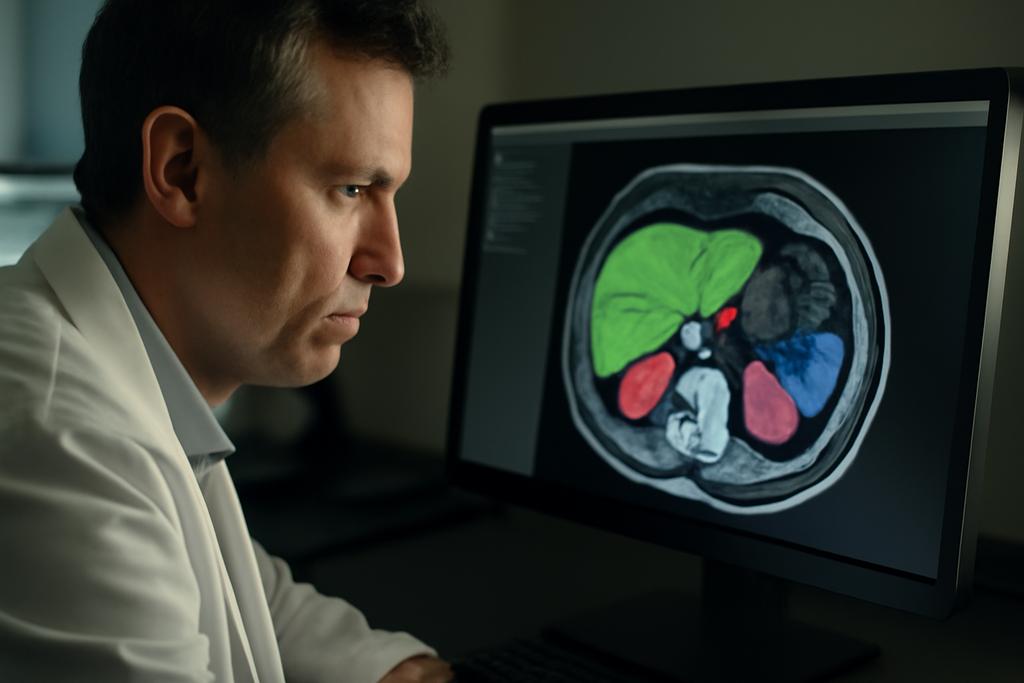

Medical imaging is undergoing a quiet revolution. For decades, radiologists have painstakingly traced the outlines of organs on MRI scans, a process that’s both time-consuming and prone to human error. But now, artificial intelligence is stepping in, offering the potential to automate this crucial task and vastly improve the speed and accuracy of diagnoses.

The Challenge of Abdominal MRI Segmentation

The human abdomen is a complex landscape of organs, each with its own unique texture and shape. Segmenting this area on MRI scans, meaning precisely outlining each individual organ, is exceptionally difficult. Unlike CT scans, whose signal intensities are comparatively uniform, MRI scans vary significantly with the scanner, the imaging parameters, and even the patient’s individual physiology. This ‘domain gap,’ as researchers call it, makes it difficult to train AI models that generalize reliably across different types of scans. The same variability makes it time-consuming and expensive to manually annotate the training datasets these models depend on.

A Benchmark for Better AI

Researchers at Brigham and Women’s Hospital, Harvard University, along with collaborators at Isomics, Inria, and Indiana University, have tackled this challenge head-on. Led by Deepa Krishnaswamy and Cosmin Ciausu, the team developed a comprehensive benchmark for evaluating state-of-the-art AI models designed for abdominal MRI segmentation. The benchmark allows objective comparisons across different models and datasets. Their work brings much-needed clarity and rigor to an area rife with claims of major advances but short on solid benchmarking.

Four Models, Three Datasets

The researchers evaluated four AI models. Three of them used conventional supervised deep learning: they were trained on large, manually annotated MRI datasets, a process that requires significant time and effort from radiologists. The fourth model took a radically different approach. Named ABDSynth, it leveraged a technique called SynthSeg. Instead of being trained on real MRI images, ABDSynth started from CT scans and used a synthesis algorithm to generate synthetic MRI scans together with their corresponding segmentations. This significantly reduced the reliance on expensive and time-consuming manual annotation of MRI data.
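To make the idea of training on synthetic images more concrete, here is a minimal sketch of label-driven image synthesis in the spirit of SynthSeg. It is a deliberately simplified illustration, not the ABDSynth or SynthSeg implementation: the function name `synthesize_image`, the intensity ranges, and the linear bias field are all assumptions chosen for readability. Each organ label gets a randomly drawn mean intensity and noise level, so every call produces a different contrast from the same segmentation.

```python
import numpy as np

def synthesize_image(label_map, rng):
    """Draw one random-contrast image from an integer label map (illustrative only)."""
    labels = np.unique(label_map)
    # Assumed ranges: one random mean and noise level per label.
    means = rng.uniform(0.0, 1.0, size=labels.size)
    stds = rng.uniform(0.01, 0.1, size=labels.size)

    # Position of each voxel's label within the sorted `labels` array.
    idx = np.searchsorted(labels, label_map)
    image = rng.normal(means[idx], stds[idx])

    # Smooth multiplicative bias field: a random linear ramp per axis (a simplification).
    axes = [np.linspace(-1.0, 1.0, n) for n in label_map.shape]
    grids = np.meshgrid(*axes, indexing="ij")
    coeffs = rng.uniform(-0.2, 0.2, size=len(grids))
    image = image * np.exp(sum(c * g for c, g in zip(coeffs, grids)))

    # Rescale intensities to [0, 1].
    span = image.max() - image.min()
    return (image - image.min()) / span if span > 0 else np.zeros_like(image)

# Toy label map: background (0), one "organ" (1), and a smaller structure inside it (5).
toy_labels = np.zeros((8, 8, 8), dtype=int)
toy_labels[2:6, 2:6, 2:6] = 1
toy_labels[3:5, 3:5, 3:5] = 5

rng = np.random.default_rng(0)
synthetic_a = synthesize_image(toy_labels, rng)
synthetic_b = synthesize_image(toy_labels, rng)  # same anatomy, different contrast
```

Because the segmentation is the input, every synthetic image comes with a perfect ground-truth label map for free, which is why this style of training can sidestep manual MRI annotation.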

The models were tested against three publicly available MRI datasets (AMOS, CHAOS, and LiverHCCSeg), chosen for their diversity in terms of MRI scanners, sequences, and subject populations (healthy individuals and those with various diseases). This design ensured that the benchmark measured not just how well the models performed on specific types of data, but how well they generalized to new and unseen data, a crucial property for any AI system meant for wide deployment in clinical settings.
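How is a segmentation scored against a reference annotation? The article does not name the metric used, so the following is only a sketch of one common choice, the Dice overlap coefficient, computed per organ. The function name `dice_per_label` and the toy label IDs are assumptions for illustration.

```python
import numpy as np

def dice_per_label(pred, truth, labels):
    """Dice overlap for each label: 2 * |P and T| / (|P| + |T|)."""
    scores = {}
    for label in labels:
        p = pred == label
        t = truth == label
        denom = p.sum() + t.sum()
        # If the label is absent from both masks, the score is undefined.
        scores[label] = float("nan") if denom == 0 else float(2.0 * np.logical_and(p, t).sum() / denom)
    return scores

# Toy 2D example with hypothetical label IDs 1 and 2.
truth = np.array([[1, 1, 0],
                  [2, 2, 0]])
pred = np.array([[1, 0, 0],
                 [2, 2, 2]])

scores = dice_per_label(pred, truth, labels=[1, 2])
```

A score of 1 means perfect overlap and 0 means none. Reporting the score per organ and per dataset, rather than as a single average, makes it easier to see where a model fails to generalize.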

The Results: A Tale of Trade-offs

The results revealed a fascinating interplay between training data, model performance, and computational efficiency. The models trained on extensive, manually annotated MRI datasets (MRSegmentator, MRISegmentator-Abdomen, TotalSegmentator MRI) generally outperformed ABDSynth in terms of accuracy. This reflects the inherent advantage of learning directly from real images. However, ABDSynth demonstrated impressive generalizability despite using only CT data for training. It is also significantly faster and requires less memory than the other models, a vital property for deployment in resource-constrained environments.

The study underscores the importance of carefully evaluating and benchmarking AI models in medical imaging. What might seem like a simple task, automatically segmenting organs on an image, actually requires solving many complex issues related to data variability, annotation, and computational efficiency. The trade-offs highlighted in this work will inform the development of future AI models, leading to more robust and clinically useful tools.

Beyond the Benchmark: Implications for the Future

This research has important implications beyond the immediate goal of improving abdominal MRI segmentation. The work provides a clear example of how clever algorithmic techniques, such as SynthSeg, can alleviate the burden of manual annotation, a major bottleneck in AI development. This has the potential to dramatically accelerate the adoption of AI in medical imaging, democratizing access to advanced diagnostic tools that were previously inaccessible due to limitations in data availability.

The researchers also highlight the importance of considering the broader context of clinical practice, such as consistency in anatomical definitions. The different models, even those exhibiting excellent accuracy in isolation, can sometimes yield slightly different segmentations because of nuanced differences in how organs are defined. Addressing such discrepancies will be crucial for seamless integration of AI tools into clinical workflows.
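One practical consequence of differing anatomical definitions is that model outputs usually need to be mapped onto a shared label scheme before they can be compared. The sketch below assumes a hypothetical case in which one model labels the left and right kidneys separately while another uses a single kidney label. The label IDs and the name `remap_labels` are invented for illustration and do not come from any of the models in the study.

```python
import numpy as np

def remap_labels(seg, mapping):
    """Map model-specific label IDs to a shared scheme; unmapped IDs become background (0)."""
    out = np.zeros_like(seg)
    for src, dst in mapping.items():
        out[seg == src] = dst
    return out

# Hypothetical schemes: model A splits the kidneys (2 = right, 3 = left),
# model B uses a single kidney label (7). Both use 1 for liver.
model_a_to_common = {1: 1, 2: 2, 3: 2}
model_b_to_common = {1: 1, 7: 2}

seg_a = np.array([1, 2, 3, 0])
seg_b = np.array([1, 7, 7, 0])

common_a = remap_labels(seg_a, model_a_to_common)
common_b = remap_labels(seg_b, model_b_to_common)
```

Remapping only reconciles which labels exist. It cannot fix disagreements about where an organ's boundary lies, such as whether nearby vessels count as part of it, and those differences still surface as lower overlap scores.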

In essence, this study serves as a valuable guide for researchers and clinicians alike. It showcases the remarkable potential of AI to revolutionize medical imaging, while simultaneously emphasizing the necessity of rigorous evaluation and careful consideration of clinical context.