Beta decay is one of those quiet keystones of physics. It’s the process by which a neutron turns into a proton, an electron, and a ghostly antineutrino, a tiny rearrangement that reverberates from the heart of atoms to the fabric of the cosmos. For decades, scientists have used clever approximations to predict how fast these decays should happen in different nuclei, but nailing the numbers from first principles has remained stubbornly hard. The reason is easy to state: the nucleus is a many-body quantum system, a crowded orchestra of protons and neutrons dancing under the rules of the strong force, with the weak force whispering in to flip spins and swap identities. Getting that whisper right requires a precise accounting of how the nucleons interact with one another, and of how the external weak probe couples to the system as a whole.

The study we’re talking about, conducted by researchers from Peking University in Beijing, led by Teng Wang and Xu Feng with Bing-Nan Lu, tackles this head-on using the nuclear lattice effective field theory (NLEFT). Think of it as a way to put the whole nuclear problem onto a spatial lattice—like discretizing a map of the countryside so you can run Monte Carlo weather simulations on it. But this isn’t a crude grid; it’s a carefully constructed, ab initio framework that builds the nuclear forces directly from chiral effective field theory. The team couples next-to-next-to-leading order (NNLO) two- and three-body interactions with one- and two-body axial currents, all derived consistently within the same effective theory. The result is a self-contained, first-principles approach to weak processes in nuclei, not a patchwork of fitted adjustments.

At the heart of the work is a two-step strategy to tame the sign problem that plagues quantum Monte Carlo methods for fermions. The authors introduce a non-perturbative anchor, a Hamiltonian H0 with an approximate Wigner SU(4) symmetry, which suppresses the sign problem enough to let the calculations breathe. They then treat the difference between the full, physically richer Hamiltonian and H0 as a perturbation, allowing the Monte Carlo simulations to approach physical realism without being buried under statistical noise. It’s a bit like training a racehorse: you start on a simpler, friendlier course and gradually add the real-world details, watching for stability as you go.
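The "solve the easy part exactly, then perturb" idea can be illustrated with a toy matrix model. The sketch below is not the authors' code: the matrices `H0` and `V` are small invented stand-ins (a diagonal "easy" Hamiltonian plus a weak random symmetric correction), and the correction is kept only to first order.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 6

# Toy "easy" Hamiltonian H0 (assumption: diagonal, non-degenerate ground state).
H0 = np.diag(np.arange(n, dtype=float))

# Weak symmetric perturbation V, a stand-in for the symmetry-breaking terms.
A = rng.normal(size=(n, n))
V = 0.05 * (A + A.T) / 2

# Step 1: solve H0 exactly (the non-perturbative anchor).
e0, vecs = np.linalg.eigh(H0)
psi0 = vecs[:, 0]

# Step 2: first-order energy correction <psi0|V|psi0>.
e_first = e0[0] + psi0 @ V @ psi0

# Reference value: diagonalize the full toy Hamiltonian directly.
e_exact = np.linalg.eigvalsh(H0 + V)[0]

print(f"H0 only        : {e0[0]:.5f}")
print(f"first order    : {e_first:.5f}")
print(f"exact (H0 + V) : {e_exact:.5f}")
```

Because the first-order estimate is the expectation value of the full toy Hamiltonian in the unperturbed ground state, it can never fall below the exact ground-state energy. How close it gets depends on how weak `V` is compared with the level spacing of `H0`.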

These are not just technical tricks. They are the enabling steps that could let us predict weak processes across light and medium-mass nuclei with genuine, quantifiable uncertainties. That matters because beta decay is not just a textbook curiosity; it’s a key probe for physics beyond the Standard Model, a lens into how the weak force couples to the complex structure of a nucleus, and a crucible for testing our most trusted nuclear theories. It’s also a reminder that big leaps in theory often ride on patient, collaborative craft.

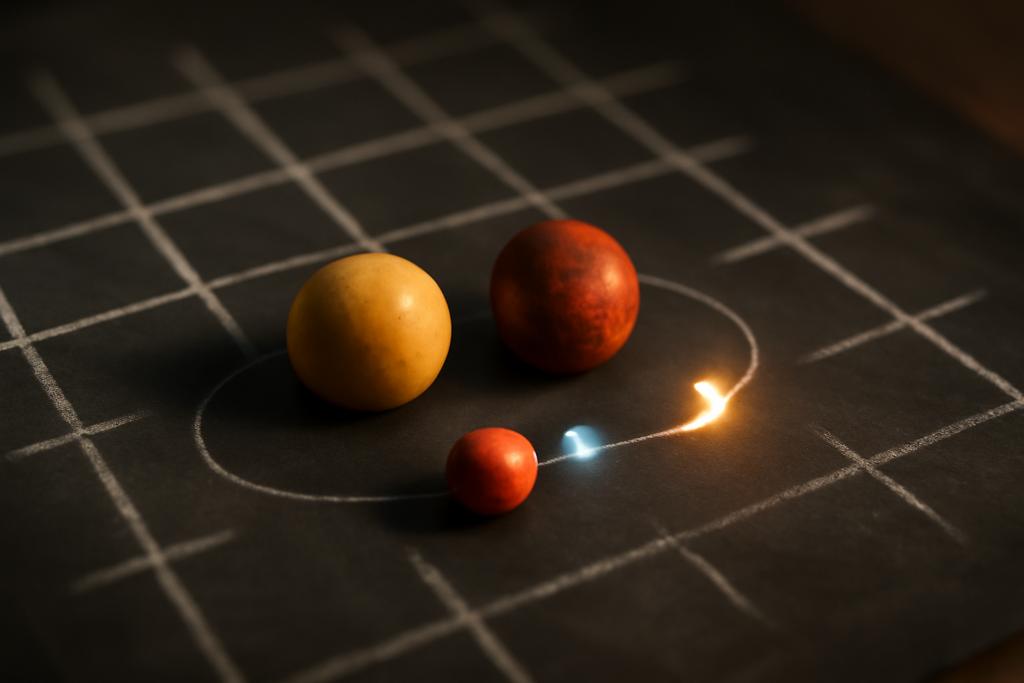

A Lattice View of the Nucleus

To see how the calculation unfolds, it helps to picture the nucleus on a quantum lattice, a grid where nucleons hop between neighboring sites. The force that binds the nucleons, whose exact form is unknown but which is constrained by the symmetries of quantum chromodynamics, appears as a set of interactions laid out on the grid. The team uses NNLO chiral interactions to describe the two-body and three-body forces that knit protons and neutrons together, and they couple these forces to the axial currents that drive beta decay. All of this is embedded in the same chiral effective field theory, which means the same language and the same small set of constants govern both the forces inside the nucleus and the way it responds to the weak probe from outside.
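To make "hopping between lattice sites" concrete, here is a minimal sketch of the kinetic energy of a single free nucleon on a one-dimensional periodic lattice. It is a toy far simpler than the three-dimensional, many-body setup in the study, and the lattice spacing `a` and the number of sites `L` are illustrative assumptions, not values taken from the paper.

```python
import numpy as np

HBARC = 197.327  # MeV fm
MASS = 938.92    # MeV, average nucleon mass
a = 1.32         # fm, assumed lattice spacing (illustrative)
L = 8            # number of sites on the ring (illustrative)

# Nearest-neighbour hopping form of -d^2/dx^2 on a periodic ring:
# 2 on the diagonal, -1 for a hop to the site on either side.
eye = np.eye(L)
minus_laplacian = 2 * eye - np.roll(eye, 1, axis=1) - np.roll(eye, -1, axis=1)

# Kinetic energy operator in MeV.
T = HBARC**2 / (2 * MASS * a**2) * minus_laplacian
energies = np.linalg.eigvalsh(T)

# Analytic lattice dispersion for the allowed momenta k = 2*pi*n / (L*a).
k = 2 * np.pi * np.arange(L) / (L * a)
expected = np.sort(HBARC**2 / (MASS * a**2) * (1 - np.cos(k * a)))

print(np.round(energies, 3))
print(np.round(expected, 3))
```

The two printed arrays should coincide: the hopping matrix reproduces the lattice dispersion relation, which approaches the familiar continuum kinetic energy only when the momentum is small compared with the inverse lattice spacing.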

One of the central technical moves is to work with an interaction that respects an approximate SU(4) symmetry, an idea from Wigner: at low energies, the nuclear force is nearly blind to which of the four spin and isospin states a nucleon occupies. The researchers build a non-perturbative H0 that preserves this symmetry and keeps the notorious sign problem at bay. They then add the symmetry-breaking pieces, namely the isospin-breaking terms and the explicit one-pion-exchange part, so that the final calculation describes real nuclei. The result is a two-step plan: master the simple, sign-problem-friendly core, then perturb around it to capture the details that matter for actual nuclei like helium and lithium isotopes.

On the lattice, nearly everything is a sum over many-body configurations, which is where the auxiliary-field quantum Monte Carlo method comes in. Yet Monte Carlo only works if the sign problem stays quiet enough for the statistics to converge. That’s the heart of the methodological achievement here: by choosing the leading-order Hamiltonian to be SU(4)-symmetric and by regularizing the pion-exchange piece with a lower cutoff in the perturbative part, the team keeps the statistical noise manageable. They solve the initial, simpler problem non-perturbatively and then add the perturbative corrections for the currents that carry the weak force, all the while checking the results against a more exact method, Lanczos diagonalization, where it is feasible, as in the 3H benchmark case. The net effect is a robust framework that blends non-perturbative power with controlled approximations.
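For readers unfamiliar with Lanczos diagonalization, the following is a minimal textbook-style sketch, not the authors' implementation. It finds the lowest eigenvalue of a symmetric matrix by building a small tridiagonal matrix in a Krylov subspace. The test matrix is a random stand-in, and the helper name `lanczos_ground_energy` is hypothetical.

```python
import numpy as np

def lanczos_ground_energy(H, n_iter, seed=0):
    """Estimate the lowest eigenvalue of a real symmetric matrix H."""
    rng = np.random.default_rng(seed)
    v = rng.normal(size=H.shape[0])
    basis = [v / np.linalg.norm(v)]
    alphas, betas = [], []
    for j in range(n_iter):
        w = H @ basis[j]
        alphas.append(basis[j] @ w)
        # Full reorthogonalization against every stored Krylov vector
        # (simple and stable, affordable only for small problems).
        for u in basis:
            w -= (u @ w) * u
        beta = np.linalg.norm(w)
        if beta < 1e-12 or j == n_iter - 1:
            break
        betas.append(beta)
        basis.append(w / beta)
    # Tridiagonal matrix: alphas on the diagonal, betas next to it.
    T = np.diag(alphas) + np.diag(betas, 1) + np.diag(betas, -1)
    return np.linalg.eigvalsh(T)[0]

# Toy symmetric "Hamiltonian" (random stand-in, not a nuclear model).
rng = np.random.default_rng(1)
n = 40
B = rng.normal(size=(n, n))
H = np.diag(np.arange(n, dtype=float)) + 0.1 * (B + B.T)

e_exact = np.linalg.eigvalsh(H)[0]
e_short = lanczos_ground_energy(H, n_iter=15)
e_full = lanczos_ground_energy(H, n_iter=n)
print(e_exact, e_short, e_full)
```

With as many iterations as the matrix dimension, the estimate matches direct diagonalization up to round-off. With fewer iterations it is an upper bound that typically converges quickly for the lowest state, which is why the method suits benchmarks on small systems.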

Benchmarking with 3H and 6He

The paper uses two beta-decay showcase stars to test the method: tritium, 3H, decaying to 3He, and helium-6, 6He, decaying to 6Li. The first is a well-trodden proving ground; the second is a more challenging, halo-like nucleus where the weak transitions probe how the method handles extended spatial structures and delicate correlations. In both cases, the axial current is built up to N3LO in a chiral expansion, with the two-body (and in some sense, many-body) current contributions treated alongside the one-body pieces that have traditionally dominated weak transitions.

For 3H beta decay they first determine the three-nucleon low-energy constants cD and cE by fitting to two observables: the 3H binding energy and the Gamow-Teller matrix element itself. Their approach is careful: they use both a Lanczos diagonalization, feasible for this small system, to obtain essentially exact ground-state wave functions, and a two-channel Monte Carlo calculation that scales to larger systems. The experimental Gamow-Teller value is GTexp = 1.6473(41). When they plug in the lattice wave functions and the chiral currents, the results agree closely across methods, lending confidence to the cD, cE extraction and to the overall approach.
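The logic of fixing two constants from two observables can be sketched as a small linear solve. Everything numerical below apart from the two targets is an invented placeholder: the baseline values and the sensitivities to cD and cE are not from the paper, and the assumption that both observables respond linearly to the constants (as they would in a first-order perturbative treatment) is a simplification made here for illustration.

```python
import numpy as np

# Targets: triton ground-state energy (MeV, from its roughly 8.48 MeV
# binding energy) and the experimental Gamow-Teller value quoted above.
E_TARGET = -8.482
GT_TARGET = 1.6473

# PLACEHOLDER inputs, invented for illustration only (not from the paper):
# values at cD = cE = 0 and assumed linear sensitivities to each constant.
E_BASE, dE_dcD, dE_dcE = -8.10, -0.05, 0.60
GT_BASE, dGT_dcD, dGT_dcE = 1.62, 0.02, 0.0

# Linear model:  observable = base + (sensitivity to cD)*cD + (to cE)*cE
A = np.array([[dE_dcD, dE_dcE],
              [dGT_dcD, dGT_dcE]])
b = np.array([E_TARGET - E_BASE, GT_TARGET - GT_BASE])

cD, cE = np.linalg.solve(A, b)
print(f"cD = {cD:.3f}, cE = {cE:.3f}  (toy numbers, not physical results)")
```

In this toy, the Gamow-Teller value depends only on cD, so it pins down cD first, and the binding energy then fixes cE. The resulting numbers carry no physical meaning; only the structure of the calibration is the point.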

One striking outcome concerns how the different orders of the axial current contribute. The leading-order (LO) current is the workhorse, providing the bulk of the transition strength. Higher-order pieces (NNLO and N3LO, including two-body axial currents and one-pion-exchange modifications) make corrections that are small in magnitude, often under 5% of the LO term. That’s reassuringly modest, but it’s not a trivial check: it confirms that the chiral EFT power counting is doing its job inside a discretized, many-body nucleus. It also bears on a long-standing puzzle in nuclear beta decay, the apparent shift of the axial coupling gA in the nuclear medium. In this calculation, the full reduced Gamow-Teller matrix element (RGTME) lands in the right ballpark, and the breakdown by order helps illuminate where the theory is strongest and where it remains sensitive to details of the currents and the regulator choices.

The 6He case pushes the method further into the realm of weakly bound systems. The lattice energies and charge radii for 6He and its decay daughter, 6Li, show reasonable agreement with experiment, a nontrivial check given the halo character of 6He. The beta-decay matrix elements for 6He to 6Li again show LO dominance with small but meaningful corrections from higher-order currents. Importantly, the lattice results are consistent with the experimental data, supporting the claim that the NLEFT framework can handle weak processes beyond the three-nucleon system and is a credible starting point for medium-mass nuclei. In their analysis, the authors emphasize that the first-order perturbative corrections to LO remain modest, suggesting that the truncation errors stay under control even at this relatively modest computational cost.

Two technical notes deserve emphasis. First, the one-pion-exchange (OPE) current is implemented differently in their lattice setup than in some continuum treatments, so the sign of the N3LO OPE contribution may appear opposite to what is found elsewhere. The authors attribute this to their different CS (configuration-space) versus MS (momentum-space) handling on the lattice, where the exact mapping between the two is numerically clean. Second, the determination of cD and cE, the three-nucleon LECs, hinges on the 3H data; this is a sensible way to feed information from a three-body system into the three-body sector of the theory, and it provides a pathway to calibrate the theory before attacking heavier nuclei. The overall message from this benchmarking is encouraging: a carefully calibrated NLEFT setup can reproduce known weak rates and radii in the lightest nuclei and their slightly heavier neighbors while remaining systematically improvable.

Why This Matters Now

The significance of this work goes beyond reproducing a handful of beta decays. It demonstrates a coherent, first-principles route to weak processes in nuclei that does not rely on purely phenomenological tweaks to fit data. That is a big deal for several reasons. First, beta-decay tests are one of the front lines where new physics could reveal itself. If we can predict decay rates and Gamow-Teller strengths with quantified uncertainties, then deviations from experiment could signal physics beyond the Standard Model, or at least point to subtle issues in our understanding of nuclear many-body dynamics. Second, the results touch a long-standing issue in nuclear physics known as gA quenching: the empirical observation that the axial coupling in nuclei appears reduced relative to its free-nucleon value. A fully consistent, ab initio calculation of axial currents in nuclei is the kind of tool that can help disentangle genuine many-body dynamics from artifacts of the model space or the regularization scheme.

Beyond the interpretive payoff, there is a practical one. Lattice EFT, as demonstrated here, is not a one-off stunt for two light systems. With a disciplined approach to SU(4) symmetry, regulator choices, and higher-order currents, the method is designed to scale toward medium-mass nuclei. The authors spotlight the possibility that the full beta-decay landscape, a topic that intersects nuclear structure, astrophysical processes like supernova nucleosynthesis, and fundamental tests of weak interactions, could eventually be mapped with controlled errors across wider swaths of the nuclear chart. In other words, this is a foundational advance that could turn the weak core of the nucleus into a calculable, testable laboratory rather than a field of difficult-to-interpret data points.

There’s also a deeper, almost philosophical payoff: a bridge from the quarks and gluons of QCD to the emergent, complex behavior of nuclei, all through a single, shared framework. The study sits at the intersection of high-performance computation and high-energy theory, aligning with a broader trend in physics toward unified, ab initio descriptions of matter. The authors themselves point to the ongoing work with newer, higher-precision NNLO/N3LO interactions and to extending the approach to more nuclei and more weak processes. If those efforts come to fruition, we could be witnessing a steadily tightening loop: theory guiding experiment, experiment informing the theory, and a more predictive view of the weak force in the dense, wonderfully complicated world inside the atomic nucleus.

The work, conducted by researchers at Peking University, stands as a testament to what modern computational nuclear physics can accomplish when careful mathematics meets patient engineering of algorithms. It also serves as a reminder that the most stubborn problems in physics are often solved not by one super-idea, but by a chorus of pragmatic innovations: a symmetry-driven anchor to calm a noisy Monte Carlo, a consistent EFT-derived current to tie the nucleus to the weak signal, and a disciplined calibration against light nuclei before venturing into the more complicated territories of heavier systems. In that sense, this is not just a study of beta decay; it’s a blueprint for how to build trustworthy, transparent, and ultimately useful theories of the many-body quantum world.

Lead researchers and institutions: The study is credited to a team at Peking University in Beijing, with lead authors Teng Wang and Xu Feng and co-author Bing-Nan Lu, among others, advancing the nuclear lattice EFT program with this beta-decay focus. The work exemplifies how university-driven, collaborative science can push the boundaries of ab initio nuclear theory and set the stage for future explorations of weak processes in nuclei across the chart of nuclides.