Sensor data pours in from traffic cameras, weather stations, drones, and countless other listening devices. The challenge is not just to collect it, but to extract the signal that matters from a chorus of noise, distortion, and missing pieces. In the world of nonlinear filtering, a family of methods known as particle filters has become a trusted workhorse for that job. Picture a crowd of little detectives, each particle holding a guess about the current state, all marching through a probabilistic landscape. The trick is guiding them so that together they tell you the right story about X_t, the hidden state you care about, given every noisy observation Z_s up to time t.

One popular flavor is the feedback particle filter, or FPF, which fuses the calm logic of a Kalman filter with the flexible, ensemble-based approach of particle filters. In the FPF, every particle is nudged by a feedback gain as new observations arrive. The catch is that this gain function, a matrix-valued object K that ties the particles to what you just observed, must satisfy a Poisson equation that depends on the unknown posterior density. Because the true posterior is unknown, K is hard to pin down, and that difficulty has been the main obstacle to running the method in real time or in more complex scenarios.

The paper by Ruoyu Wang, Huimin Miao, and Xue Luo from Beihang University in Beijing, in collaboration with Henan Polytechnic University, takes a strikingly concrete swing at this problem. Led by Wang, with Luo as the corresponding author, the team develops a decomposition approach that splits the stubborn Poisson equation into two pieces that can each be solved exactly, at least when the observation function is a polynomial. The idea is elegant in its restraint: replace the elusive posterior with a Gaussian mixture, then follow a two-step path to an explicit gain. The payoff is meaningful: in the one-dimensional setting, the method’s cost scales linearly with both the number of particles and the degree of the polynomial describing the observation, a big win over many existing approaches.

Why does this matter beyond the math papers and the conference slides? In a world where machines are increasingly perceptual (tracking cars, steering drones, predicting weather, interpreting radar echoes), the ability to run accurate nonlinear filters quickly can widen the envelope of what’s possible in real time. The authors don’t just claim a faster method; they show that when the gain function is computed with their decomposition, the resulting filter is more accurate than conventional particle filters and kernel-based gain approximations while also slashing compute time. It’s a reminder that sometimes the bottleneck isn’t data or computation alone, but how we pose and solve the core equations that connect observations to hidden states.

The heart of the feedback particle filter

To appreciate the breakthrough, we need to linger on what the feedback particle filter is doing under the hood. Imagine you’re watching a scene unfold with a swarm of agents, the particles, each holding its own guess about the actual state X_t. These guesses evolve according to a stochastic rule that gently steers each particle toward what you just observed. The steering knob is the gain function K. In the FPF, K is not a fixed knob but a field: for each state value x and time t, it tells a particle how strongly to react to the latest observation Z_t.

K is defined by a Poisson equation that depends on the current posterior density p*_t, and this is where the challenge lives. In general you don’t know p*_t, so you don’t have a closed form for K. The literature offers several approximations: a simple constant gain, a Galerkin-type approach with pre-chosen basis functions, or kernel-based schemes that learn K on the fly from the particles. Each trades accuracy against cost in different ways, and the curse of dimensionality tends to rear its head as the state space grows.
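The simplest of these approximations, the constant gain, replaces the field K by a single number. In the scalar case with unit observation-noise variance it is commonly written as the empirical covariance between the particles and h evaluated at them, K ≈ (1/N) Σ_i (h(X^i) − ĥ) X^i. Integrating the Poisson equation by parts shows that this quantity is exactly the average of the true gain under p*_t, which is why it makes a natural baseline. A minimal sketch, with a hypothetical function name:

```python
import numpy as np

def constant_gain(x, h):
    """Constant-gain approximation of the scalar FPF gain (unit observation noise).

    Returns K ~ (1/N) * sum_i (h(X^i) - h_hat) * X^i, the empirical covariance
    between the particle positions and h at those positions.
    """
    x = np.asarray(x, dtype=float)
    hx = h(x)
    return float(np.mean((hx - hx.mean()) * x))
```

For h(x) = x this reduces to the (biased) sample variance of the particles, which matches the Kalman gain in the linear-Gaussian case; for nonlinear h it discards all spatial variation of K, which is the accuracy the richer methods try to recover.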

The authors acknowledge this landscape and set out a different route. They adopt a practical assumption, which they call (As): the observation function h is a polynomial in x. If h is not a polynomial, you can approximate it with one. With that in place, they propose a two-step decomposition that converts the original equation into two pieces that can be solved exactly. They approximate the posterior density p*_t by a Gaussian mixture p_t^{Np,Σ}, a tractable surrogate for the true density. The first piece does the bulk of the work using Hermite polynomials, a classical tool in stochastic analysis, and the second captures what remains through an auxiliary function that can be integrated in closed form. The result is a gain function K that can be written in a compact, explicit form.

Crucially, the two-step decomposition makes each subproblem exactly solvable under the boundary behavior the authors impose. The boundary conditions are chosen so that the solution behaves well at infinity, a practical move that keeps the math well-posed while staying faithful to how filtering behaves in practice. The upshot is a gain function that can be computed at a cost compatible with real-time use, rather than through an opaque iterative procedure whose cost balloons with dimension or particle count.

A decomposition that changes the game

Let’s unwind the two-step decomposition in more concrete terms, without drowning in symbols. Start with the particle ensemble representing p*_t and approximate it by a Gaussian mixture p_t^{Np,Σ}, much as one might represent a complex distribution with a handful of Gaussian bumps. The authors then split the Poisson equation for the gain into two subproblems. The first is designed so that it can be solved exactly by a Galerkin method with Hermite polynomials. The second is crafted to be solvable in closed form once you pick a constant Ci that ties the two pieces together. Choosing Ci correctly is the crux: it places the first equation in a function space where Hermite polynomials do the heavy lifting, and it leaves the second with an integral that can be evaluated in closed form.
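For readers who want the equations, here is one way to write the two-step split in the scalar case. It is a reconstruction under stated assumptions, not the paper’s own notation: unit observation-noise variance, a common variance Σ_t for every mixture component, and Ci taken as the mean of h under component i. Here p_i is the Gaussian centered on particle X^i_t, ĥ is the posterior mean of h, and Φ is the standard normal CDF.

```latex
\begin{aligned}
&p(x) = \frac{1}{N_p}\sum_{i=1}^{N_p} p_i(x), \qquad p_i(x) = \mathcal{N}\bigl(x;\, X^i_t,\, \Sigma_t\bigr),\\
&\frac{d}{dx}\bigl(p\,K\bigr) = -\bigl(h - \hat h\bigr)\,p, \qquad \hat h = \int h\,p\,dx = \frac{1}{N_p}\sum_{i=1}^{N_p} C_i,\\
&\text{(1)}\quad \frac{d}{dx}\bigl(p_i\,K_i\bigr) = -\bigl(h - C_i\bigr)\,p_i, \qquad C_i = \int h\,p_i\,dx,\\
&\text{(2)}\quad \frac{d}{dx}\bigl(p\,\tilde K\bigr) = -\frac{1}{N_p}\sum_{i=1}^{N_p}\bigl(C_i - \hat h\bigr)\,p_i
  \;\Longrightarrow\; p\,\tilde K = -\frac{1}{N_p}\sum_{i=1}^{N_p}\bigl(C_i - \hat h\bigr)\,\Phi\!\Bigl(\frac{x - X^i_t}{\sqrt{\Sigma_t}}\Bigr),\\
&K = \frac{1}{p}\,\frac{1}{N_p}\sum_{i=1}^{N_p} p_i\,K_i \;+\; \tilde K.
\end{aligned}
```

Averaging (1) over i and adding (2) recovers the original equation, because the right-hand sides sum to −(h − ĥ)p. Since ĥ is the average of the Ci, the right-hand side of (2) integrates to zero, so p·K̃ vanishes as x → ±∞, which is the boundary behavior the decomposition relies on.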

For the one-dimensional case, the authors work out explicit formulas. They show how to express h as a polynomial in the Hermite basis, use a backward recursion to determine the Hermite coefficients that define each component Ki, and then tie everything back to the gain K through a formula that looks almost like a weighted average of local corrections. The constants Ci are computed from those Hermite coefficients in a way that guarantees the first subproblem is exactly solvable within the Hermite function space. The boundary condition then does the rest, ensuring the solution behaves properly as x → ±∞.
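A minimal Python sketch of the scalar construction makes the algebra concrete. It assumes unit observation noise, a common component standard deviation sigma for every particle, and probabilists’ Hermite polynomials He_k. For a Gaussian component N(mu, sigma²), the operator behind the first subproblem has the He_k((x − mu)/sigma) as eigenfunctions. So if h(mu + sigma·z) = Σ_k a_k He_k(z), the exact component gain is K_i(x) = sigma · Σ_{k≥1} a_k He_{k−1}(z) with Ci = a_0, and the second subproblem reduces to a sum of Gaussian CDFs. The function names are hypothetical; `poly2herme` and `hermeval` are real functions in `numpy.polynomial.hermite_e`. This is not the authors’ code. Because it evaluates every mixture component at every query point, it does not reproduce the O(p·Np) cost reported in the paper.

```python
import math
import numpy as np
from numpy.polynomial import hermite_e as He
from numpy.polynomial import polynomial as P

_erf = np.vectorize(math.erf)

def hermite_coeffs(h_coef, mu, sigma):
    """HermiteE coefficients a_k such that h(mu + sigma*z) = sum_k a_k He_k(z).

    h_coef holds the monomial coefficients of h, lowest degree first.
    """
    g = np.array([h_coef[-1]], dtype=float)
    for c in reversed(h_coef[:-1]):            # Horner: g <- g*(mu + sigma*z) + c
        g = P.polyadd(P.polymul(g, [mu, sigma]), [c])
    return He.poly2herme(g)

def component_gain(x, h_coef, mu, sigma):
    """First subproblem for p_i = N(mu, sigma^2): returns (K_i(x), C_i).

    K_i(x) = sigma * sum_{k>=1} a_k He_{k-1}(z), z = (x - mu)/sigma, solves
    (p_i K_i)' = -(h - C_i) p_i exactly, with C_i = a_0 = E_{p_i}[h].
    """
    a = hermite_coeffs(h_coef, mu, sigma)
    z = (np.asarray(x, dtype=float) - mu) / sigma
    if len(a) == 1:                             # constant h: no correction needed
        return np.zeros_like(z), a[0]
    return sigma * He.hermeval(z, a[1:]), a[0]

def mixture_gain(x, particles, h_coef, sigma):
    """Gain K(x) for p = (1/N) sum_i N(X^i, sigma^2), assembled from both subproblems."""
    x = np.asarray(x, dtype=float)
    parts = []
    for mu in particles:
        k_i, c_i = component_gain(x, h_coef, mu, sigma)
        z = (x - mu) / sigma
        pdf = np.exp(-0.5 * z**2) / (sigma * math.sqrt(2.0 * math.pi))
        cdf = 0.5 * (1.0 + _erf(z / math.sqrt(2.0)))
        parts.append((k_i, c_i, pdf, cdf))
    h_hat = np.mean([c for _, c, _, _ in parts])  # h_hat = average of the C_i
    p = sum(pdf for _, _, pdf, _ in parts) / len(parts)
    # p*K = (1/N) sum_i [p_i K_i - (C_i - h_hat) Phi_i]; the second term is the
    # closed-form solution of the second subproblem.
    pk = sum(pdf * k_i - (c_i - h_hat) * cdf for k_i, c_i, pdf, cdf in parts) / len(parts)
    return pk / p
```

As a sanity check, for h(x) = x and a single particle the sketch returns K = sigma², the Kalman gain for unit observation noise. For polynomial h it agrees with the direct one-dimensional formula K(x) = −(1/p(x)) ∫_{−∞}^{x} (h − ĥ) p dy evaluated by quadrature.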

Replacing the intractable density p*_t with a Gaussian mixture is the move that makes all of this possible. In the scalar case it is especially tractable, and the authors show that the resulting gain K inherits a clean, explicit structure. They demonstrate that the forward evolution of each particle, driven by this K and an accompanying drift term u, remains well behaved and faithful to the underlying filtering problem. The boundary condition and the decomposition work together to produce a solution that is both accurate and computationally lean.
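To see where K and u enter, here is a minimal Euler-Maruyama step for a scalar FPF. It assumes the standard FPF equations from the original feedback particle filter literature, which this article does not spell out. In Itô form with unit observation-noise variance, the update is dX^i = a(X^i) dt + σ_B dB^i + K(X^i) dZ_t + u(X^i) dt, with u = −½K(h + ĥ) + ½K K′, and ĥ is estimated by the particle average of h. The paper’s own discretization may differ. The names `fpf_step`, `drift`, `gain`, and `sigma_b` are hypothetical.

```python
import numpy as np

def fpf_step(x, dz, dt, drift, h, gain, rng, sigma_b=1.0):
    """One Euler-Maruyama step of a scalar feedback particle filter (Ito form).

    Assumed model (unit observation-noise variance):
        dX^i = a(X^i) dt + sigma_b dB^i + K(X^i) dZ + u(X^i) dt
        u    = -0.5 * K * (h(X^i) + h_hat) + 0.5 * K * K'
    x    : particle positions, shape (N,)
    dz   : observation increment Z_{t+dt} - Z_t
    gain : callable returning (K, dK/dx) evaluated at the particles
    """
    x = np.asarray(x, dtype=float)
    hx = h(x)
    h_hat = hx.mean()                                 # particle estimate of the posterior mean of h
    K, dK = gain(x)
    u = -0.5 * K * (hx + h_hat) + 0.5 * K * dK        # drift correction term u
    dB = rng.normal(0.0, np.sqrt(dt), size=x.shape)   # process-noise increments
    return x + drift(x) * dt + sigma_b * dB + K * dz + u * dt
```

Any gain approximation (constant, kernel-based, or the decomposition described here) can be passed as `gain` without changing anything else in the loop.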

The paper also provides a careful analysis of computational cost. In the one-dimensional setting, the method scales linearly with the polynomial degree p and with the number of particles Np, i.e., O(p·Np). That contrasts sharply with some existing approaches that scale quadratically in Np. In practice, you can run more particles or tackle higher-order observation models without a prohibitive price in CPU time. The tradeoff is that the decomposition hinges on the polynomial assumption and on the quality of the Gaussian mixture approximation, but the authors show, both in theory and in numerical experiments, that the approach can outpace alternatives in accuracy and speed.

Moreover, the authors don’t stop at the scalar case. They outline how the same decomposition principle could extend to higher dimensions, though exact analytic solutions for the second subproblem in multiple dimensions remain a work in progress. They sketch a path forward that would pair the Hermite-based spectral method with multivariate orthogonal polynomials for the first subproblem and handle more intricate boundary considerations for the second. A breakthrough in one dimension is often the seed for bigger, more ambitious generalizations.

Why this matters for sensors, AI, and the real world

At its core, this work is about making a powerful idea practical rather than abstract. The feedback particle filter sits at the intersection of control theory and probabilistic inference. In many systems, you need to keep tabs on a hidden state that you can never observe directly, such as the position and velocity of a target, the wind field over a storm, or the orientation of a drone, all while your sensors provide only imperfect glimpses. The gain function K is what translates those glimpses into sensible moves for each particle, and thus into a trustworthy estimate of the hidden state. If calculating K is expensive or unstable, the whole filtering pipeline becomes fragile or slow. This new decomposition approach targets that exact choke point.

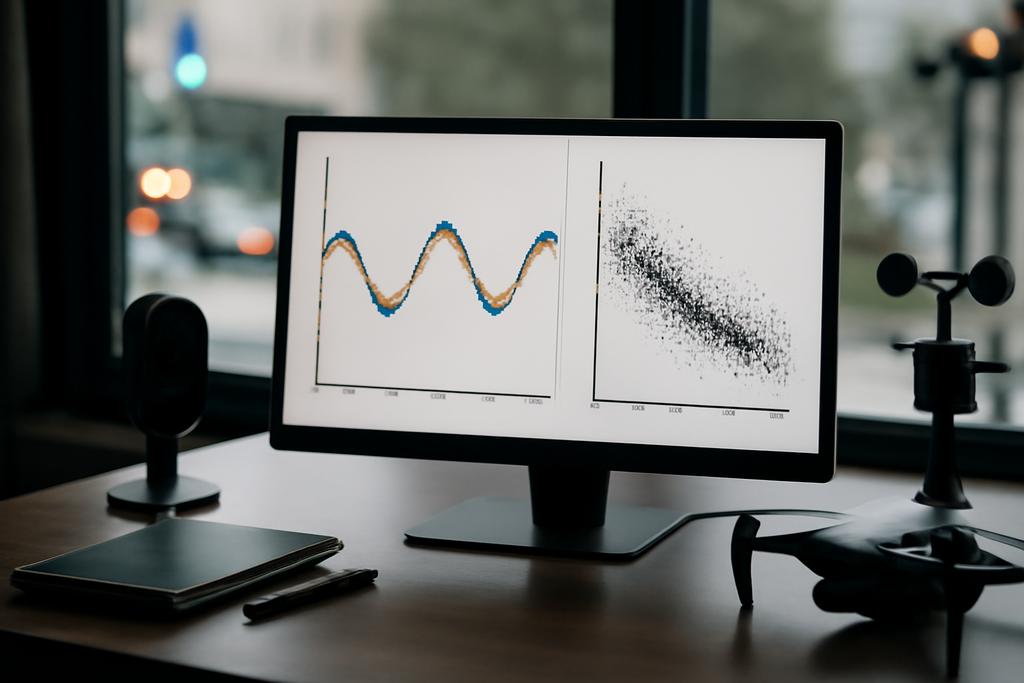

The immediate practical implications are tangible. In the authors’ numerical benchmarks, the decomposition method yields lower mean square errors than a standard particle filter and outperforms kernel-based gain approximations under the same computational budget. In their most demanding scenario, it achieved a substantial reduction in both error and CPU time compared with the kernel-based approach and a traditional particle filter. The message is not that the method will solve every filtering problem overnight, but that it adds a new lever: you can filter more accurately with less computational strain by solving two precisely crafted subproblems rather than wrestling with a single intractable equation.

Beyond the numbers, there is a larger frame to consider. The team presents its contribution as a bridge between two streams of filtering research: the classical role of Poisson equations in nonlinear filtering, and the modern, data-driven search for efficient, scalable approximations. The decomposition technique leans on a clean mathematical idea, decompose to solve, while staying pragmatic about real-world constraints such as accuracy requirements and runtime limits. That blend of rigor and practicality is where a lot of engineering progress lives, and it’s the kind of work that can influence how next-generation autonomous systems reason about a noisy world.

Speaking to the broader arc of AI and sensing, the paper hints at something more transformative: if hard probabilistic updates can be recast as a pair of tractable subproblems, we may unlock better filters for higher-dimensional state spaces. The current one-dimensional results already point toward a future where real-time, high-fidelity filters could power more capable navigation, tracking, and interpretation in environments rife with uncertainty. This is not about a single gadget getting faster; it’s about expanding the toolkit for how machines perceive, understand, and react to the world.

From a human perspective, the study also shows that progress in AI and robotics often comes from a patient return to core mathematical ideas, paired with a disciplined eye for implementation. The Beihang University team of Wang, Miao, and Luo has given us a blueprint that starts with a classical equation and ends with a practical, efficient algorithm. It’s the kind of work that makes clear why a field like nonlinear filtering remains both technically demanding and deeply relevant to everyday technologies.

As for what lies next, the authors are candid about the path forward. Extending the exact decomposition to multidimensional observation models remains an active area of research. The hope is that a robust, high-dimensional version of the method could offer linear or near-linear scaling in both the number of particles and the polynomial degree, even in more complex state spaces. If such an extension lands, it could widen the set of real-world filtering problems that can be tackled with high accuracy in real time, from autonomous vehicles weaving through traffic to radar systems tracking moving targets in cluttered skies.

In the end, progress in engineering often hides in the gaps between big ideas and small details. The two-step decomposition does not rewrite the physics; it reframes the math in a way that is friendlier to computers and faster to run. It is a practical sort of elegance, like a tight seam in a well-tailored suit: visible only if you look closely, but unmistakably impactful when you do. The study is a notable milestone in the ongoing quest to make nonlinear filtering both smarter and more practical for real devices that must think on the fly.

If you are curious whom to credit for this thoughtful piece of progress, the research comes from Beihang University in Beijing, in collaboration with Henan Polytechnic University. The lead author is Ruoyu Wang, with Huimin Miao and Xue Luo also contributing, and Luo serving as the corresponding author. Their work shows how good ideas often grow in collaborative ecosystems where mathematical curiosity meets practical necessity.