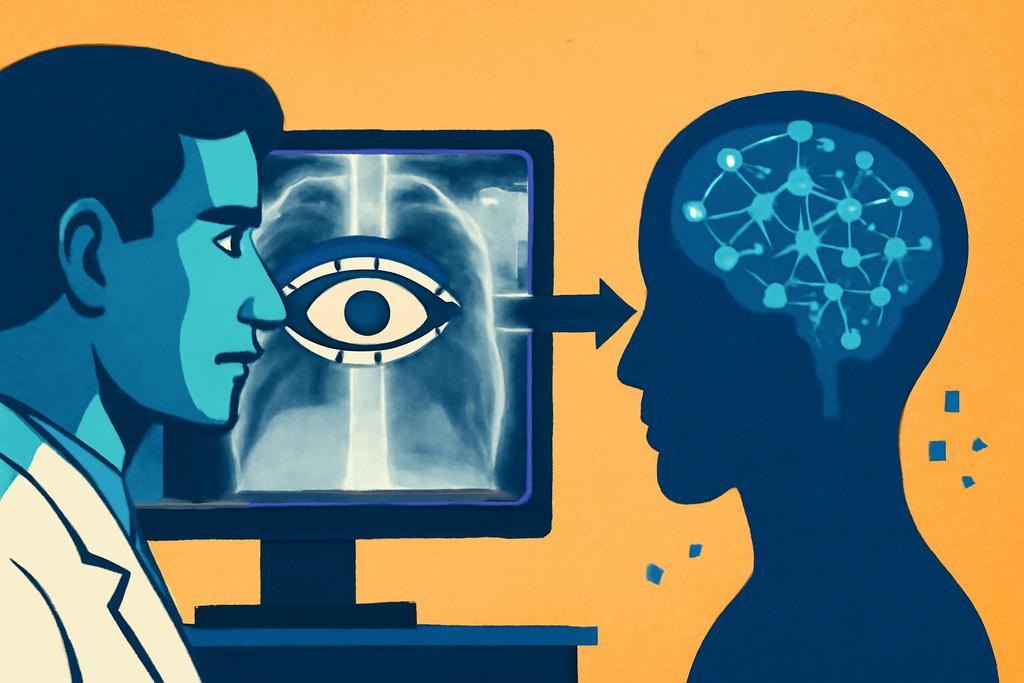

Imagine a world where artificial intelligence doesn’t just diagnose illness from medical images, but anticipates the thought processes of the doctor reviewing them. This isn’t science fiction; a recent study from researchers at the University of Arkansas, University of Liverpool, University of Houston, and MD Anderson Cancer Center is opening a new frontier in AI-assisted healthcare. Their work focuses not on *what* a radiologist sees in a chest X-ray, but on *how* and *why* they look at it: the intention behind their gaze.

Decoding the Doctor’s Gaze

For years, AI has focused on mimicking the accuracy of expert radiologists. But this new approach, developed by Trong Thang Pham and colleagues, tackles a different challenge entirely: understanding the decision-making process itself. The researchers built a deep learning model called RadGazeIntent that analyzes the sequence of a radiologist’s eye movements, essentially tracing their visual journey across a chest X-ray. This isn’t about simply identifying patterns in eye movements; it’s about interpreting the *intention* behind each glance, each pause, each rapid shift of focus.

The model’s key innovation lies in its ability to differentiate between distinct visual search strategies. The researchers categorized these strategies into three types: a systematic sequential search (RadSeq), where the radiologist methodically checks specific areas based on a mental checklist; an uncertainty-driven exploration (RadExplore), where the radiologist explores opportunistically, responding to visual cues as they emerge; and a hybrid approach (RadHybrid), combining both systematic examination and exploratory investigation. RadGazeIntent can not only distinguish between these strategies but also predict with surprising accuracy what a radiologist is looking for at any given moment during their image analysis.
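To make the idea of an intention-labeled gaze sequence more concrete, here is a minimal Python sketch. It is not the researchers' code or data format: the `Fixation` fields, the `intent_segments` helper, and the example finding names are all hypothetical, chosen only to illustrate what "each glance carries an intention" could look like as data. It also assumes, purely for illustration, that a checklist-style search shows up as long runs of fixations sharing one label, while a more exploratory search revisits labels.

```python
from dataclasses import dataclass
from itertools import groupby


@dataclass(frozen=True)
class Fixation:
    """One gaze fixation on the X-ray (hypothetical schema, not from the study)."""
    x: float            # horizontal position, normalized to [0, 1]
    y: float            # vertical position, normalized to [0, 1]
    duration_ms: float  # how long the gaze rested here
    intent: str         # illustrative label for what the reader is looking for


def intent_segments(scanpath):
    """Collapse consecutive fixations with the same intent label.

    Returns a list of (intent, fixation_count, total_duration_ms) tuples,
    one per uninterrupted run of the same label.
    """
    segments = []
    for intent, group in groupby(scanpath, key=lambda f: f.intent):
        run = list(group)
        segments.append((intent, len(run), sum(f.duration_ms for f in run)))
    return segments


# A made-up scanpath: the reader checks one finding, glances at another,
# then returns to the first.
scanpath = [
    Fixation(0.30, 0.40, 220.0, "cardiomegaly"),
    Fixation(0.35, 0.45, 310.0, "cardiomegaly"),
    Fixation(0.70, 0.60, 180.0, "pleural effusion"),
    Fixation(0.32, 0.42, 250.0, "cardiomegaly"),
]

segments = intent_segments(scanpath)
for intent, count, total_ms in segments:
    print(f"{intent}: {count} fixation(s), {total_ms:.0f} ms")
```

In this toy scanpath the first label appears in two separate runs, which under the assumption above would hint at a hybrid or exploratory pattern rather than a strictly sequential one. How the actual model separates the three strategies is not described here.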

Beyond Mimicry: A Deeper Understanding

Previous AI models often relied on creating “digital twins” of radiologists, essentially mimicking their visual search patterns without understanding the underlying reasoning. This new approach moves beyond simple imitation to develop a more nuanced understanding of the cognitive process. Think of it like the difference between simply copying a painting versus understanding the artist’s brushstrokes and the emotions behind them. RadGazeIntent aims for that deeper level of comprehension.

The implications are profound. Rather than simply aiding in diagnosis, this technology could offer real-time feedback to radiologists, highlighting potential areas of oversight or suggesting alternative investigative pathways. It could revolutionize medical training by providing immediate and individualized feedback on visual search strategies, accelerating the learning process. It could even help researchers understand the cognitive processes involved in medical image interpretation, potentially leading to insights that benefit both AI development and human diagnostic skills.

The Challenges of Intention

The research also acknowledges the inherent challenges in interpreting intention. Intention, after all, is an internal cognitive state that is not directly observable. The researchers cleverly sidestepped this limitation by building their datasets based on observable behaviors, inferring intention from the visual search strategies employed. While this approach is ingenious, it’s important to understand that the model’s interpretations are based on correlations, not direct access to a radiologist’s thoughts.

Furthermore, the study focused on chest X-rays, a specific imaging modality. While the principles underlying this approach could generalize to other medical imaging modalities, future work needs to investigate this further. Each modality has its own unique visual characteristics, and radiologists’ visual strategies may differ accordingly.

A Collaborative Future

The team’s research isn’t about replacing human radiologists but empowering them. RadGazeIntent offers the potential for a collaborative future in healthcare, where AI acts as a sophisticated and insightful partner, assisting in diagnosis, refining training, and even enhancing the fundamental understanding of human cognitive processes in medicine. The implications extend beyond radiology to other fields that rely heavily on visual expertise, including surgery, pathology, and education.

This research, a fascinating glimpse into the future of AI-assisted medicine, demonstrates that the most powerful applications of AI may not lie in simply mimicking human abilities but in understanding the very essence of human expertise and intelligence.