The Quiet Revolution in AI Chips

Large language models (LLMs), the brains behind conversational AI like ChatGPT, are getting bigger, more powerful, and increasingly thirsty for energy. Think of it like this: if early LLMs were gas-guzzling cars, today’s behemoths are a fleet of jumbo jets that constantly need refueling. This massive energy consumption is a major hurdle to the widespread adoption of powerful AI, both in cost and in environmental impact. Researchers at the Georgia Institute of Technology, led by Shimeng Yu and Yingyan (Celine) Lin, have developed a radical new chip architecture that promises to dramatically reduce the power demands of these AI giants.

The Problem with Current AI Chips

The core challenge lies in the architecture of the models themselves. Many of today’s largest LLMs are built using an approach called Mixture of Experts (MoE), where different parts of the model (‘experts’) handle different inputs. The problem is that these experts are activated on demand: for each piece of text, only a few experts are selected, so some are busy most of the time while others sit idle. This sporadic activation makes it very difficult to keep a chip’s compute units fully occupied. It’s like having a huge orchestra where only a handful of musicians play at a time – a tremendous waste of talent and resources.
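To make the uneven workload concrete, here is a minimal sketch of the "pick a few experts per token" step that MoE models commonly use (top-k routing). It is not the Georgia Tech team's code: the random gate scores, the per-expert bias, and the function names `route` and `expert_load` are all assumptions chosen for illustration.

```python
import math
import random
from collections import Counter

def softmax(xs):
    """Turn raw gate scores into probabilities that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route(gate_logits, k=2):
    """Return the k highest-scoring (expert_id, score) pairs for one token."""
    scores = softmax(gate_logits)
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [(i, scores[i]) for i in ranked[:k]]

def expert_load(num_tokens=1000, num_experts=8, k=2, seed=0):
    """Count how many tokens each expert receives under random gate scores."""
    rng = random.Random(seed)
    # Hypothetical per-expert bias, standing in for a trained router's preferences.
    bias = [rng.gauss(0.0, 1.0) for _ in range(num_experts)]
    load = Counter({e: 0 for e in range(num_experts)})
    for _ in range(num_tokens):
        logits = [b + rng.gauss(0.0, 1.0) for b in bias]
        for expert_id, _score in route(logits, k):
            load[expert_id] += 1
    return load

if __name__ == "__main__":
    load = expert_load()
    for expert_id in sorted(load):
        print(f"expert {expert_id}: {load[expert_id]} tokens")
```

Running it prints a per-expert token count. With biased gate scores like these, the counts are typically lopsided, which is the kind of imbalance that leaves hardware underused.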

Current attempts to improve efficiency have fallen short. Some have used analog computing, which sacrifices accuracy for energy savings. Others try to move the computation closer to the memory, but this creates new bottlenecks. It’s like trying to solve traffic congestion by making each car drive itself: a good idea in theory, but the execution is fraught with challenges.

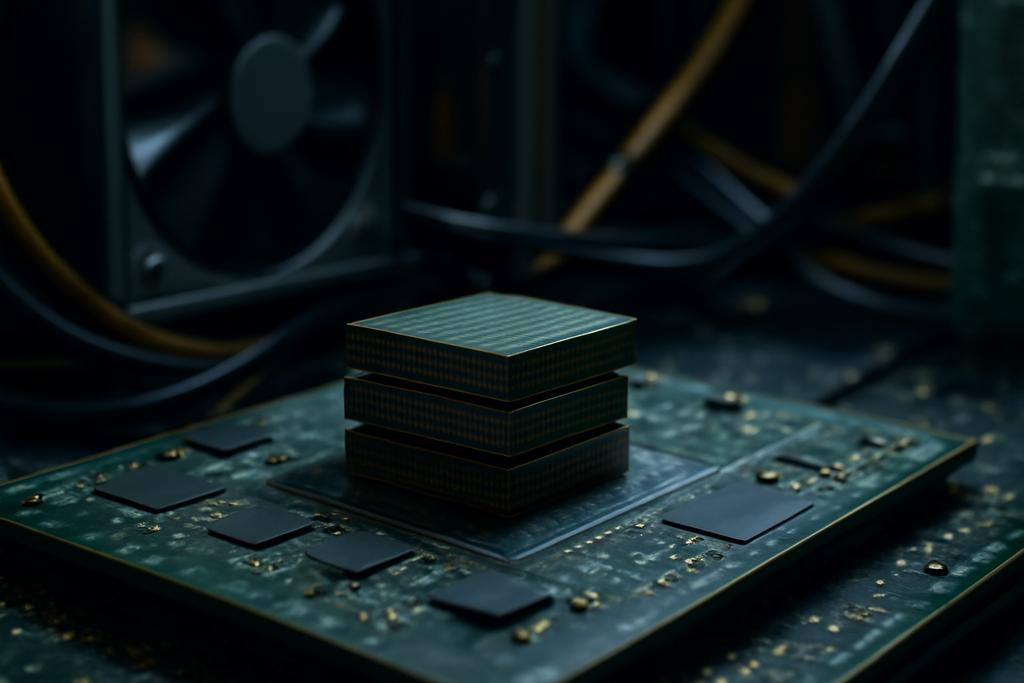

The 3D Solution: A Stacked Symphony

The Georgia Tech team’s solution is elegant in its simplicity. They’ve designed a three-dimensional chip architecture, leveraging advancements in vertical chip stacking. Instead of everything being spread out on a single chip, they’ve created a vertical stack: compute units at the bottom, high-bandwidth memory (HBM) in the middle, and even faster memory (V-Cache) at the top. This three-dimensional approach dramatically improves data transfer speeds, reducing the delays and power consumption associated with moving information around.

Imagine a well-organized orchestra, with musicians seated strategically according to their roles. The 3D chip does something similar. By intelligently organizing the experts and data flow, it avoids wasteful traffic jams and improves overall performance. The team’s approach involves three key innovations:

Adaptive Systolic Array: Fine-tuning the Orchestra

The researchers have devised a system called the “3D-Adaptive GEMV-GEMM-ratio systolic array,” which dynamically adapts to the fluctuating demands of the MoE architecture. GEMV (general matrix-vector multiplication) and GEMM (general matrix-matrix multiplication) are the two workhorse operations of LLMs: the first handles one input at a time, the second handles a whole batch at once. Traditional systolic arrays are like a factory assembly line, great for repetitive tasks. The new design, however, is more like a flexible manufacturing plant, able to switch quickly between the two kinds of operation and so maximize efficiency.
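The difference between the two operations is easy to see in a few lines of code. The sketch below is a plain-Python illustration, not a model of the chip: `weight_reuse` is a hypothetical helper that assumes each weight is fetched from memory once per batch.

```python
def gemv(weights, x):
    """Matrix-vector product: one input vector against a weight matrix."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def gemm(weights, xs):
    """Matrix-matrix product: a batch of input vectors sharing the same weights.

    xs is a list of input vectors; the result holds one output vector per input.
    """
    return [gemv(weights, x) for x in xs]

def weight_reuse(rows, cols, batch):
    """Multiply-accumulates per weight fetched, assuming one fetch per batch."""
    macs = rows * cols * batch
    weights_loaded = rows * cols
    return macs / weights_loaded

if __name__ == "__main__":
    w = [[1, 2], [3, 4]]
    print(gemv(w, [1, 1]))            # one input
    print(gemm(w, [[1, 0], [0, 1]]))  # a batch of two inputs
    print(weight_reuse(4096, 4096, batch=1), weight_reuse(4096, 4096, batch=32))
```

Under that assumption, a GEMV does one multiply-accumulate per weight fetched, while a GEMM over 32 inputs does 32. A lightly used expert therefore looks like GEMV work and a popular one like GEMM work, which is why hardware tuned for only one of the two tends to be wasteful.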

Smart Scheduling: Orchestrating the Workflow

The team developed a ‘Hardware resource-aware operation fusion scheduler,’ which essentially acts as a conductor for the AI’s processing operations. This scheduler dynamically reorders tasks and efficiently combines multiple steps (like attention and MoE computations), ensuring that the different parts of the system work in concert, minimizing idle time.
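The article does not describe how the scheduler decides what to combine, so the following is only a toy illustration of the general idea of resource-aware fusion: consecutive steps are grouped for as long as their combined working memory fits an on-chip budget. The `Op` type, the step names, and every size in KiB are made up for the example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Op:
    name: str
    buffer_kib: int  # hypothetical on-chip buffer the step needs while running

def fuse(ops, budget_kib):
    """Greedily group consecutive ops whose combined buffer need fits the budget."""
    groups, current, used = [], [], 0
    for op in ops:
        # Close the current group if adding this op would exceed the budget.
        if current and used + op.buffer_kib > budget_kib:
            groups.append(current)
            current, used = [], 0
        current.append(op)
        used += op.buffer_kib
    if current:
        groups.append(current)
    return groups

if __name__ == "__main__":
    pipeline = [
        Op("attention_qk", 96),
        Op("softmax", 32),
        Op("attention_v", 96),
        Op("moe_gate", 16),
        Op("expert_ffn", 192),
    ]
    for group in fuse(pipeline, budget_kib=256):
        print(" + ".join(op.name for op in group))
```

With these illustrative sizes, the attention steps and the MoE gate end up fused into one group, and the expert computation runs as a second group. A real scheduler would also have to weigh reordering, bandwidth, and compute availability, none of which this sketch attempts.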

Memory Management: Efficient Resource Allocation

The system also includes ‘MoE Score-Aware HBM access reduction with even-odd expert placement.’ This is a clever trick to reduce unnecessary data transfers. It prioritizes fetching the most relevant information from memory, like a librarian who quickly finds the most needed books. This approach significantly reduces the amount of data that needs to be moved around, saving energy and time.
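The article gives only the name of this technique, so the sketch below is a guess at the flavor of the idea rather than the team's method. It assumes two things that are not in the source: that "even-odd placement" means even-numbered experts live in one memory bank and odd-numbered experts in another, and that "score-aware" means experts with a low routing score are not fetched at all. The threshold `min_score` and both function names are hypothetical.

```python
def place_even_odd(num_experts):
    """Assumed layout: even-numbered experts in bank 0, odd-numbered in bank 1."""
    return {expert_id: expert_id % 2 for expert_id in range(num_experts)}

def plan_fetches(routed, placement, min_score=0.1):
    """Group the experts worth fetching by bank, highest routing score first.

    routed: list of (expert_id, score) pairs chosen by the router.
    Experts scoring below min_score are skipped (an assumed pruning rule).
    """
    plan = {bank: [] for bank in sorted(set(placement.values()))}
    for expert_id, score in sorted(routed, key=lambda pair: pair[1], reverse=True):
        if score >= min_score:
            plan[placement[expert_id]].append(expert_id)
    return plan

if __name__ == "__main__":
    placement = place_even_odd(4)
    routed = [(0, 0.5), (1, 0.05), (3, 0.3), (2, 0.15)]
    print(plan_fetches(routed, placement))
```

In this toy run, expert 1 is never fetched because its score is below the threshold, and the remaining requests are split across the two banks so they could, in principle, be served in parallel.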

The Results: A New Era of Efficient AI

The results are impressive. Compared to the best existing solutions, the Georgia Tech team’s 3D chip architecture demonstrates significant improvements: latency that is 1.8 to 2 times lower, energy consumption that is 2 to 4 times lower, and throughput that is 1.44 to 1.8 times higher. It’s like trading in your old jalopy for a high-performance electric vehicle – dramatically faster, more efficient, and better for the planet.
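"Times lower" figures can be hard to picture, so it helps to convert them into percentages. The short calculation below uses only the ranges quoted above; the helper names are just for this example.

```python
def percent_reduction(factor):
    """An N-times reduction leaves 1/N of the original, i.e. (1 - 1/N) * 100 percent less."""
    return (1 - 1 / factor) * 100

def percent_increase(factor):
    """An N-times increase is (N - 1) * 100 percent more."""
    return (factor - 1) * 100

if __name__ == "__main__":
    for label, low, high in [("latency", 1.8, 2.0), ("energy", 2.0, 4.0)]:
        print(f"{label}: {percent_reduction(low):.0f}% to {percent_reduction(high):.0f}% lower")
    print(f"throughput: {percent_increase(1.44):.0f}% to {percent_increase(1.8):.0f}% higher")
```

In other words, the reported ranges correspond to roughly 44% to 50% less latency, 50% to 75% less energy, and 44% to 80% more throughput.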

Beyond the Numbers: Implications for the Future

The significance of this research extends beyond mere technical improvements. The dramatic reduction in energy consumption opens the door to a more sustainable AI future. We can envision more powerful, more sophisticated AI models running on devices with smaller batteries and lower energy demands, fostering innovation in many sectors. The work offers hope of a future where AI’s immense potential is not hobbled by its current energy gluttony.

The research by the Georgia Institute of Technology team represents a significant step forward in AI hardware development. Their 3D approach is a testament to the power of creative engineering: it bridges the gap between theoretical advances and real-world implementation, and it offers a glimpse of a more efficient and sustainable AI future.