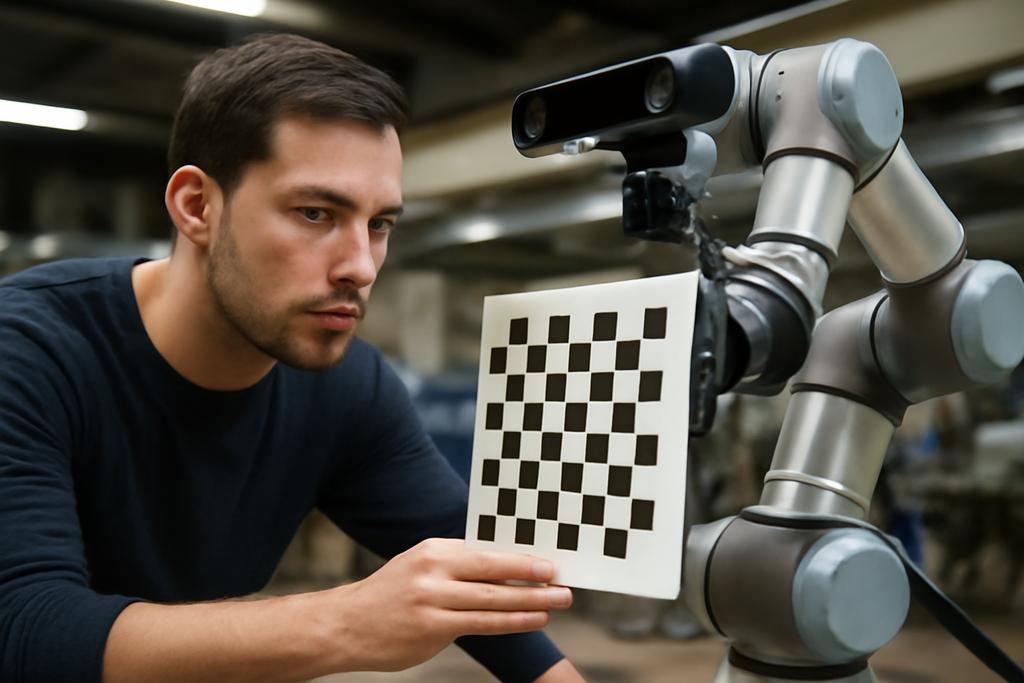

The quiet magic behind modern factory robots is the alignment of what a camera sees with where a robotic hand is allowed to reach. If those two worlds don’t line up perfectly, even the best grasping algorithm can stumble. Calibration has long been the gap between perception and action—a necessary ritual that slows everything down. You point a camera, you move the arm, you balance patterns on a board, you hope the numbers you pull out actually describe the real world. And then you do it again whenever something shifts.

This friction matters more than you might think. In bustling production lines, recalibration isn’t a one-off chore; it’s a recurring maintenance task as tools, cameras, or even the surrounding environment change. The latest work from Aarhus University in Denmark, with collaborators at Lund University in Sweden, aims to erase much of that friction. The authors—led by Li and Wan as equal contributors, with Xuping Zhang as the corresponding author—champion a 3D-vision approach that sidesteps external calibration targets altogether. Their method asks the robot to look at its own base and, from that single glance, align sight and motion in seconds rather than minutes. A project born in academia, it hints at a future where calibration becomes a routine, invisible background task rather than a tedious interruption.

The study isn’t just a theoretical exercise. It spans a broad family of collaborative arms—14 models from 9 brands, including KUKA, Universal Robots, UFACTORY, and Franka Emika—and it culminates in real-world industrial tests. The central promise is simple and powerful: you don’t need a chessboard or a calibration wand. You just need a realistic 3D view of the robot base, a robust registration system, and a dataset that teaches the computer how the base looks from many angles and poses. When the dust settles, the hand-eye calibration lands in a few seconds with accuracy that matches, or narrowly trails, traditional commercial solutions. That’s not just speed; it’s a shift in how quickly a factory can adapt to new tasks or restabilize a line after a tool change.

To readers who’ve watched automation evolve from clunky iron to flexible, near-silent teammates, the core idea of this work is tantalizing: let the robot’s own body be the calibration target. The base has been in plain view for decades, yet calibration routines have relied on separate props rather than treating it as a reference frame. The researchers frame their achievement not as a fix to a niche problem but as a practical rethinking of how a robot discovers its place in the world: on the fly, with 3D data and a bit of learning, no scaffolding required.

Watch the Robot Base for Calibration

At the core of the approach is a clever simplification: instead of chasing an external calibration object, the system watches the robot base itself. The camera, mounted on the end-effector or placed in the workspace, captures 3D data of the base as the robot tilts, swivels, and hums through plausible poses. From those scans, the algorithm estimates the base’s 6D pose—its location and orientation in space. With a reference model of the base in the same frame, the method then computes the transformation that ties the camera frame to the robot base frame (and, by extension, to the end-effector when needed).
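The chain of frames described above can be sketched with 4x4 homogeneous transforms. This is a minimal illustration, not the authors' code: the function names, the frame-naming convention (`T_a_b` maps coordinates in frame b into frame a), and all numeric values are assumptions made up for the example.

```python
import numpy as np

def make_transform(R, t):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

def invert_transform(T):
    """Invert a rigid transform using R^T instead of a general matrix inverse."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv

def rot_z(angle):
    """Rotation about the z axis by `angle` radians."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def camera_in_tcp(T_cam_base, T_base_tcp):
    """Camera pose in the TCP frame, for a camera mounted on the end-effector.

    T_cam_base: base pose seen from the camera (what registration would estimate).
    T_base_tcp: TCP pose in the base frame (what forward kinematics would report).
    """
    T_base_cam = invert_transform(T_cam_base)
    return invert_transform(T_base_tcp) @ T_base_cam

# Made-up example values, in meters and radians.
T_cam_base = make_transform(rot_z(0.3), [0.1, -0.2, 0.8])
T_base_tcp = make_transform(rot_z(-0.5), [0.4, 0.0, 0.6])
T_tcp_cam = camera_in_tcp(T_cam_base, T_base_tcp)

# Going base -> TCP -> camera -> base should return to the starting frame.
loop = T_base_tcp @ T_tcp_cam @ T_cam_base
```

For a camera fixed in the workspace, the inverse of `T_cam_base` is already the camera pose in the base frame, so the forward-kinematics step is not needed.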

In technical terms, the problem is a robust 3D registration task. The captured base point cloud P is aligned to a reference model Q by finding the rotation R and translation t that minimize the distance between P and the transformed Q. Because the two clouds generally differ in scale, the pipeline applies a pre-transformation to match their scales before registration. The result is a candidate camera-to-base (or camera-to-TCP) transform, which in turn gives the hand-eye relationship we care about. The elegance lies in a single, consistent frame of reference, the robot base, that all future observations can point toward.
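To make the objective concrete, here is a small sketch of rigid alignment by least squares. It uses the classical Kabsch (SVD) solution, which is a stand-in chosen for illustration and not the learned registration network used in the paper. It also assumes two things the real problem does not give you for free: point correspondences are already known, and both clouds are already at the same scale. The data are synthetic.

```python
import numpy as np

def kabsch(Q, P):
    """Return R, t minimizing sum_i ||P[i] - (R @ Q[i] + t)||^2.

    Q and P are (N, 3) arrays whose rows are assumed to correspond one-to-one.
    """
    q_mean, p_mean = Q.mean(axis=0), P.mean(axis=0)
    H = (Q - q_mean).T @ (P - p_mean)          # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so the result is a rotation, not a reflection.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) > 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = p_mean - R @ q_mean
    return R, t

# Synthetic test: move a random "reference model" Q by a known rigid motion.
rng = np.random.default_rng(0)
Q = rng.normal(size=(200, 3))
angle = 0.7
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0,            0.0,           1.0]])
t_true = np.array([0.2, -0.1, 0.5])
P = Q @ R_true.T + t_true                      # "captured" cloud

R_est, t_est = kabsch(Q, P)
```

With real scans the correspondences are unknown and the overlap is partial, which is exactly the part a learned registration method is meant to handle.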

Three practical decisions make this approach robust across many robots. First, the team built a generic dataset-generation method that doesn’t depend on a single hardware flavor. They simulate a wide range of plausible joint configurations and capture base points from around a hemispherical shell centered on the base. Second, they extend the data with realistic poses by sampling joints within their physical limits, reproducing the kinds of configurations you’d actually see in a factory. Third, they plug in a state-of-the-art registration network called Predator, designed for registering point clouds even when overlaps are sparse. The result is a training regime that teaches a model to recognize the base’s geometry from many viewpoints, even when the scan is incomplete or noisy.
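The first two decisions can be sketched as simple random sampling. This is an illustrative guess at the idea, not the authors' data generator: the function names, the shell radii, and the joint limits below are all hypothetical.

```python
import numpy as np

def sample_hemisphere_viewpoints(n, r_min, r_max, rng):
    """Sample n camera positions in a hemispherical shell above the base origin.

    Directions are area-uniform on the upper unit hemisphere (z uniform in [0, 1],
    azimuth uniform in [0, 2*pi)); distances are uniform in [r_min, r_max].
    """
    z = rng.uniform(0.0, 1.0, size=n)
    phi = rng.uniform(0.0, 2.0 * np.pi, size=n)
    r_xy = np.sqrt(1.0 - z**2)
    directions = np.stack([r_xy * np.cos(phi), r_xy * np.sin(phi), z], axis=1)
    radii = rng.uniform(r_min, r_max, size=n)
    return radii[:, None] * directions

def sample_joint_configs(n, lower, upper, rng):
    """Sample n joint vectors uniformly within per-joint limits (radians)."""
    lower, upper = np.asarray(lower), np.asarray(upper)
    return rng.uniform(lower, upper, size=(n, lower.size))

rng = np.random.default_rng(0)

# Hypothetical shell from 0.5 m to 1.5 m around the base.
viewpoints = sample_hemisphere_viewpoints(500, 0.5, 1.5, rng)

# Hypothetical limits for a 6-joint arm; real limits come from the robot's datasheet.
joint_lower = np.full(6, -np.pi)
joint_upper = np.full(6, np.pi)
configs = sample_joint_configs(100, joint_lower, joint_upper, rng)
```

Each viewpoint and joint configuration pair would then be rendered from a CAD model to produce one partial point cloud of the base, a step omitted here.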

The authors aren’t just playing with simulations. They test across 14 real-world arms from nine brands, using a mix of CAD models and real scans to train and validate. That breadth matters: in industry, a calibration method risks collapsing if it can’t handle the diversity of tools and shapes that populate a plant floor. Here, the hand-eye system is demonstrated to generalize beyond a single vendor, which makes the claim of practicality far stronger than a polished demo on one robot.

Faster, Simpler Calibration, Anywhere

The real payoff is time. In their most compelling real-world experiment, the team hooked up a UR10e robot with a high-precision 3D camera (the Zivid 2+ MR60) and staged a direct comparison with a commercial eye-in-hand calibration solution built around the traditional AX = XB formulation. The classic path (calibration object in view, multiple arm poses, several captures, repeated calibrations to average out noise) took roughly two minutes and forty-eight seconds per calibration, with the operator juggling board patterns and camera angles. The new method, by contrast, needs only a single pose: in a typical run reported in the paper, a complete calibration takes 6 seconds from one scan, with minimal manual intervention.
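For readers unfamiliar with the AX = XB formulation, the sketch below checks the identity on synthetic data. The article only names the formulation, so the convention used here is an assumption taken from the common textbook setup: X is the camera pose in the gripper frame, A is the relative gripper motion between two arm poses, and B is the corresponding relative camera motion while observing a fixed target. All poses are randomly generated.

```python
import numpy as np

def rotation_from_axis_angle(axis, angle):
    """Rodrigues' formula: rotation by `angle` radians about `axis`."""
    axis = np.asarray(axis, dtype=float)
    axis = axis / np.linalg.norm(axis)
    K = np.array([[0.0, -axis[2], axis[1]],
                  [axis[2], 0.0, -axis[0]],
                  [-axis[1], axis[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)

def random_pose(rng):
    """Random rigid transform as a 4x4 homogeneous matrix."""
    T = np.eye(4)
    T[:3, :3] = rotation_from_axis_angle(rng.normal(size=3),
                                         rng.uniform(-np.pi, np.pi))
    T[:3, 3] = rng.uniform(-1.0, 1.0, size=3)
    return T

rng = np.random.default_rng(1)
X = random_pose(rng)               # camera pose in the gripper frame (the unknown)
T_base_target = random_pose(rng)   # calibration target, fixed in the base frame
G1, G2 = random_pose(rng), random_pose(rng)   # two gripper poses in the base frame

# What the camera would observe at each arm pose: the target in the camera frame.
C1 = np.linalg.inv(G1 @ X) @ T_base_target
C2 = np.linalg.inv(G2 @ X) @ T_base_target

A = np.linalg.inv(G2) @ G1         # relative gripper motion
B = C2 @ np.linalg.inv(C1)         # relative camera motion

residual = np.linalg.norm(A @ X - X @ B)
```

A real solver works in the other direction: it collects several (A, B) pairs from different arm poses and solves for X, which is why the classic procedure needs multiple poses and captures.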

The quantitative takeaway is striking. The researchers report translation offsets on the order of a few millimeters and rotational offsets on the order of a few thousandths of a radian when comparing their results to the baseline. In their mixed-robot test, the differences between the proposed method and the traditional calibration are modest, roughly 2 to 3 millimeters in position and around a thousandth or two of a radian in rotation in the cross-brand comparisons, yet the time savings are dramatic. They also show that even with a single frame, without stacking multiple views, the method yields stable, near-reference results. In other words, you don’t need to choreograph a long sequence of robot poses to get a reliable calibration; one well-captured snapshot can do the job.
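Offsets like these are typically computed by comparing two rigid transforms. The sketch below shows one common way to do it; the metric definitions and function name are assumptions, and the numbers are made up to land in the same range as the reported figures, not taken from the paper.

```python
import numpy as np

def transform_offsets(T_a, T_b):
    """Return (translation offset, rotation offset in radians) between two 4x4 transforms.

    Translation offset is the Euclidean distance between the two positions.
    Rotation offset is the angle of the relative rotation R_a^T @ R_b.
    """
    dt = np.linalg.norm(T_a[:3, 3] - T_b[:3, 3])
    R_rel = T_a[:3, :3].T @ T_b[:3, :3]
    cos_angle = np.clip((np.trace(R_rel) - 1.0) / 2.0, -1.0, 1.0)
    return dt, np.arccos(cos_angle)

def rot_z(angle):
    """Rotation about the z axis by `angle` radians."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

# Illustrative values only: a reference calibration and a slightly different one.
T_ref = np.eye(4)
T_new = np.eye(4)
T_new[:3, :3] = rot_z(0.002)               # 2 milliradians about z
T_new[:3, 3] = [0.002, 0.001, -0.002]      # offsets in meters

dt, dtheta = transform_offsets(T_ref, T_new)
print(f"translation offset: {dt * 1000:.2f} mm, rotation offset: {dtheta:.4f} rad")
```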

To aid broader industry adoption, the authors emphasize that their pipeline creates a frictionless path: no external calibration boards, no special lighting, no bespoke targets, and no complex choreography. The base becomes a consistent, intrinsic landmark that all future scans can reference. And because they trained the model across a spectrum of arms and joints, the method is less likely to stumble when a plant upgrades a tool or swaps in a different make of robot arm. The overarching implication isn’t just faster calibration; it’s a more adaptable, less brittle approach to keeping perception and action in step as a factory evolves.

Another practical win is in the data workflow. The pipeline leverages modern, machine-learning–driven 3D registration to bridge the gap between what the camera sees and where the robot believes it is. The data generation strategy—simulated poses, hemispherical viewpoints, and realistic joint constraints—helps ensure the model can cope with the real world’s imperfections: noisy depth, partial overlaps, and minor misalignments between CAD models and physical parts. The end result is a calibration technique that feels almost like a “set it and forget it” improvement, especially valuable in environments where recalibration must happen regularly due to tool changes or layout reconfigurations.

The study culminates in a broader claim about the state of practical robotics: a well-designed fusion of 3D vision, learning-based registration, and physics-grounded data generation can outpace, or at least rival, traditional calibration approaches while trimming the human and time cost. It’s a reminder that the bottleneck in automation isn’t always hardware; often it’s the pipeline that makes hardware useful in the real world. And when that pipeline is simpler, faster, and more generalizable, it multiplies the value of every robot on the floor.

In short, calibration becomes less about chasing a perfect diagram and more about letting the robot read its own geometry in 3D, quickly and reliably.

Credit where it’s due: the study is rooted in institutions that sit at the intersection of theory and shop-floor pragmatism. The work was carried out by researchers from Aarhus University in Denmark, with collaboration from Lund University in Sweden, reflecting a cross-border emphasis on hands-on robotics. Li and Wan share first authorship, underscoring their equal role in steering the project, while Xuping Zhang serves as the corresponding author and the project’s senior voice. The team’s breadth (covering 14 arms from 9 brands, validating with industrial hardware, and releasing code for public use) speaks to a culture where academic rigor meets practical manufacturing.

As the paper closes, the authors sketch a future that looks less like a sequence of calibration rituals and more like a continuous, unobtrusive background process. They hint at bringing the approach into commercial products and making the workflow friendlier for workers who aren’t robotics specialists. If the promise holds, the next time a factory retools a line or swaps a gripper, the robot’s eye won’t need a special chessboard to regain its bearings; it will simply look at its base and know where it stands.