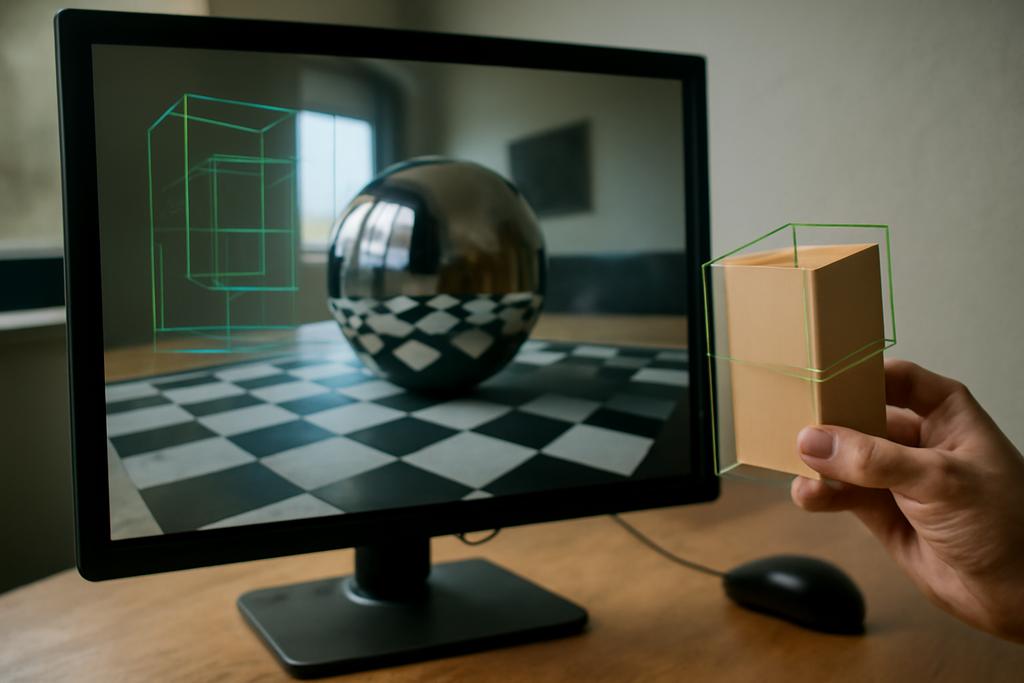

In the race to render photorealistic scenes at interactive speeds, the shape of a single bounding box can decide whether a scene feels instant or stuttery. Real-time ray tracing hinges on a data structure called a bounding volume hierarchy, or BVH, to decide quickly which objects a ray might hit. The tighter the bounding volumes, the fewer calculations the computer has to do to rule out the billions of possible intersections. But there’s a trade-off: tighter boxes usually mean more complex math and more memory, which can slow things down just as much as it speeds them up.

Researchers from Advanced Micro Devices (AMD) and Intel have proposed a clever twist on this long-standing balance. Their paper, DOBB-BVH: Efficient Ray Traversal by Transforming Wide BVHs into Oriented Bounding Box Trees using Discrete Rotations, shows how to convert standard axis-aligned bounding boxes into a family of oriented bounding boxes in a way that’s fast, memory-efficient, and friendly to modern GPUs. The core idea is simple to state and surprisingly powerful: keep all the children of a single internal BVH node aligned with a single, shared orientation, drawn from a carefully chosen, discrete set of rotations. That one constraint unlocks a cascade of performance improvements when rays are traced through the hierarchy on today’s hardware.

The work behind this idea comes from a team led by Michael A. Kern at AMD, with coauthors including Alain Galvan, David R. Oldcorn, Daniel Skinner, Rohan Mehalwal, Leo Reyes Lozano, and Matthäus G. Chajdas, alongside collaboration from Intel. It’s a blend of practical engineering and algorithmic insight, designed to fit into existing BVH pipelines as a post-processing step. The result is not a complete rethinking of ray tracing but a targeted upgrade that makes the common case faster while keeping the memory footprint modest. In a field where every millisecond matters, that balance can unlock smoother real-time rendering for games, architectural viz, film previews, and any application where interactive light transport matters.

What’s new here is less about heroic new theory and more about a pragmatic choreography of geometry, rotation, and hardware-friendly computations. The authors propose a predefined set of discrete rotations that cover the hemisphere in a balanced, uniform way. They then enforce a consistent rotation across all children of a wide internal node, which lets them store and apply the orientation with compact, SIMD-friendly data. And they extend the toolkit to multi-child nodes using discrete orientation polytopes, while keeping the overall build-time impact within real-time-friendly bounds. In other words: they give GPUs a reliable, low-cost way to align bounding volumes with the real directions geometry tends to point in, without paying a high price in memory or compute.

What problem are they solving and why it matters

To understand the significance, it helps to peek under the hood of how modern ray tracers work. A BVH is a tree where each node represents a bounding volume—often an axis-aligned bounding box, or AABB—that encloses a subset of the scene’s geometry. When a ray is shot into the scene, the tracer traverses the tree, checking for intersections with nodes’ bounding volumes. If a ray misses a node, the tracer can skip all its children. If it hits, the tracer continues deeper. The speed of this traversal depends on two things: how quickly you can test a ray against a bounding volume, and how effectively the BVH prunes away large swaths of the scene that don’t matter for a given ray.
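To make the pruning idea concrete, here is a minimal sketch of the standard ray-versus-AABB "slab" test and a recursive traversal that skips any subtree whose box the ray misses. The dictionary-based node layout (`min`, `max`, `children`, `prims`) is a hypothetical structure chosen for illustration, not the layout used in the paper.

```python
import math

def ray_hits_aabb(origin, direction, box_min, box_max, t_max=math.inf):
    """Slab test: True if the ray overlaps the box for some t in [0, t_max]."""
    t_near, t_far = 0.0, t_max
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of planes: it must start between them.
            if o < lo or o > hi:
                return False
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True

def traverse(node, origin, direction, hits):
    """Collect primitives from every leaf whose box the ray touches."""
    if not ray_hits_aabb(origin, direction, node["min"], node["max"]):
        return  # a miss prunes the whole subtree
    if "prims" in node:
        hits.extend(node["prims"])
        return
    for child in node["children"]:
        traverse(child, origin, direction, hits)

# Hypothetical two-leaf tree (illustrative data only).
leaf_a = {"min": (0, 0, 0), "max": (1, 1, 1), "prims": ["a"]}
leaf_b = {"min": (5, 5, 5), "max": (6, 6, 6), "prims": ["b"]}
root = {"min": (0, 0, 0), "max": (6, 6, 6), "children": [leaf_a, leaf_b]}

found = []
traverse(root, (-1.0, 0.5, 0.5), (1.0, 0.0, 0.0), found)
```

In this example the ray enters the root box and the first leaf, but the second leaf is rejected by the slab test, so its contents are never examined. A looser box around the second leaf would let more rays through to it, which is the cost that tighter volumes aim to reduce.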

Axis-aligned boxes are simple and cheap to test, but they don’t wrap tightly around many kinds of geometry. Think of hair strands, cables, or twisted tree branches: narrow, elongated pieces that bend and tilt away from the primary axes. In those cases, AABBs overestimate volume, leading to extra work—more nodes to visit, more tests, more memory traffic. Oriented bounding boxes (OBBs) can fit those shapes far tighter, but constructing, storing, and testing OBBs is more expensive. The prior art has shown that you can convert a prebuilt AABB BVH to an OBB BVH on the GPU as a post-processing step, but doing so efficiently—without blowing up memory and build time—remains tricky, especially for wide BVHs with many children per interior node.

Enter DOBB-BVH. The authors’ insight is to constrain the problem: fix a small, discrete set of rotations and require each wide interior node to use one orientation for all of its children. With that constraint, you can encode the rotation compactly, test rays using hardware-friendly OBB intersection routines, and still gain the advantages of a tighter bounding volume. It’s a bit like choreographing a choir: if every section (each child) sings in harmony (shares the same orientation), the overall performance (ray traversal) sounds tighter and cleaner, even if each individual note is simplified.

The core technique: discretized rotations and a shared orientation

The paper’s construction of discrete rotations is one of its most practical contributions. They define a finite set of rotations by combining a curated set of axes with a discretized set of angles. Specifically, they pick up to 13 orientation axes spread across the hemisphere and pair them with a small set of angles on each axis, generating a library of 104 distinct rotations. This keeps the representation compact and the decoding fast, while providing enough flexibility to cover the kinds of rotations you typically see in real scenes—think of a branch or strand tipping slightly off-axis rather than spinning wildly in every direction.
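A rotation library of this kind could be sketched as follows. The text above gives only the counts (13 axes, 104 rotations), so the rest is assumption: eight angles per axis is inferred from 104 / 13, the axes are taken to be one representative of each antipodal pair among the 26 directions with components in {-1, 0, 1}, and the angles are spaced evenly between 0 and π. The paper's actual axes and angles may differ.

```python
import math
from itertools import product

def hemisphere_axes():
    """13 unit axes: one per +/- pair of the 26 directions in {-1, 0, 1}^3 (assumed choice)."""
    axes = []
    for v in product((-1, 0, 1), repeat=3):
        nonzero = [c for c in v if c != 0]
        # Skip the zero vector, and keep only the half whose first nonzero component is positive.
        if not nonzero or nonzero[0] < 0:
            continue
        length = math.sqrt(len(nonzero))
        axes.append(tuple(c / length for c in v))
    return axes

def rotation_matrix(axis, angle):
    """Rodrigues' formula: rotation by `angle` radians about the unit vector `axis`."""
    x, y, z = axis
    c, s = math.cos(angle), math.sin(angle)
    k = 1.0 - c
    return (
        (c + x * x * k, x * y * k - z * s, x * z * k + y * s),
        (y * x * k + z * s, c + y * y * k, y * z * k - x * s),
        (z * x * k - y * s, z * y * k + x * s, c + z * z * k),
    )

def build_rotation_table(angles_per_axis=8):
    """Flat table indexed by axis_index * angles_per_axis + angle_index."""
    # Assumed spacing: evenly distributed angles strictly between 0 and pi.
    angles = [(k + 1) * math.pi / (angles_per_axis + 1) for k in range(angles_per_axis)]
    return [rotation_matrix(a, t) for a in hemisphere_axes() for t in angles]

table = build_rotation_table()
index_bits = (len(table) - 1).bit_length()  # bits needed to address any table entry
```

Whatever the exact axes and angles, the payoff of a fixed table is the same: a node only needs to store an index, and an index into 104 entries fits in 7 bits.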

Once the discrete rotation set is fixed, the method proceeds bottom-up through the BVH. At leaf nodes, which in this work enclose two triangles, the algorithm derives a local frame from the geometry and maps that into the nearest rotation from the discrete set. The real trick happens at interior nodes: instead of letting each child carry its own OBB with its own orientation, the method selects a single rotation for the whole node and refits each child’s bounding volume in that shared rotated space. This yields a single, compact rotation index per interior node. Because all children live in the same rotated frame, the memory footprint for storing orientation data is tiny—just a few bits per node—and the decoding on the GPU becomes a simple lookup followed by a fast matrix reconstruction.
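The shared-orientation step can be illustrated with a brute-force sketch: try every candidate rotation, refit each child's box in that rotated frame, and keep the rotation with the lowest total surface area. This is a simplification under stated assumptions. The surface-area cost, the exhaustive search, the refit from raw vertices, and the three-entry candidate table of rotations about z are all illustrative choices, not the paper's GPU procedure.

```python
import math

def rot_z(angle):
    """Rotation about the z axis; a stand-in for entries of the discrete table."""
    c, s = math.cos(angle), math.sin(angle)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))

def to_local(R, p):
    """Express world-space point p in the frame whose axes are the columns of R."""
    return tuple(sum(R[r][c] * p[r] for r in range(3)) for c in range(3))

def bounds(points):
    lo = tuple(min(p[i] for p in points) for i in range(3))
    hi = tuple(max(p[i] for p in points) for i in range(3))
    return lo, hi

def surface_area(lo, hi):
    dx, dy, dz = (hi[i] - lo[i] for i in range(3))
    return 2.0 * (dx * dy + dy * dz + dz * dx)

def pick_shared_rotation(children, table):
    """Return (rotation index, per-child boxes in that frame) with minimal total area."""
    best = None
    for index, R in enumerate(table):
        boxes = [bounds([to_local(R, p) for p in child]) for child in children]
        cost = sum(surface_area(lo, hi) for lo, hi in boxes)
        if best is None or cost < best[0]:
            best = (cost, index, boxes)
    return best[1], best[2]

# Two thin strands running along the xy diagonal (illustrative data only).
strand_a = [(0, 0, 0), (2, 2, 0), (0, 0, 0.1), (2, 2, 0.1)]
strand_b = [(3, 0, 0), (5, 2, 0), (3, 0, 0.1), (5, 2, 0.1)]
candidates = [rot_z(0.0), rot_z(math.pi / 4), rot_z(math.pi / 2)]

chosen, child_boxes = pick_shared_rotation([strand_a, strand_b], candidates)
```

Here the 45-degree candidate wins by a wide margin, because both strands collapse into thin slivers in that frame, whereas the axis-aligned frame wraps each one in a 2 × 2 footprint. The node then stores only `chosen` plus the per-child bounds.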

To avoid the pitfall of misaligned projections when you rotate the bounding box, the authors introduce apex point maps. These are geometric constructs that let you compute the planes that bound each OBB in the rotated space, even when your proxies (the 26-DOP projections used to guide the fit) don’t line up perfectly with the OBB’s axes. It’s a clever workaround that preserves conservative bounds without dragging along a heavy computation budget. In practice, this means you can reuse simple, fixed-projection data at leaf nodes and still get tight, robust bounds higher up in the tree.

The attention to memory and SIMD efficiency is not accidental. The team’s hardware-oriented mindset shines in the traversal design. They implement a GPU kernel that decodes the small rotation index, transforms the ray into the node’s rotated space, and tests the ray against all of the node’s children in parallel. The result is a traversal loop that plays nicely with the way modern GPUs pipeline work across many threads and SIMD lanes, preserving the performance advantages of hardware-accelerated ray-box tests while amplifying them with better-fitting volumes.
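The per-node traversal step described above can be sketched in a few lines: look up the node's rotation, transform the ray once, then run an ordinary box test against every child in the rotated frame. The two-entry `ROTATION_TABLE`, the node dictionary, and the sequential Python loop are hypothetical stand-ins; on a GPU the child tests would run in parallel rather than one after another.

```python
import math

def rot_z(angle):
    c, s = math.cos(angle), math.sin(angle)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))

# Hypothetical stand-in for the precomputed table of discrete rotations.
ROTATION_TABLE = [rot_z(0.0), rot_z(math.pi / 4)]

def to_local(R, v):
    """Apply the transpose of R, taking a world-space vector into the node's frame."""
    return tuple(sum(R[r][c] * v[r] for r in range(3)) for c in range(3))

def slab_test(origin, direction, lo, hi):
    """Ray-box test in whatever frame the inputs are expressed in."""
    t_near, t_far = 0.0, math.inf
    for o, d, a, b in zip(origin, direction, lo, hi):
        if d == 0.0:
            if o < a or o > b:
                return False
            continue
        t0, t1 = (a - o) / d, (b - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True

def intersect_node(node, origin, direction):
    """Return indices of children whose rotated boxes the ray hits."""
    R = ROTATION_TABLE[node["rot_index"]]  # decode: a plain table lookup
    o = to_local(R, origin)                # one ray transform per node,
    d = to_local(R, direction)             # shared by all children
    return [i for i, (lo, hi) in enumerate(node["child_bounds"])
            if slab_test(o, d, lo, hi)]

# Child bounds are stored in the node's rotated frame (illustrative data only).
node = {
    "rot_index": 1,
    "child_bounds": [
        ((0.0, -0.1, 0.0), (2.83, 0.1, 0.1)),
        ((0.0, 2.0, 0.0), (2.83, 2.2, 0.1)),
    ],
}
hit_children = intersect_node(node, (1.0, 1.0, 5.0), (0.0, 0.0, -1.0))
```

Because every child lives in the same frame, the ray is rotated once per node rather than once per child, and the inner test is the same axis-aligned slab test used for ordinary AABBs.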

From theory to practice: how much of a win do we actually get?

The authors ran a comprehensive set of experiments across several representative scenes—Hairball (hair-like geometry), White Oak (a leafy tree with varied leaf orientations), Dragon (a mix of axis-aligned and rotated geometry), Bistro Exterior, and Powerplant. They compared baseline AABB BVHs with two DOBB variants: a CPU-based offline solver that brute-forces the best orientation, and a GPU-based post-processing pass that uses the discrete-rotation approach with shared orientation per node. The results are striking in places, modest in others, and instructive about where bounding-volume optimization really pays off.

One headline finding is that the transform-then-traverse approach can deliver meaningful speedups even when you account for the extra work of building the DOBB. On average, primary rays benefited by about 18.5% in throughput, with some scenes seeing far larger gains. Secondary rays, which typically drive global illumination and can be the bottleneck in many pipelines, improved by roughly a third, and in some cases by as much as 65%. In other words, the approach shines most where rays are more likely to weave through non-axis-aligned clutter—think foliage, threads, or tangled machinery—where axis-aligned boxes struggle to keep up.

Perhaps most important is the practical reality of the build-time cost. The GPU-based DOBB conversion adds around 10–15% overhead to the BVH construction stage. In absolute terms, that’s a handful of milliseconds for large scenes. For static content, such as a game world whose geometry is built once and then rendered frame after frame, the amortized cost is negligible compared to the runtime gains during rendering. For dynamic scenes that change every frame, there’s more work to be done, but the authors also sketch a path toward integrating DOBB more tightly with dynamic BVHs in the future.

Memory usage is another critical dimension. The DOBB representation stores the rotation as an index into a small set of precomputed matrices, plus a compact encoding of the rotated bounds. The total memory overhead scales with the number of interior nodes and the width of the BVH, but the authors report that the extra bits per node are modest. In the context of modern GPUs with ample high-bandwidth memory, this is a reasonable trade-off for the promise of faster traversal and fewer intersection tests per ray.

In the end, the paper does not claim a universal, across-the-board victory for every scene. The authors observe that scenes with very small, axis-aligned primitives, or scenes where a single coherent orientation dominates, can yield more modest gains from shared-orientation DOBBs. But in cases with pronounced non-axis-aligned geometry and complex traversal patterns, the improvements are meaningful and consistent. The results also hint at the broader potential: if a hardware-optimized method can extract this much performance from a post-processing step, it could be a practical upgrade path for existing rendering pipelines without a wholesale re-architecture of their BVHs.

Why this matters for artists, engineers, and players

For game developers and real-time renderers, the DOBB idea offers a drop-in optimization that sits at the intersection of software cleverness and hardware pragmatism. It’s not asking for new hardware or a radical rethinking of ray tracing; it’s about making better use of what you already have. The shared-orientation trick reduces the amount of memory traffic and makes the ray-OBB tests more SIMD-friendly, which matters on GPUs designed around parallel workloads. The upshot is that scenes with a lot of elongated, tilted geometry—think hair, cables, or branches—become cheaper to render with high fidelity. That, in turn, can enable richer visuals, higher frame rates, and more ambitious lighting techniques like real-time global illumination, with fewer compromises between speed and accuracy.

Beyond games, the approach could influence other domains where fast spatial queries through large, complex scenes matter. In robotics, for example, fast collision checks against cluttered environments can benefit from tighter bounding volumes without exploding memory usage. In visual effects and architectural visualization, artists could push for higher detail in the same real-time or interactive previews that drive iterative creativity. The method’s emphasis on post-processing integration also means studios can retrofit existing engines, rather than retooling the entire rendering stack from scratch.

One of the paper’s pragmatic throughlines is the idea that good engineering often travels in the gaps between theory and production. The discrete-rotation palette is a deliberately small, well-chosen toolbox. The shared-orientation rule is a simple constraint with outsized consequences for traversal efficiency. The apex-point maps are a practical trick that keeps geometry robust without drowning in computational taxes. Taken together, these ideas illustrate how progress in computer graphics doesn’t always mean a single leap forward; sometimes it’s a relay race, with each handoff shaving milliseconds off the critical path.

What the future could look like if this catches on

As the authors note, the next steps include refining the cost heuristics, exploring more sophisticated orientation selection strategies, and extending the approach to dynamic BVHs where geometry moves across frames. There’s also room to explore hybrids that combine AABBs and OBBs within a single node, or to experiment with even richer sets of discrete rotations for particular workloads. The broader implication is a shift in how we think about bounding volumes: not as static cages we toss geometry into, but as adaptable, learnable scaffolds that align with how geometry tends to move and orient itself in the real world.

From a practical standpoint, we could see game engines and real-time renderers offering optional DOBB-based acceleration paths that automatically decide when to apply the transformation based on scene content. For studios pushing toward cinematic-quality GI at interactive rates, that could translate into crisper shadows, more accurate reflections, and richer indirect lighting without ballooning render times. For researchers, the work is a reminder that even in well-worn domains like BVHs, meaningful gains can come from revisiting the basic building blocks with a new constraint or a new perspective on hardware compatibility.

The DOBB-BVH approach also underscores a broader theme in computer graphics: the value of post-processing tweaks. If you can take an existing BVH, apply a compact, hardware-friendly transformation, and realize tangible runtime gains, you unlock a practical path to improvement that doesn’t demand a completely new rendering paradigm. In an industry where every frame is a negotiation between pixels, latency, memory, and power, that kind of middle-ground progress is priceless.

In sum, the work from AMD and Intel researchers demonstrates that you can make real-time ray tracing faster by rethinking how we bound geometry, not by changing the lighting model or the ray tracer’s core, but by smartly reshaping the way we box the world. The key is a disciplined blend of discretized rotations, shared orientations, and geometry-aware proxies that keep the math lean and the results sharp. If the industry adopts these ideas widely, the next generation of real-time visuals could look a little tighter, a little more faithful, and a lot smoother—as if the rays themselves learned a more economical lane to travel in.

Lead researchers and affiliations: The work was conducted by a team at Advanced Micro Devices, Inc. (AMD) with collaboration from Intel Corporation. The lead author is Michael A. Kern, with coauthors including Alain Galvan, David R. Oldcorn, Daniel Skinner, Rohan Mehalwal, Leo Reyes Lozano, and Matthäus G. Chajdas. The study exemplifies how industry labs are pushing the envelope on practical, production-ready graphics technologies that could reshape what we see in real time on consumer hardware.