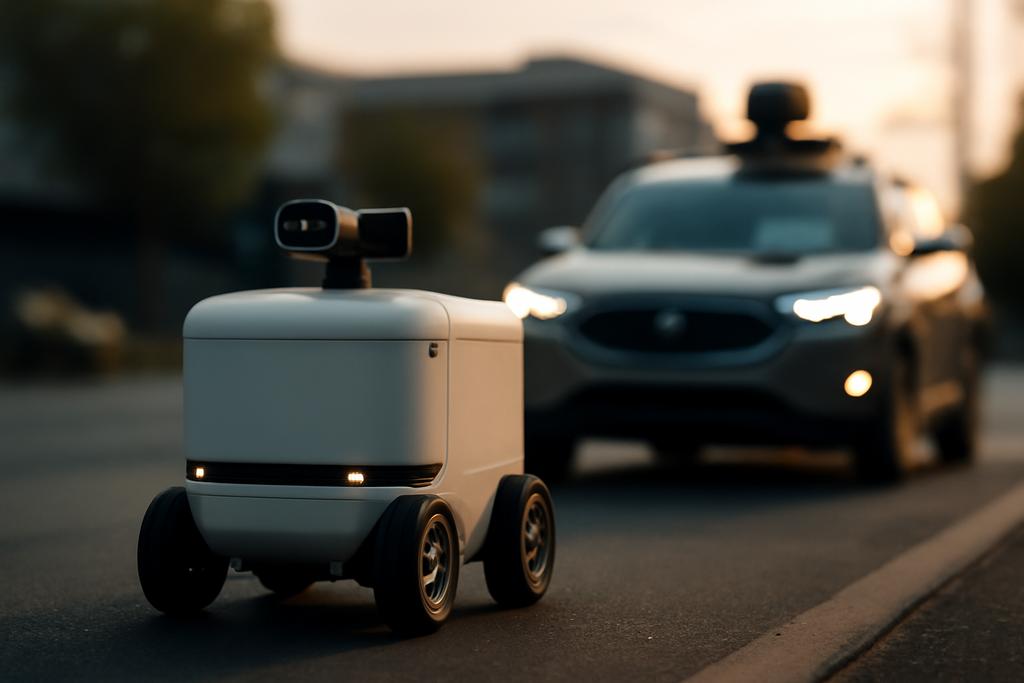

In a world racing toward smarter devices, the challenge isn’t just collecting data but turning it into quick, trustworthy decisions where they matter most. Imagine autonomous vehicles, delivery drones, or factory robots that must both feel their surroundings and decide what to do in the blink of an eye. A new study from a team led by Peng Liu at Beijing Institute of Technology and collaborators across several institutions proposes a bold blueprint for making that dual demand—sensing and computing—work together at the edge of the network. It’s not just clever engineering; it’s a new way to think about where computation happens in real time, and how we can squeeze speed, privacy, and efficiency out of the airwaves themselves.

The paper, a meticulous synthesis of wireless signaling, deep learning, and distributed computing, introduces a three-tier ISCC framework, short for Integrated Sensing, Communication, and Computing. In plain terms: devices sense the world, talk to nearby edge servers, and leave only the final stretch of the neural network to cloud servers, all while keeping latency to a minimum. The core idea is to partition a deep neural network across device, edge, and cloud in a flexible way and to coordinate beamforming and computation with mathematical tricks that feel almost like a tight, choreographed dance. The authors show that by transmitting intermediate features rather than raw sensing data, the system can dramatically cut fronthaul traffic and still deliver sharp sensing and fast inferences. The work represents a rare blend of theory and practical design, and it comes with a credible claim: in the authors’ simulations, a three-tier architecture outperforms two-tier setups on latency while staying robust as conditions change.

At the heart of the project is a collaborative inference pipeline that uses multiple antennas, smart beam steering, and a pre-trained DNN model to recognize targets or objects from sensing data. The lead author, Peng Liu, and colleagues from the Beijing Institute of Technology, plus partners at The Chinese University of Hong Kong, Shenzhen, the Shenzhen Research Institute of Big Data, Southern University of Science and Technology, and Nanyang Technological University in Singapore, push a simple but powerful idea. If the cloud, the edge, and the device each run a different portion of the neural network, and if the device sends only intermediate features rather than raw measurements, you can cut both the data you transmit and the time it takes to infer. The result is an architecture that feels a bit like a relay race for computation, where each leg runs with just enough fuel to keep the baton moving quickly to the next station.

A Three-Tier Architecture That Learns

To picture the setup, imagine a fleet of ISAC (integrated sensing and communication) devices scattered around a city square. Each device isn’t just a sensor; it’s a transmitter with multiple antennas that can shape radio waves to both probe the environment and carry data to the next station. A base station with an edge computing server sits nearby, ready to perform the middle stretch of the neural network. And far away, a cloud server holds the rest of the model, ready to finish the job with even bigger compute power. This triad of devices, edge, and cloud forms a living, breathing pipeline that can split the neural network into three chunks and run each chunk where it makes the most sense, given the current network conditions and sensing tasks.

The paper formalizes how these chunks are decided. Each device hosts the early layers of the DNN, the edge server (the MEC server, in the paper’s shorthand) handles intermediate layers, and the cloud completes the final layers. A crucial design constraint says that a device’s DNN may have at most two partition points, one between device and edge and one between edge and cloud, which keeps the split flexible without adding more handoffs than the three tiers require. The system also uses a beamforming strategy that tunes how the ISAC signals are transmitted so the sensing beams hit the targets of interest while keeping interference down and the data rates up. In practice, that means shaping the beampattern so the radar-like sensing and the data communication work in harmony, rather than at cross purposes.
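To make the two-cut idea concrete, here is a minimal sketch of how the latency of one inference might be scored for every pair of cut points on a single device. The latency model (compute time plus transmit time per hop), the function names, and all the numbers are illustrative assumptions, not the paper's formulation or data.

```python
def tier_latency(flops, out_bits, raw_bits, cut1, cut2,
                 f_dev, f_edge, f_cloud, r_up, r_back):
    """Latency when layers [0, cut1) run on the device, [cut1, cut2) on the
    edge server, and [cut2, n) in the cloud (assumed additive model)."""
    n = len(flops)
    if not 0 <= cut1 <= cut2 <= n:
        raise ValueError("need 0 <= cut1 <= cut2 <= number of layers")

    def payload(cut):
        # Bits crossing a hop: raw input if nothing ran yet, else a feature map.
        return raw_bits if cut == 0 else out_bits[cut - 1]

    latency = sum(flops[:cut1]) / f_dev
    if cut1 < n:  # something has to leave the device
        latency += payload(cut1) / r_up
        latency += sum(flops[cut1:cut2]) / f_edge
    if cut2 < n:  # something has to leave the edge server
        latency += payload(cut2) / r_back
        latency += sum(flops[cut2:]) / f_cloud
    return latency


def best_partition(flops, out_bits, raw_bits, **rates):
    """Exhaustively score every valid (cut1, cut2) pair and return the best."""
    n = len(flops)
    candidates = [(c1, c2) for c1 in range(n + 1) for c2 in range(c1, n + 1)]
    return min(candidates,
               key=lambda c: tier_latency(flops, out_bits, raw_bits, *c, **rates))


# Made-up per-layer workloads (FLOPs), feature sizes (bits), and rates.
FLOPS = [2e8, 4e8, 3e8, 1e8]
OUT_BITS = [4e6, 1e6, 2e5, 1e4]
RAW_BITS = 8e6
RATES = dict(f_dev=1e9, f_edge=1e10, f_cloud=1e11, r_up=2e7, r_back=1e8)

cut1, cut2 = best_partition(FLOPS, OUT_BITS, RAW_BITS, **RATES)
print(cut1, cut2, tier_latency(FLOPS, OUT_BITS, RAW_BITS, cut1, cut2, **RATES))
```

Exhaustive scoring is fine for one device with a handful of layers; with many devices the number of joint patterns grows combinatorially, which is why a smarter search becomes attractive.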

From the signal engineering side, the authors model the sensing channel with multiple-input multiple-output (MIMO) techniques. Each device’s transmission is designed to produce a beampattern that meets a precomputed target covariance, ensuring sensing quality while also enabling reliable feature transmission to the edge and cloud. The model is intentionally rich: it captures the tradeoffs between sensing beamforming gains, data transmission rates, and the computational load that will be distributed along the three tiers. This is not a toy scenario. The three-tier design is meant to reflect how future networks will actually function when sensing, communication, and computation are fused into a single, data-hungry workflow.
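For readers who want the beampattern idea in symbols, one common way to write it in the MIMO radar literature is shown below. The notation is an assumption for illustration (transmit signal x, steering vector a(θ), target covariance R_d, tolerance γ) and may not match the paper's exact constraint.

```latex
\mathbf{R} = \mathbb{E}\!\left[\mathbf{x}\mathbf{x}^{H}\right], \qquad
P(\theta) = \mathbf{a}^{H}(\theta)\,\mathbf{R}\,\mathbf{a}(\theta), \qquad
\left\lVert \mathbf{R} - \mathbf{R}_{d} \right\rVert_{F}^{2} \le \gamma
```

Here P(θ) is the power radiated toward angle θ. Under this reading, a small γ forces the transmit covariance to stay close to the sensing-optimal target, while a looser γ leaves the beamformer more freedom to favor the data link.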

From Raw Data to Smart Decisions

Why not just send everything to the edge or to the cloud? It’s tempting, but it’s not realistic at scale. Raw sensing data is bulky, and much of it is redundant for the downstream AI tasks. Transmitting it all would clog fronthaul links, drain batteries, and add latency at precisely the moments when speed matters. The authors’ answer is to opportunistically partition the DNN based on resource availability and channel conditions, so that each device handles the early, cheaper layers while the edge and cloud handle progressively heavier computations. This is known as intermediate feature offloading: sending the outputs of early neural network layers rather than the raw data itself. Because those intermediate features can be far smaller than the raw input when the cut point is well chosen, the system can dramatically reduce the data that must travel over the network.

The three-tier arrangement doesn’t just cut traffic; it also helps with privacy and security. By truncating data before transmission, the system reduces the exposure of raw sensing information, which might contain sensitive details about people or places. The pipeline’s privacy by design is a welcome contrast to architectures that shuttle all sensor data upstream, exposing more information than necessary. And because the partitioning is dynamic, the network can adapt if a device’s battery is low, if a network link becomes congested, or if the beampattern must be tightened to focus on a particular target.

In their experiments, the researchers test a familiar neural network architecture—AlexNet—on a scenario with multiple ISAC devices and a cloud-edge-device chain. They simulate a realistic mission: detect and classify targets from radar-like sensing streams while juggling the computational budgets of the device, the MEC server, and the cloud. The signal model uses SDMA (space-division multiple access) to separate users in space, which helps keep sensing accuracy high while maintaining decent data rates. The upshot is a coherent narrative: you can sense the world with devices, relay a compact set of features to the edge, and have the cloud finish the job, all without turning the network into a traffic jam.
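How much a cut point saves depends on where it falls. The sketch below tallies the number of values in each feature map of AlexNet's convolutional stack, assuming the original layer layout and a 227×227×3 image-style input. That input shape is an assumption for illustration; the paper's radar-like sensing data may be shaped differently.

```python
def out_size(size, kernel, stride, pad):
    """Spatial output size of a conv or pooling layer."""
    return (size + 2 * pad - kernel) // stride + 1


# (name, output channels or None for pooling, kernel, stride, padding)
ALEXNET_FEATURES = [
    ("conv1", 96, 11, 4, 0),
    ("pool1", None, 3, 2, 0),
    ("conv2", 256, 5, 1, 2),
    ("pool2", None, 3, 2, 0),
    ("conv3", 384, 3, 1, 1),
    ("conv4", 384, 3, 1, 1),
    ("conv5", 256, 3, 1, 1),
    ("pool5", None, 3, 2, 0),
]


def feature_sizes(side=227, channels=3):
    """Number of values at the input and after each layer (candidate cuts)."""
    sizes = [("input", side * side * channels)]
    for name, out_ch, kernel, stride, pad in ALEXNET_FEATURES:
        side = out_size(side, kernel, stride, pad)
        if out_ch is not None:  # pooling keeps the channel count
            channels = out_ch
        sizes.append((name, side * side * channels))
    return sizes


for name, count in feature_sizes():
    print(f"{name:6s} {count:8d}")
```

Under these assumptions the first convolution actually expands the data, while the output after the last pooling layer is more than an order of magnitude smaller than the input, which is one reason the choice of cut point matters so much.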

A Smart Solution to a Hard Problem

Peering into the engine room of the solution, the paper tackles a very thorny optimization problem. The goal is to minimize the total inference latency for all devices, subject to a suite of constraints—how many partition points each device can use, the available computation at the MEC and at the cloud, how much energy a device can spend, and a beampattern constraint that guarantees sensing quality. In other words, it’s not just about speed; it’s about doing so without blowing through energy budgets or sacrificing sensing accuracy.

To crack this problem, the authors deploy a two-layer optimization strategy. The inner layer handles the continuous variables: how much compute to allocate to each device at the MEC, how much compute each device uses locally, and how to shape the ISAC beamforming. For the compute allocation at the MEC server and on the devices, the authors derive closed-form, computationally light solutions using well-known optimization tools like the Karush-Kuhn-Tucker conditions. For the beamforming, they lean on a sophisticated iterative approach that blends majorization–minimization with weighted minimum mean square error techniques. The mathematical wrangling pays off: the beamforming design becomes a sequence of simpler, solvable problems that home in on an efficient arrangement of power and interference across devices and layers of the DNN.
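The flavor of a KKT-derived closed form can be seen in a textbook case: splitting a shared edge compute budget F among devices to minimize the sum of compute times c_k / f_k. Setting the Lagrangian's derivative to zero gives f_k proportional to the square root of c_k. This is a simplified stand-in with assumed names and numbers; the paper's actual solution also has to respect energy and other constraints.

```python
import math


def allocate_edge_compute(workloads, total_rate):
    """Minimize sum(c_k / f_k) subject to sum(f_k) <= total_rate.

    KKT stationarity gives f_k = total_rate * sqrt(c_k) / sum_j sqrt(c_j).
    """
    roots = [math.sqrt(c) for c in workloads]
    norm = sum(roots)
    if norm == 0:
        return [0.0 for _ in workloads]
    return [total_rate * r / norm for r in roots]


def total_latency(workloads, rates):
    """Sum of per-device compute times, skipping devices with no work."""
    return sum(c / f for c, f in zip(workloads, rates) if c > 0)


# Hypothetical edge-side workloads (FLOPs) and edge budget (FLOP/s).
WORKLOADS = [1e9, 4e9, 9e9]
EDGE_RATE = 6e10

optimal = allocate_edge_compute(WORKLOADS, EDGE_RATE)
equal = [EDGE_RATE / len(WORKLOADS)] * len(WORKLOADS)
print(total_latency(WORKLOADS, optimal), total_latency(WORKLOADS, equal))
```

The square-root rule gives heavier workloads more compute, but less than proportionally, and it beats an equal split whenever the workloads differ.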

The outer layer is where the top-level decision about where to partition the DNN actually gets learned. Rather than rely on brute-force search or a slow, off-the-shelf heuristic, the authors turn to a cross-entropy–based Monte Carlo learning approach. They model the partition decision as a set of binary choices, one for each potential cut point on each device. They then sample many partition patterns, solve the inner-layer problem for each pattern, and keep only the best-performing ones to shape a probability distribution over future samples. Over successive iterations, the distribution concentrates on partition patterns that minimize overall latency. It’s a bit like evolutionary selection, but guided by a principled statistical method rather than trial and error alone.
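The sample, score, keep-the-elite, update loop described above can be sketched in a few lines. This is a generic cross-entropy search over binary vectors, not the authors' code: the `toy_latency` function is a made-up stand-in for solving the inner-layer problem, and the sample counts and smoothing factor are arbitrary assumptions.

```python
import random


def cross_entropy_search(cost, n_bits, n_samples=200, n_elite=20,
                         n_iters=30, smoothing=0.7, seed=0):
    """Search binary vectors by refitting Bernoulli probabilities to elites."""
    rng = random.Random(seed)
    probs = [0.5] * n_bits  # start with no preference for any cut point
    best_x, best_cost = None, float("inf")
    for _ in range(n_iters):
        samples = [[1 if rng.random() < p else 0 for p in probs]
                   for _ in range(n_samples)]
        scored = sorted(((cost(x), x) for x in samples), key=lambda cx: cx[0])
        if scored[0][0] < best_cost:
            best_cost, best_x = scored[0]
        elite = [x for _, x in scored[:n_elite]]
        for j in range(n_bits):
            freq = sum(x[j] for x in elite) / n_elite
            # Smoothed update keeps probabilities from collapsing too early.
            probs[j] = smoothing * freq + (1 - smoothing) * probs[j]
    return best_x, best_cost, probs


TARGET = [0, 1, 0, 0, 1, 0]  # hypothetical best cut pattern for the toy cost


def toy_latency(x):
    """Stand-in for the inner solver: infeasible beyond two cut points."""
    if sum(x) > 2:
        return float("inf")
    return 1.0 + sum(a != b for a, b in zip(x, TARGET))


pattern, latency, probs = cross_entropy_search(toy_latency, n_bits=6)
print(pattern, latency)
```

In a full system each call to the cost function would be expensive, since it means solving the beamforming and compute allocation for that pattern, so the number of samples per iteration becomes the main knob trading search breadth against runtime.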

The results are telling. Compared with two-tier schemes and with a cloud-edge-device setup without DNN partitioning, the proposed three-tier approach delivers noticeably lower inference latency across a wide range of conditions. The cross-entropy–driven outer loop approaches the performance of a brute-force optimal strategy but with dramatically lower computational cost, a crucial advantage for real-time deployments. The authors also highlight a trade-off baked into the system: widening the sensing beampattern (to cover more potential targets) can reduce latency by improving the fronthaul efficiency, but it often comes at the expense of sensing gain. In short, the architecture invites you to tune a dial between how precisely you sense and how fast you infer, depending on the mission’s priorities.

The paper’s simulations are not a mere toy demonstration. They anchor the architecture in plausible hardware: multiple antennas at the devices and the base station, a high-capacity MEC server, and a cloud link with a realistic backhaul rate. They also acknowledge the complexity of scaling up: the inner-layer MM–WMMSE steps (majorization–minimization combined with weighted minimum mean square error) involve matrix calculations and singular-value decompositions, while the outer-layer CE (cross-entropy) method trades off search breadth for convergence speed. The overall message, though, is clear: a well-choreographed three-tier collaboration can achieve near-optimal latency with practical compute budgets, a win for edge AI in dynamic radio environments.

For researchers and engineers, the paper reads like a blueprint with a few knobs to tune. It shows how DNN partitioning can be integrated into a live wireless stack, how MIMO sensing and communication can be interwoven rather than treated as separate layers, and how probabilistic learning can steer design choices in the face of combinatorial complexity. It is also a reminder that the future of intelligent wireless systems will not be a single, monolithic device or a single server that does everything. It will be a mesh of devices, radios, and servers, each making local decisions while harmonizing with the rest of the system through carefully crafted optimization and learning strategies.

The sentence you’ll want to remember from the paper’s big-picture claim is this: when edge devices, edge servers, and the cloud share a pre-trained DNN across three tiers, you unlock lower latency, better sensing, and smarter control without simply pushing more raw data into the network. It’s a design philosophy as much as a technical method: a shift from centralization to distributed intelligence, governed by how we partition work and how we talk to one another across space and time.

In the end, this work is a snapshot of how researchers are reimagining the edge not as a place for offloading raw workloads, but as a living, learning ecosystem where decisions emerge from a chorus of parts. The authors’ approach turns the three-tier ISCC framework into a practical, tunable instrument rather than a theoretical curiosity. And as devices proliferate, as sensors multiply, and as the demands for real-time response grow more intense, that instrument could be a cornerstone of how we build systems that feel almost prescient: aware of their surroundings and ready to act the moment it matters most.