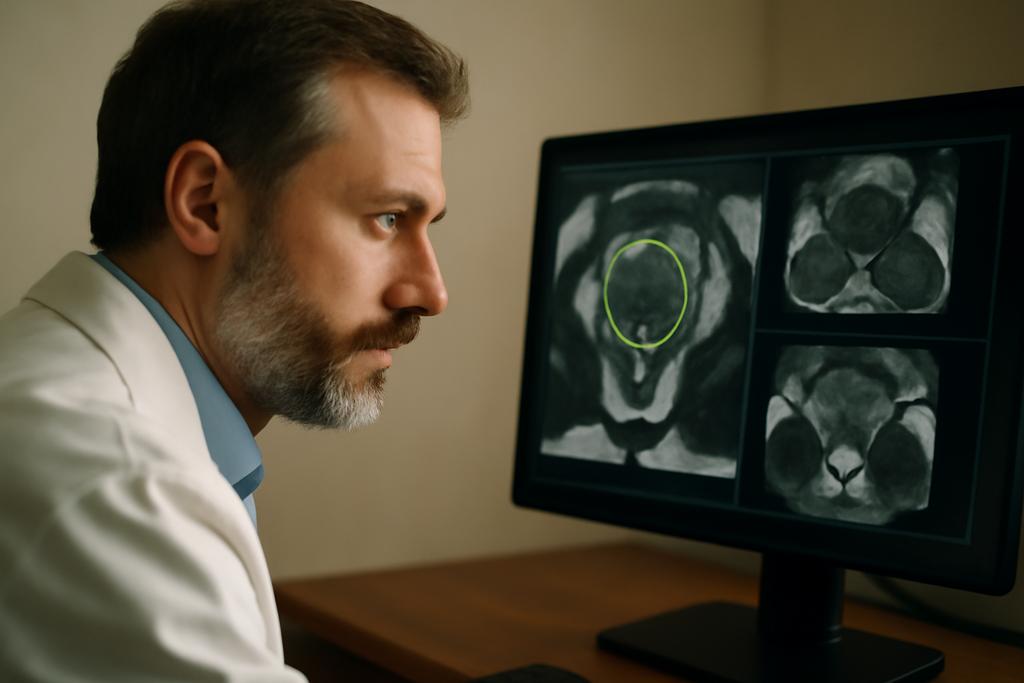

Prostate cancer remains a major health issue, and MRI is a powerful lens for spotting it. But turning those images into reliable, actionable maps, the kind a clinician can trust at a glance, has always been a human-scale challenge. Segmentation, the act of drawing the exact boundaries of the prostate on an image, is slow when done by hand and varies from doctor to doctor. AI has stepped in, yet many tools feel like black boxes: astonishingly capable, but opaque enough to raise questions in a clinical setting. A new study from the University of Southern California (USC) offers a different flavor of machine learning, one that aims to be both practical and transparent. The team, led by Jiaxin Yang, with collaboration from Inderbir S. Gill and C.-C. Jay Kuo, presents Green U-shaped Learning (GUSL), a method that maps prostate structures in MRI through a sequence of simple, interpretable steps rather than through a single, heavyweight neural network.

GUSL is built around a bold idea: you don't need a labyrinth of backpropagated layers to produce precise medical segmentations. Instead, it uses a feed-forward pipeline in which features are learned in linear, multi-scale stages and predictions are refined through residual correction from coarse to fine scales. It emphasizes interpretability: each voxel's features come from a transparent representation rather than an opaque deep network. And it keeps the model small and energy-efficient, a practical advantage for real-world clinics that care about both carbon footprints and hardware budgets. In short, GUSL trades a mind-bending black box for a map that clinicians can read and reason about alongside their patients.

What GUSL Really Is and Why It Sticks Out

The core novelty is not a flashy neural-network triumph but a carefully engineered sequence of steps that together resemble a human-guided segmentation workflow. GUSL is a 3D U-shaped architecture that operates entirely as a feed-forward regression pipeline, with no backpropagation and without millions of tunable parameters. It begins with a lightweight, linear, multi-scale feature extractor called VoxelHop, which turns small 3D neighborhoods around each voxel into compact feature vectors. Each VoxelHop unit applies a channel-wise Saab transform that re-expresses the local information in a spectral space, yielding features interpretable enough to trace back to the image content. Think of it as translating a noisy MRI neighborhood into a readable vocabulary of texture and structure.
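To make the Saab idea concrete, here is a minimal NumPy sketch for a single-channel volume. It assumes 3x3x3 neighborhoods and keeps only the two ingredients the article describes: a constant "DC" kernel that captures the local mean, plus PCA ("AC") kernels fitted to what remains. The bias term of the full Saab transform and the channel-wise, multi-stage cascade of VoxelHop are omitted, and the function names are hypothetical, not taken from the GUSL code.

```python
import numpy as np


def extract_patches_3d(volume, size=3):
    """Collect the size^3 neighborhood around every interior voxel as a flat vector."""
    d, h, w = volume.shape
    r = size // 2
    patches = []
    for z in range(r, d - r):
        for y in range(r, h - r):
            for x in range(r, w - r):
                patches.append(volume[z - r:z + r + 1, y - r:y + r + 1, x - r:x + r + 1].ravel())
    return np.asarray(patches)


def fit_saab(patches, num_ac=4):
    """Fit a Saab-style transform: one DC kernel plus the top PCA (AC) kernels."""
    dim = patches.shape[1]
    dc_kernel = np.ones(dim) / np.sqrt(dim)        # unit-norm constant kernel
    dc = patches @ dc_kernel                        # DC response of each patch
    ac_part = patches - np.outer(dc, dc_kernel)     # remove the DC projection
    ac_part = ac_part - ac_part.mean(axis=0)        # center before PCA
    # Rows of vt are principal directions, ordered by decreasing energy.
    _, _, vt = np.linalg.svd(ac_part, full_matrices=False)
    return np.vstack([dc_kernel, vt[:num_ac]])


def apply_saab(patches, kernels):
    """Project each patch onto the learned kernels: a purely linear map."""
    return patches @ kernels.T


rng = np.random.default_rng(0)
volume = rng.normal(size=(8, 8, 8))
patches = extract_patches_3d(volume)     # 216 interior voxels x 27 values
kernels = fit_saab(patches, num_ac=4)    # 1 DC kernel + 4 AC kernels
features = apply_saab(patches, kernels)  # 216 voxels x 5 features
```

Because every kernel is an explicit, orthonormal vector, each output feature can be inspected as a 3x3x3 pattern, which is the sense in which such features stay traceable to image content.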

From there, the method works through the image in stages, from coarse to fine, adding context at each level through receptive-field expansion. This expansion helps the model distinguish subtle tissue differences by looking at surrounding voxels rather than judging each point in isolation. A residual regression step then refines the predictions as the model moves from the rough outline to the precise boundary. Importantly, GUSL is designed to be transparent about where it is looking and why, because its features remain tied to linear transformations rather than hidden activations in deep networks.
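Receptive-field expansion can be pictured as each voxel borrowing its neighbors' features. The sketch below assumes the simplest version, concatenating a voxel's feature vector with those of its six face-adjacent neighbors and edge-padding the borders; the actual neighborhood shape and pooling used in GUSL may differ, and `expand_receptive_field` is a hypothetical name.

```python
import numpy as np

# Offsets (dz, dy, dx) of the six face-adjacent neighbors in a 3D grid.
NEIGHBOR_OFFSETS = [(-1, 0, 0), (1, 0, 0), (0, -1, 0), (0, 1, 0), (0, 0, -1), (0, 0, 1)]


def expand_receptive_field(features):
    """Concatenate each voxel's features with those of its six neighbors.

    features: array of shape (D, H, W, C). Borders are handled by edge padding.
    Returns an array of shape (D, H, W, 7 * C): the voxel itself first, then neighbors.
    """
    d, h, w, _ = features.shape
    padded = np.pad(features, ((1, 1), (1, 1), (1, 1), (0, 0)), mode="edge")
    parts = [features]
    for dz, dy, dx in NEIGHBOR_OFFSETS:
        # Shifted view: position (z, y, x) now holds the features of (z+dz, y+dy, x+dx).
        parts.append(padded[1 + dz:1 + dz + d, 1 + dy:1 + dy + h, 1 + dx:1 + dx + w])
    return np.concatenate(parts, axis=-1)


feats = np.arange(24, dtype=float).reshape(2, 3, 4, 1)
expanded = expand_receptive_field(feats)  # shape (2, 3, 4, 7)
```

Stacking this step across stages widens the context each voxel sees without introducing any learned weights.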

In practice, the authors implement a two-stage, coarse-to-fine pipeline. Stage one downsamples the whole volume to a lower resolution to obtain a rough prostate mask, which is then upsampled to guide a focused stage-two analysis of a cropped, high-resolution region. The cascade is not merely a trick for speed; it directs learning where it matters most: the boundary, where segmentation errors tend to cluster. The result is a model that delivers accurate gland delineation and prostate zonal masks (the peripheral and transition zones) with a markedly smaller footprint than traditional deep learning models. Its interpretability is reinforced by a design that keeps most of the "how it works" logic in linear, auditable steps rather than nested black-box computations.
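The resolution handoff between the two stages can be sketched with plain block averaging and nearest-neighbor upsampling. This is an illustration under assumptions: the article does not specify GUSL's resampling method or factor, the names are hypothetical, and a simple threshold stands in for the stage-one segmenter.

```python
import numpy as np


def downsample(volume, factor):
    """Average non-overlapping factor x factor x factor blocks.

    Each dimension of `volume` must be divisible by `factor`.
    """
    d, h, w = volume.shape
    blocks = volume.reshape(d // factor, factor, h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3, 5))


def upsample(volume, factor):
    """Nearest-neighbor upsampling: repeat every voxel `factor` times along each axis."""
    for axis in range(3):
        volume = np.repeat(volume, factor, axis=axis)
    return volume


# Toy volume: a bright cube stands in for the gland.
vol = np.zeros((16, 16, 16))
vol[4:12, 4:12, 4:12] = 1.0

coarse = downsample(vol, 4)       # stage-one input, shape (4, 4, 4)
coarse_mask = coarse > 0.5        # hypothetical stand-in for the stage-one segmenter
guide = upsample(coarse_mask, 4)  # full-resolution guide mask, shape (16, 16, 16)
```

Working on a 4x-downsampled volume touches 64 times fewer voxels, which is why the first pass is cheap enough to serve as a global guide.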

The USC team's emphasis on transparency is not cosmetic. They rely on three concrete components to keep the model's decisions traceable: VoxelHop feature extraction, supervised feature learning through LNT (Least-squares Normal Transform) and RFT (Relevant Feature Test), and a boundary-focused residual correction stage. The approach is a fully regression-based architecture with a pragmatic twist: the unsupervised features distilled by the VoxelHop units are supplemented by supervised feature learning that nudges the representation toward the cues that actually determine where the boundary lies. In a field where explainability can be as important as accuracy, this is a deliberate design choice with real-world resonance.
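One way to picture the RFT step named above is as a ranking of feature dimensions by how well a single threshold on each one separates the regression target. The sketch below assumes candidate thresholds at quantiles and a size-weighted variance (MSE) loss; the exact binning and loss used in GUSL may differ, and `rft_scores` and `select_features` are hypothetical names.

```python
import numpy as np


def rft_scores(features, target, num_bins=16):
    """Score each feature by the lowest weighted-MSE loss of a single-threshold split.

    Lower scores mean the feature, on its own, separates the target better.
    """
    n, dims = features.shape
    scores = np.empty(dims)
    for j in range(dims):
        col = features[:, j]
        # Candidate thresholds at the interior quantiles of this feature.
        thresholds = np.quantile(col, np.linspace(0.0, 1.0, num_bins + 1)[1:-1])
        best = np.inf
        for t in thresholds:
            left, right = target[col <= t], target[col > t]
            if len(left) == 0 or len(right) == 0:
                continue
            # Size-weighted variance of the two sides = MSE of predicting each side's mean.
            loss = (len(left) * left.var() + len(right) * right.var()) / n
            best = min(best, loss)
        scores[j] = best
    return scores


def select_features(features, target, keep):
    """Return the indices of the `keep` lowest-loss (most relevant) features."""
    return np.argsort(rft_scores(features, target))[:keep]


rng = np.random.default_rng(0)
X = rng.normal(size=(500, 5))
y = (X[:, 2] > 0).astype(float) + 0.01 * rng.normal(size=500)  # only feature 2 matters
chosen = select_features(X, y, keep=2)
```

Each score is a single number attached to a named feature dimension, so the selection can be audited directly rather than inferred from trained weights.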

The researchers tested GUSL on multiple datasets, including the public ISBI-2013 and PROMISE12 benchmarks plus USC's own Keck dataset. Across gland segmentation and the two prostate zones, GUSL held its own against cutting-edge deep learning models and often surpassed them, particularly on boundary-sensitive tasks. What stands out, though, isn't just the numbers; it's that the model's decisions are explainable enough to discuss with a patient, or with a clinician who wants to understand how the boundaries were drawn.

Two Stages, One Goal: Sharper Boundaries with Fewer Resources

The two-stage cascade is the heart of GUSL's practical power. In stage one, a downsampled volume is processed to produce a rough map of where the prostate lies. This coarse segmentation is intentionally cheap to compute, acting as a global guide that saves precious cycles for the next step. The mask from stage one is upsampled and used to crop a region of interest (ROI) from the original, full-resolution image. Stage two then re-applies the segmentation algorithm to this smaller patch at higher resolution, delivering the final gland mask as well as the transition-zone (TZ) and peripheral-zone (PZ) masks.
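The crop-and-refine step can be sketched as a bounding box around the upsampled stage-one mask, grown by a safety margin, with the stage-two result pasted back into a full-size output. The margin value, the function names, and the threshold used as a stand-in for the stage-two segmenter are all assumptions for illustration.

```python
import numpy as np


def roi_bounds(mask, margin):
    """Bounding box of a boolean 3D mask, grown by `margin` voxels and clipped to the volume."""
    if not mask.any():
        raise ValueError("guide mask is empty; no ROI to crop")
    coords = np.argwhere(mask)
    lo = np.maximum(coords.min(axis=0) - margin, 0)
    hi = np.minimum(coords.max(axis=0) + 1 + margin, mask.shape)
    return tuple(slice(int(a), int(b)) for a, b in zip(lo, hi))


def refine_in_roi(volume, guide_mask, fine_segment, margin=2):
    """Run `fine_segment` only on the cropped ROI and paste its mask into a full-size output."""
    box = roi_bounds(guide_mask, margin)
    output = np.zeros(volume.shape, dtype=bool)
    output[box] = fine_segment(volume[box])
    return output


# Toy example: the "gland" is a small block, and the guide mask comes from stage one.
vol = np.zeros((20, 20, 20))
vol[5:10, 6:12, 7:9] = 1.0
guide = vol > 0.5
box = roi_bounds(guide, margin=2)
final = refine_in_roi(vol, guide, lambda roi: roi > 0.5)  # threshold stands in for stage two
```

Here stage two only processes a 9x10x6 crop (540 voxels) instead of all 8,000 voxels, which is where the savings in the second pass come from.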

Inside each stage, the architecture stacks three crucial ideas. First, unsupervised representation learning is implemented through cascaded VoxelHop units that extract spatial-spectral features with the Saab transform, a linear, PCA-like approach. Second, receptive-field expansion accumulates context by merging neighboring voxel features, so each voxel’s decision benefits from surrounding tissue information. Third, a supervised feature-learning layer—the combination of LNT and RFT—injects task-specific guidance while keeping the representation compact and interpretable.
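The LNT half of the supervised layer can be read, in simplified form, as fitting least-squares regressions from the current features to the targets and appending the fitted linear responses as new, task-aware feature dimensions. The sketch below assumes one regression per target column with a bias term; how GUSL chooses its regression targets is not described here, and the names are hypothetical.

```python
import numpy as np


def _with_bias(features):
    """Append a column of ones so each regression can learn an offset."""
    return np.hstack([features, np.ones((features.shape[0], 1))])


def fit_lnt(features, targets):
    """Solve one least-squares regression per target column (plus a bias term)."""
    weights, *_ = np.linalg.lstsq(_with_bias(features), targets, rcond=None)
    return weights


def apply_lnt(features, weights):
    """Append the fitted linear responses to the original features as new dimensions."""
    return np.hstack([features, _with_bias(features) @ weights])


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 4))
y = X @ np.array([1.0, -2.0, 0.5, 0.0]) + 3.0
W = fit_lnt(X, y[:, None])   # weights shape (5, 1): four coefficients plus a bias
augmented = apply_lnt(X, W)  # shape (200, 5): original features plus one new one
```

Every new dimension is an explicit weighted sum of existing features, so its coefficients can be read off and audited, in keeping with the compact, interpretable representation described above.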

What makes this design especially compelling is that the model remains lean. The paper reports a model size and computational footprint that are an order of magnitude smaller than many contemporary deep networks. The result is a pipeline that can run on more modest hardware and still deliver strong performance on medical segmentation tasks. In an era where energy use and hardware cost increasingly gate the deployment of AI in healthcare, that is not a side benefit but a practical imperative.

The residual correction component is a thoughtful flourish that aligns with clinical intuition. After the initial coarse prediction, a second regressor targets the error-prone boundary regions by focusing on the residual map, the difference between the downsampled ground truth and the current prediction. This ROI-guided correction avoids wasteful re-learning of easy regions (background and large, homogeneous tissue areas) and directs learning power toward the edges, where mistakes most often occur. The predicted residual is added back to the prior prediction, and the process repeats as the model moves to finer scales. This approach mirrors how a clinician might first note the rough location of the gland and then meticulously refine the outline in subsequent passes, paying attention to the base and apex.
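A single correction pass can be sketched as follows. Two assumptions stand in for details the article leaves open: the ROI is taken to be an "uncertainty band" of voxels whose prediction lies between 0.2 and 0.8, and the second regressor is a plain linear least-squares fit. The function names are hypothetical.

```python
import numpy as np


def uncertain_roi(prediction, low=0.2, high=0.8):
    """Assumed ROI: voxels whose current prediction is neither clearly in nor clearly out."""
    return (prediction > low) & (prediction < high)


def _design(features):
    """Append a bias column so the regressor can learn an offset."""
    return np.hstack([features, np.ones((features.shape[0], 1))])


def fit_residual_regressor(features, prediction, ground_truth, roi):
    """Least-squares fit of the residual (ground truth minus prediction) on ROI voxels only."""
    residual = ground_truth - prediction
    weights, *_ = np.linalg.lstsq(_design(features)[roi], residual[roi], rcond=None)
    return weights


def apply_residual_correction(features, prediction, weights, roi):
    """Add the predicted residual back inside the ROI; voxels outside it are left untouched."""
    corrected = prediction.copy()
    corrected[roi] += _design(features)[roi] @ weights
    return np.clip(corrected, 0.0, 1.0)


# Toy data: feature 0 happens to encode exactly what the current prediction is missing.
rng = np.random.default_rng(1)
gt = (rng.random(100) > 0.5).astype(float)
feats = np.column_stack([gt - 0.5, rng.normal(size=100)])
pred = np.full(100, 0.5)
pred[:10] = 0.95  # confident voxels fall outside the ROI
roi = uncertain_roi(pred)
w = fit_residual_regressor(feats, pred, gt, roi)
refined = apply_residual_correction(feats, pred, w, roi)
```

The confident voxels are never revisited, and only the uncertain ones receive a correction, which is the resource-saving behavior the paragraph above attributes to the ROI-guided design.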

Why This Could Change Medical AI, Not Just Prostates

Beyond achieving competitive or state-of-the-art segmentation scores, GUSL’s real promise may lie in how it reframes what “learning” looks like in medical imaging. The pipeline leans on a Green Learning framework: a family of ideas that prioritizes transparency, modularity, and energy efficiency. By sticking to linear, interpretable representations and a sequence of regressors rather than a single monolithic neural network, GUSL makes every step auditable. For clinicians, that can translate into a level of trust that is hard to muster from a giant black-box model.

And the energy and model-size advantages matter. GUSL's footprint is dramatically smaller than that of typical deep learning models, yet it still delivers top-tier performance on gland and zonal segmentation tasks. In hospital settings, where bandwidth, cooling, and electricity are real constraints, this can be the difference between a tool that stays on a clinician's desk and one that never leaves the lab bench. The authors emphasize that their approach is not specialized to the prostate; it is a generalizable strategy for medical image segmentation that could be adapted to other organs or imaging modalities. If this kind of transparent, efficient AI becomes a common pattern, it could catalyze broader physician collaboration, iteration, and trust in algorithmic decision-making.

The USC team has anchored their work in a clear collaboration between electrical engineering, urology, and radiology, underlining the practical, multidisciplinary spirit required to move AI from theory to bedside. The study was led by Jiaxin Yang, with involvement from Inderbir S. Gill and C.-C. Jay Kuo, among others. Their message is not merely that you can segment better; it's that you can do so with a tool that a clinician can discuss, justify, and deploy without requiring a climate-controlled data center. If the future of AI in medicine is a toolkit you can pull off the shelf and tailor to a patient's needs, GUSL's design philosophy could be a meaningful step toward that reality.