In the world of fluid dynamics, the patterns that shape everything from aircraft wings to weather systems are written in numbers. Computational fluid dynamics (CFD) tries to read those patterns by simulating how every drop of fluid slides, swirls, and collides on a digital grid. The math is exact in principle, but in practice it demands mind-bending amounts of computing power, especially when the flow becomes turbulent or when you need to simulate long time horizons. Quantum computers promise a different kind of speed, one rooted in the superposition of many possibilities at once rather than in stacking ever more GPUs. Yet there’s a stubborn snag: even if a quantum computer solves a fluid-dynamics equation in fewer steps, how do you get useful, human-scale data out of its quantum state without drowning in measurements? Researchers call this bottleneck the “output problem.” Without clever ways to translate quantum results into something engineers can act on, the theoretical speedups stay behind a glass wall.

Enter PolyQROM, a bridge built by a team at the University of Science and Technology of China (USTC) in Hefei, with collaborators at the Hefei Comprehensive National Science Center’s Institute of Artificial Intelligence and at Origin Quantum Computing. The study’s leading authors, Yu Fang and Cheng Xue, supported by Tai-Ping Sun and a cadre of colleagues, propose a technique that treats the quantum encoding not as a black box but as a feature-rich signature of the flow. By projecting the high-dimensional flow field onto orthogonal polynomial bases, PolyQROM creates a compact, informative fingerprint of the data. This fingerprint can then be manipulated by the quantum computer or by conventional post-processing, enabling tasks like reconstructing a full flow field from a small set of coefficients or classifying flow regimes with far fewer parameters than a conventional neural network requires. It’s a little like compressing a symphony into a handful of resonant notes that still carry the tune.

Orthogonal Polynomials Power a Quantum Engine

At the heart is an idea that sounds old-school and new-school at once: take a problem in a high-dimensional space and describe it with a carefully chosen basis of mutually orthogonal functions, so that each function captures a component of the data that none of the others does. Orthogonal polynomials are such a basis, with a long history in approximation theory. The PolyQROM team fuses that classic math with quantum neural networks, building what they call an OPQNN: a quantum circuit that embeds trainable parameters into the projection onto Fourier-like and Chebyshev-like bases. The punchline fits in a sentence: you turn a wild, sprawling set of flow data into a compact set of numbers that still remember the essential shape of the flow.
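To make the projection idea concrete, here is a minimal classical sketch, not the authors’ quantum circuit: it projects a toy one-dimensional velocity profile onto Chebyshev polynomials and rebuilds it from a few coefficients. The profile u(y) = 1 - y^2 (laminar channel flow) and the helper names `chebyshev_coefficients` and `chebyshev_eval` are illustrative assumptions; the coefficients are computed with Chebyshev-Gauss quadrature.

```python
import math


def chebyshev_coefficients(f, degree, nodes=64):
    """Project f on [-1, 1] onto Chebyshev polynomials T_0..T_degree.

    Uses Chebyshev-Gauss quadrature: at nodes x_j = cos(theta_j),
    T_k(x_j) = cos(k * theta_j), so each coefficient is a cosine sum.
    """
    thetas = [math.pi * (j + 0.5) / nodes for j in range(nodes)]
    samples = [f(math.cos(t)) for t in thetas]
    coeffs = []
    for k in range(degree + 1):
        c = (2.0 / nodes) * sum(s * math.cos(k * t) for s, t in zip(samples, thetas))
        coeffs.append(c / 2.0 if k == 0 else c)  # T_0 carries half weight
    return coeffs


def chebyshev_eval(coeffs, x):
    """Evaluate sum_k c_k T_k(x) using T_k(x) = cos(k * arccos(x))."""
    theta = math.acos(x)
    return sum(c * math.cos(k * theta) for k, c in enumerate(coeffs))


# Hypothetical toy "flow profile": laminar channel flow u(y) = 1 - y^2 on [-1, 1].
profile = lambda y: 1.0 - y * y
alpha = chebyshev_coefficients(profile, degree=6)
print([round(a, 6) for a in alpha])
# Rebuild the profile at y = 0.3 from only the first three coefficients.
print(chebyshev_eval(alpha[:3], 0.3))
```

Because 1 - y^2 = 1/2 - T_2(y)/2, only the coefficients for T_0 (0.5) and T_2 (-0.5) come out nonzero, so three numbers reproduce the profile exactly. A real flow field needs more terms, but the principle of keeping a short list of informative numbers is the same.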

The circuit design follows two parallel choreographies. One branch of the network implements a basis in the style of the quantum Fourier transform (QFT), the other a basis in the style of the quantum discrete cosine transform (QDCT). In each case, the input flow state is decomposed into coefficients α, each tied to one basis function. Those coefficients are the core of the analysis: they carry enough information to reconstruct the flow with high fidelity or to feed a classifier that recognizes patterns such as a steady jet, a swirling recirculation, or a dam-break plume. The authors emphasize that using an orthogonal basis makes measurements less noisy and steers the learned parameters toward regions of the circuit that correspond to meaningful physical features, rather than chasing a moving target across a landscape of random initializations.
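A classical stand-in can illustrate the two bases. The sketch below assumes the flow has already been amplitude-encoded as a normalized vector and applies a unitary DFT (the matrix a QFT implements, with the QFT’s sign convention) and an orthonormal DCT-II (the transform a QDCT is meant to realize). It checks that both keep the state normalized and compares how much of the squared norm the two largest coefficients capture for a smooth but non-periodic ramp. The ramp signal and the function names are hypothetical.

```python
import cmath
import math


def qft_like(x):
    """Unitary DFT with the QFT sign convention: alpha_k = sum_t x_t e^{+2 pi i t k / n} / sqrt(n)."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(2j * math.pi * t * k / n) for t in range(n)) / math.sqrt(n)
        for k in range(n)
    ]


def qdct_like(x):
    """Orthonormal DCT-II: alpha_k = s_k * sum_t x_t cos(pi (2t + 1) k / (2n))."""
    n = len(x)
    coeffs = []
    for k in range(n):
        s_k = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        coeffs.append(s_k * sum(x[t] * math.cos(math.pi * (2 * t + 1) * k / (2 * n)) for t in range(n)))
    return coeffs


def top_k_energy(coeffs, k):
    """Fraction of the squared norm carried by the k largest-magnitude coefficients."""
    energies = sorted((abs(c) ** 2 for c in coeffs), reverse=True)
    return sum(energies[:k]) / sum(energies)


# Hypothetical amplitude-encoded signal: a normalized linear ramp (smooth, not periodic).
n = 16
raw = [float(t) for t in range(n)]
norm = math.sqrt(sum(v * v for v in raw))
state = [v / norm for v in raw]

alpha_qft = qft_like(state)
alpha_qdct = qdct_like(state)

# Both transforms are orthonormal, so each coefficient vector keeps unit norm (Parseval).
print(sum(abs(a) ** 2 for a in alpha_qft), sum(a * a for a in alpha_qdct))
# Share of the norm held by the two largest coefficients in each basis.
print(top_k_energy(alpha_qft, 2), top_k_energy(alpha_qdct, 2))
```

For this ramp the two largest DCT coefficients hold well over 90% of the norm, versus roughly 81% for the DFT. The gap comes from the DFT’s implicit periodic wrap-around, which turns the ramp’s two ends into a jump; the DCT’s even extension avoids that jump. This is one plausible intuition for why a cosine basis can suit bounded flow fields, not an explanation offered by the study.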

Key idea: by combining well-conditioned mathematical bases with quantum encoding, PolyQROM increases the expressive power of the network while taming the training instabilities that plague generic quantum circuits. The authors show that with this setup, the model trains more reliably and generalizes better across different flow configurations.

The Output Problem Meets a Practical Remedy

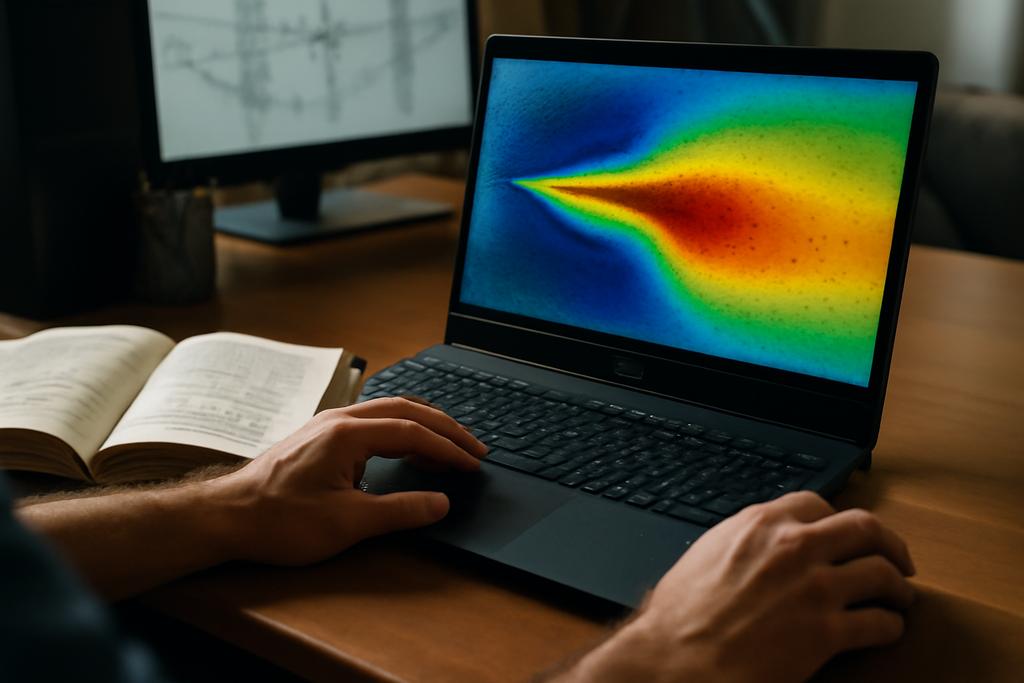

CFD researchers worry about reading quantum outputs because a full quantum-state readout of a 64×64 velocity field would require an astronomical number of measurements: the state holds 4,096 amplitudes, and estimating each one to useful precision takes many repeated runs of the circuit. You don’t need every pixel; engineers care about the dominant structures and how to classify them quickly. PolyQROM sidesteps the problem by targeting low-dimensional, high-signal features rather than a pixel-perfect reconstruction. The team tested their approach on four canonical flows (cavity, tube, cylinder, and dam), each mapped onto a 64×64 grid. They used 14 qubits to compress the data into a compact representation and then ran two separate downstream tasks: a quantum reconstruction that uses a linear combination of unitaries (LCU) to rebuild a target state from the coefficients, and a classical fully connected layer that performs the final classification.
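The LCU step can be simulated classically at toy scale. In this hypothetical sketch, each unitary U_k is assumed to prepare the k-th orthonormal DCT basis vector from |0⟩, so applying Σ_k α_k U_k and post-selecting the ancilla register yields the normalized reconstruction with success probability ‖Σ_k α_k U_k|0⟩‖² / (Σ_k |α_k|)². The coefficients, the 8-point basis, and the helper names are illustrative assumptions, and the sketch does not reproduce how the study allocates its 14 qubits. It also checks the amplitude-encoding arithmetic: a 64×64 field has 4,096 values, which fit into the amplitudes of 12 qubits.

```python
import math


def dct_basis_vector(k, n):
    """k-th orthonormal DCT-II basis vector; assumed (hypothetically) to equal U_k|0>."""
    s_k = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
    return [s_k * math.cos(math.pi * (2 * t + 1) * k / (2 * n)) for t in range(n)]


def lcu_reconstruct(alpha, n):
    """Classically simulate LCU: apply sum_k alpha_k U_k to |0>, then post-select.

    Negative alpha_k are fine: the sign is absorbed into U_k, since -U_k is unitary.
    Returns the normalized output state and the post-selection success probability.
    """
    out = [0.0] * n
    for k, a in enumerate(alpha):
        phi = dct_basis_vector(k, n)
        for t in range(n):
            out[t] += a * phi[t]
    norm = math.sqrt(sum(v * v for v in out))
    p_success = norm ** 2 / sum(abs(a) for a in alpha) ** 2
    return [v / norm for v in out], p_success


grid_points = 64 * 64
data_qubits = math.ceil(math.log2(grid_points))   # 4096 amplitudes need 12 qubits

alpha = [0.9, -0.3, 0.2, 0.1]                     # hypothetical coefficients
ancilla_qubits = math.ceil(math.log2(len(alpha)))  # index register selecting U_k
state, p = lcu_reconstruct(alpha, n=8)
print(data_qubits, ancilla_qubits, p)
```

The success probability is the practical price of LCU: the larger Σ_k |α_k| is relative to the norm of the output, the more repetitions are needed on average before post-selection succeeds.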

The results are telling. For reconstruction, the quantum polynomial networks achieved higher fidelity to the ground truth than a classical Chebyshev-based polynomial fit across multiple flow types and boundary conditions. The two orthogonal-polynomial variants, the QDCT- and QFT-based networks, consistently delivered the best fidelities, with the QDCT variant often edging out the rest. The improvements held as the reconstruction order increased, suggesting that the approach scales gracefully as you ask for more detailed representations of the flow.
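Fidelity here means the squared overlap |⟨ψ|ψ_m⟩|² between the true normalized state ψ and its reconstruction ψ_m from the first m coefficients. A minimal sketch, assuming a fixed orthonormal DCT basis and a made-up Gaussian jet profile rather than the study’s data, shows what “reconstruction order” measures: for an orthonormal basis the fidelity equals the share of squared norm held by the retained coefficients, so it cannot drop as m grows.

```python
import math


def dct_matrix(n):
    """Rows are the orthonormal DCT-II basis vectors phi_k."""
    rows = []
    for k in range(n):
        s_k = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        rows.append([s_k * math.cos(math.pi * (2 * t + 1) * k / (2 * n)) for t in range(n)])
    return rows


def fidelity_at_order(state, basis, order):
    """|<psi|psi_m>|^2, with psi_m the renormalized reconstruction from the first `order` coefficients."""
    alpha = [sum(p * s for p, s in zip(phi, state)) for phi in basis]  # forward transform
    # Truncated inverse transform: keep only the first `order` coefficients.
    recon = [sum(alpha[k] * basis[k][t] for k in range(order)) for t in range(len(state))]
    overlap = sum(s * r for s, r in zip(state, recon))
    return overlap ** 2 / sum(r * r for r in recon)


# Hypothetical 1D slice of a jet: a Gaussian velocity bump on 64 points, normalized.
n = 64
raw = [math.exp(-(((t - 31.5) / 8.0) ** 2)) for t in range(n)]
norm = math.sqrt(sum(v * v for v in raw))
state = [v / norm for v in raw]

basis = dct_matrix(n)
orders = [1, 2, 4, 8, 16, 32, 64]
fidelities = [fidelity_at_order(state, basis, m) for m in orders]
for m, f in zip(orders, fidelities):
    print(f"order {m:2d}: fidelity {f:.6f}")
```

The study’s networks learn their transforms, so their fidelity curves need not match this idealized, fixed-basis one.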

On the topic of training, the researchers paid close attention to how the circuit is initialized. They found that a structured initialization, one aligned with the mathematical basis being implemented, gave a notably lower initial loss and more stable learning than either random initialization or a generic hardware-efficient Ansatz. In the language of optimization, it’s the difference between starting a climb at a marked trailhead and starting from a random foothold on a cliff face: the former makes the ascent faster and less prone to getting stuck in local minima. This is not a trivial observation in quantum machine learning; it suggests that respecting the math behind the basis can substantially ease the search through the rugged landscape of quantum parameter optimization.
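A deliberately tiny toy landscape can illustrate the initialization effect. Assume a hypothetical one-parameter ansatz U(θ) = Ry(θ)·Z whose job is to implement the 2-point orthonormal DCT, which happens to equal the Hadamard gate. For this ansatz the infidelity works out to sin²(θ/2 - π/4), so a structured start at θ = π/2 begins at zero loss, while random starts average about 0.5. The study’s circuits and loss functions are far richer; this only shows why aligning initial parameters with the target basis helps.

```python
import math
import random


def ry(theta):
    """Single-qubit Y rotation as a real 2x2 matrix."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -s], [s, c]]


def matvec(m, v):
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]


def circuit(theta, psi):
    """Hypothetical ansatz U(theta) = Ry(theta) Z applied to a real state psi."""
    return matvec(ry(theta), [psi[0], -psi[1]])  # Z flips the sign of the |1> amplitude


# Target transform: the 2-point orthonormal DCT-II, identical to the Hadamard gate.
r = 1 / math.sqrt(2)
H = [[r, r], [r, -r]]


def loss(theta, psi):
    """Infidelity 1 - |<H psi | U(theta) psi>|^2."""
    target = matvec(H, psi)
    out = circuit(theta, psi)
    overlap = target[0] * out[0] + target[1] * out[1]
    return 1.0 - overlap ** 2


psi = [math.cos(0.4), math.sin(0.4)]
structured = loss(math.pi / 2, psi)  # Ry(pi/2) Z equals H, so this start is already optimal
rng = random.Random(0)
random_losses = [loss(rng.uniform(0, 2 * math.pi), psi) for _ in range(1000)]
print(structured, sum(random_losses) / len(random_losses))
```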

What This Means for CFD and Beyond

Stepping back, what does this mean for the broader field of computational fluid dynamics? If quantum hardware continues to mature, a framework like PolyQROM could become a practical toolkit for the parts of CFD that matter most to engineers: extracting meaningful features from enormous datasets, reconstructing important fields with high fidelity, and classifying complex flow regimes with fewer resources than traditional neural nets require. The team’s reported scaling advantage, which reduces a classification task from O(N^2) in the classical world to roughly O(m log N) on the quantum side for their 64×64 grids, is the kind of asymptotic gain that usually anchors discussions of future computing. More than a flashy number, it underlines that the real bottleneck may be reading the right features out of the data rather than the raw capability of the solver itself.
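Asymptotic notation hides constants, so the following sketch only plugs numbers into the two expressions. It assumes N is the grid side length (so N^2 = 4,096 cells for a 64×64 grid), uses base-2 logarithms, and treats m as a hypothetical number of retained features; none of these interpretations is spelled out in the text. It also ignores state preparation, repeated measurements, and error correction, so the resulting ratios are not wall-clock speedups.

```python
import math


def classical_cost(n):
    """O(N^2) term, constants dropped: one unit of work per cell of an N x N grid."""
    return n * n


def quantum_cost(n, m):
    """O(m log N) term, constants dropped; m and the log base are assumptions."""
    return m * math.log2(n)


N = 64  # assumed: grid side length
for m in (8, 16, 32):  # hypothetical numbers of retained features
    print(f"m={m:2d}: N^2 = {classical_cost(N)}, m*log2(N) = {quantum_cost(N, m):.0f}")
```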

Two important caveats sit alongside this promise. First, current quantum devices are still noisy and limited in qubit count, and the authors acknowledge that demonstrating full flow-physics simulations on near-term hardware remains an open challenge. PolyQROM is designed with that constraint in mind: it favors structured circuit layouts and a compact set of trainable parameters, choices intended to keep training stable on today’s and tomorrow’s devices. Second, the study focuses on a specific class of polynomial bases. The authors hint at a richer future that combines multiple bases to better capture certain flow features, an upgrade that could widen the method’s reach to turbulent, multiscale, or strongly three-dimensional flows.

Still, the broader implication is provocative: the path to quantum-accelerated CFD might hinge less on raw quantum speedups and more on a disciplined pairing of mathematics with hardware-aware networks. The architecture invites cross-pollination with other data-rich sciences—climate modeling, materials science, or even biomedical imaging—where high dimensionality meets the practical need for compact, robust representations. If you squint at the map of quantum computation and CFD, PolyQROM looks like a careful route that honors both the elegance of orthogonal polynomials and the messy reality of real-world data.

Behind the project is a collaboration anchored in Hefei and led by the University of Science and Technology of China, with key contributions from the Hefei Comprehensive National Science Center’s Institute of Artificial Intelligence and Origin Quantum Computing Company Limited. Lead author Yu Fang, together with Cheng Xue and Tai-Ping Sun, shows how a principled fusion of classical mathematics with quantum circuits can deliver tangible gains in fidelity and efficiency. It’s a reminder that big leaps often arrive not from a single bright idea but from a patient stitching together of ideas across disciplines. If PolyQROM is any guide, the future of quantum CFD may be one in which the physics we care about, from the swirling of a dam-break to the shedding of a vortex behind a cylinder, can be read and understood faster, with fewer quantum resources, and with greater confidence.