Robots and humans alike rely on a mental model of the world that’s coherent across time. But in the wild, the data we collect rarely behaves. A single object might be scanned by multiple sensors from different angles, at different moments, with parts constantly slipping in and out of view. The resulting point clouds are messy: sampling isn’t fixed, correspondences between frames may not exist, and occlusions loom like blind spots. Into this jumbled picture steps a fresh way to reason about articulated objects. A team led by Jun-Jee Chao and Qingyuan Jiang at the University of Minnesota, with Volkan Isler at the University of Texas at Austin, proposes a model that treats an object as a collection of moving 3D Gaussians—compact, flexible building blocks that encode both where a part sits and how it moves. The trick is to let these Gaussians do the heavy lifting: they represent rigid parts, their motion over time, and the way those parts relate to one another as a kinematic tree. The result is a method that can infer parts, poses, and even unseen configurations from a stream of raw, irregular data. It’s a rare blend of mathematical elegance and practical robustness, aimed squarely at the messiness of real-world sensing rather than neatly choreographed datasets.

In a field where many methods assume you can continuously track a fixed set of points across time, this work flips the script. Instead of chasing correspondences between specific surface points, the method models the surface as a distribution: a small number of dynamic 3D Gaussians glide over time, each one serving as a moving part. The core insight is simple and powerful: if you know where a part’s points tend to gather in 3D space and how that gathering moves, you can separate the parts and track their motion without insisting that any particular point survives from frame to frame. The authors show that this distribution-based view not only handles partial observations and occlusions better, but also extends to a variety of object types, ranging from robot hands to the everyday objects in the Sapien dataset. They report that their Gaussians-are-parts approach outperforms prior methods that depend on point-to-point correspondences, especially when parts disappear for stretches of time.

A New Way to See Moving Parts

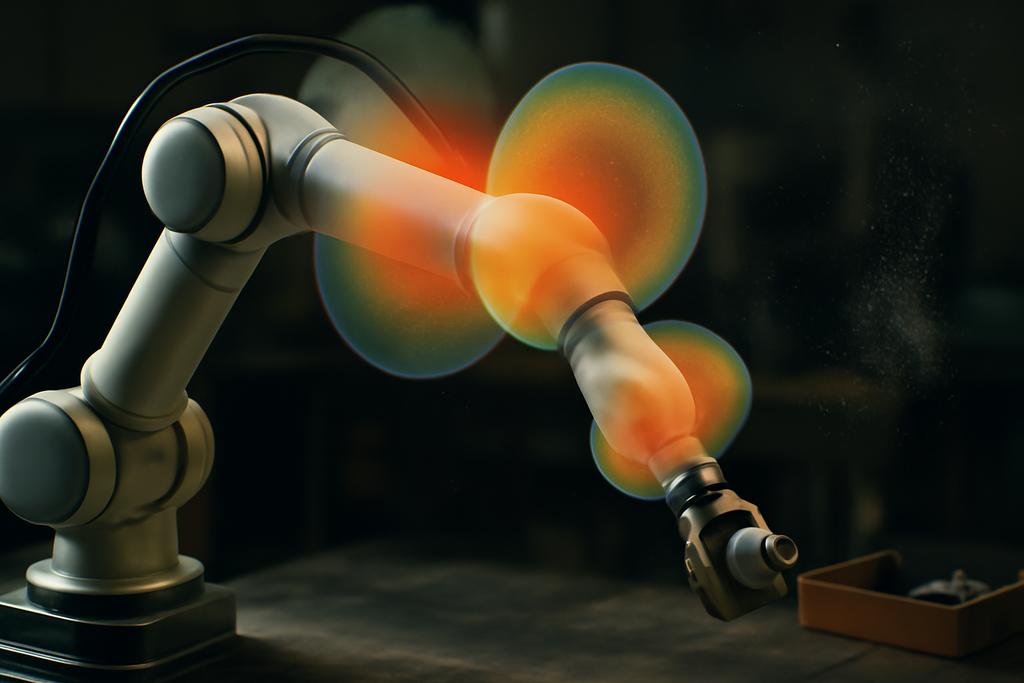

Imagine each rigid part of an articulated object as a little cloud of points, not a single bead you can trace across all frames. The authors formalize this intuition with dynamic 3D Gaussians. Each Gaussian has a center, a scale, and a rotation. The rotation and the center shift over time, while the size stays fixed across frames. In mathematical terms, the k-th Gaussian is parameterized by a time-varying rotation Rk and a time-varying center µk, while its scale s is shared across time steps. This yields a compact, time-aware representation that can move with the part as the object flexes and folds. The world-to-part transformation for a Gaussian is built from that rotation and translation, so each Gaussian carries its own local frame that travels with the part as the object moves.
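To make the parameterization concrete, here is a minimal NumPy sketch of one dynamic Gaussian observed at two time steps. It assumes the covariance is built as R diag(s²) Rᵀ and that the world-to-part map is Rᵀ(x − µ); the function names and the toy numbers are illustrative choices, not taken from the authors’ code.

```python
import numpy as np

def rotation_z(theta):
    """Rotation by theta radians about the z axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def covariance(R, scale):
    """Covariance R diag(scale^2) R^T: orientation changes over time, scale does not."""
    return R @ np.diag(scale ** 2) @ R.T

def world_to_part(points, R, mu):
    """Express world points (N, 3) in the Gaussian's local frame: R^T (x - mu)."""
    return (points - mu) @ R

def part_to_world(local, R, mu):
    """Inverse map: R x_local + mu."""
    return local @ R.T + mu

# One Gaussian, two time steps: the pose changes, the scale is shared (toy values).
scale = np.array([0.3, 0.1, 0.05])
R0, mu0 = rotation_z(0.0), np.zeros(3)
R1, mu1 = rotation_z(np.pi / 2), np.array([1.0, 0.0, 0.0])

# A point riding on the part at time 0 is carried to time 1 via the local frame.
p0 = np.array([[0.3, 0.0, 0.0]])
p1 = part_to_world(world_to_part(p0, R0, mu0), R1, mu1)
```

Because the point is stored in the part’s own frame, moving it to another time step only requires that step’s rotation and center, which is what lets observations from different frames be fused.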

What makes this representation so practical is how segmentation happens. For a given observed point x at a particular time step, the method assigns it to the Gaussian with the smallest Mahalanobis distance, measured against that Gaussian’s current location and shape. In other words, a point is labeled not by a tie to a specific surface patch, but by how well it fits into one part’s moving distribution. To keep this assignment differentiable in the optimization loop, the authors use a softmax relaxation that avoids hard, brittle decisions during learning. This approach turns the segmentation problem into a continuous optimization over Gaussian parameters, which is then tied to the other frames by transforming points from one time step to another using each Gaussian’s pose. The key is fusion: all time steps can be fused into a single composite view, allowing information from frames with partial views to reinforce the model as a whole.
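The assignment rule can be sketched in a few lines of NumPy. This is an illustration under assumptions: the `temperature` parameter and the function names are hypothetical, and the article does not specify the exact form of the softmax the authors use.

```python
import numpy as np

def mahalanobis_sq(points, mu, R, scale):
    """Squared Mahalanobis distance of each point (N, 3) to one Gaussian."""
    local = (points - mu) @ R            # rotate into the Gaussian's frame
    return np.sum((local / scale) ** 2, axis=1)

def soft_assign(points, mus, Rs, scales, temperature=1.0):
    """Softmax over negative squared distances: one row of part weights per point."""
    d = np.stack(
        [mahalanobis_sq(points, mu, R, s) for mu, R, s in zip(mus, Rs, scales)],
        axis=1,
    )                                     # shape (N, K)
    logits = -d / temperature             # temperature is an assumed knob
    logits -= logits.max(axis=1, keepdims=True)   # numerical stability
    w = np.exp(logits)
    return w / w.sum(axis=1, keepdims=True)

# Two toy Gaussians with identity rotations, and two points near each center.
mus = [np.zeros(3), np.array([2.0, 0.0, 0.0])]
Rs = [np.eye(3), np.eye(3)]
scales = [np.full(3, 0.5), np.full(3, 0.5)]
points = np.array([[0.1, 0.0, 0.0], [1.9, 0.0, 0.0]])

weights = soft_assign(points, mus, Rs, scales)
labels = weights.argmax(axis=1)           # hard labels, used only for inspection
```

During optimization the soft weights would be used so gradients can flow to the Gaussian parameters; taking the argmax recovers the nearest-Gaussian rule described above.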

The method doesn’t just model motion; it models motion in a way that mirrors how we understand the world. The losses guiding the optimization are a mix of classic and novel ideas. A maximum-likelihood loss nudges the Gaussians to explain the observed points well; a separation loss keeps Gaussians from smearing into each other so that each one remains a distinct part. Then there are global-structure terms: Chamfer distance and Earth mover’s distance encourage the transformed points across time to resemble the actual observations, while a flow loss ties the model’s predicted motion to the output of a pre-trained scene-flow predictor. All of this is wrapped in a single, end-to-end optimization loop that runs directly on raw 4D point clouds, without requiring a fixed, pre-labeled structure.
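Two of these terms are standard enough to sketch. The code below shows a Gaussian negative log-likelihood and a brute-force symmetric Chamfer distance in NumPy. Whether the authors use squared or unsquared distances, and how they weight the terms, is not stated in this article, so those details are assumptions, as are the function names.

```python
import numpy as np

def gaussian_nll(points, mu, R, scale):
    """Mean negative log-likelihood of points (N, 3) under one 3D Gaussian
    with covariance R diag(scale^2) R^T."""
    local = (points - mu) @ R
    maha = np.sum((local / scale) ** 2, axis=1)
    log_det = 2.0 * np.sum(np.log(scale))
    return np.mean(0.5 * (maha + log_det + 3.0 * np.log(2.0 * np.pi)))

def chamfer_distance(a, b):
    """Symmetric Chamfer distance with squared Euclidean distances (assumed form).
    Brute force O(N*M), fine for small clouds."""
    d2 = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return d2.min(axis=1).mean() + d2.min(axis=0).mean()

cloud_a = np.array([[0.0, 0.0, 0.0]])
cloud_b = np.array([[1.0, 0.0, 0.0]])
cd = chamfer_distance(cloud_a, cloud_b)
nll = gaussian_nll(cloud_a, np.zeros(3), np.eye(3), np.ones(3))
```

Note that Chamfer distance needs no point-to-point correspondence: each point simply looks for its nearest neighbor in the other cloud, which is why it suits irregularly sampled scans.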

Several design choices are essential for practicality. The model must decide how many Gaussians to use—the number of parts. Rather than fixing a single value, the authors run multiple initializations with different m (the number of Gaussians) and pick the one that minimizes a distortion criterion after convergence. The result is a method that can adapt to objects with varying numbers of parts, from a simple hinge to a multi-fingered hand. And because the Gaussians share scale across time, the model avoids exploding complexity while still capturing how parts move in three-dimensional space. This tight budgeting of parameters is what makes a robust, real-time-capable approach possible on imperfect data.
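The selection over m amounts to a small search loop. The article does not say how the distortion criterion is defined, so in this sketch both `fit` and `distortion` are hypothetical callables supplied by the caller, and the scores in the toy example are arbitrary placeholder numbers, not results from the paper.

```python
def select_num_parts(candidates, fit, distortion):
    """Fit one model per candidate m and keep the one with the lowest distortion.

    fit(m) -> model and distortion(model) -> float are assumed, user-supplied
    callables; the actual criterion used by the authors is not given here.
    """
    best_m, best_model, best_score = None, None, float("inf")
    for m in candidates:
        model = fit(m)                 # run the optimization to convergence
        score = distortion(model)      # evaluate only after convergence
        if score < best_score:
            best_m, best_model, best_score = m, model, score
    return best_m, best_model, best_score

# Toy stand-ins: placeholder scores, purely to exercise the loop.
toy_scores = {2: 0.41, 3: 0.12, 4: 0.19, 5: 0.27}
best_m, best_model, best_score = select_num_parts(
    candidates=toy_scores,
    fit=lambda m: {"m": m},
    distortion=lambda model: toy_scores[model["m"]],
)
```

The cost of this strategy is one full optimization per candidate, so the range of m is worth keeping narrow.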

From Surfaces to Joints: Inferring Structure

Building blocks are one thing; turning them into a coherent skeleton is another. Once a set of dynamic Gaussians, each representing a potential rigid part, is in place, the next challenge is to figure out how those parts relate to each other. The authors adopt a kinematic-tree approach, where edges connect parts that share a plausible one-degree-of-freedom (1-DOF) relationship. In practice, that means looking for pairs of parts whose relative poses move along a single axis, and whose spatial proximity supports a tight, meaningful connection. To decide which pairs belong together, two criteria are computed for each pair: a spatial closeness measure and a 1-DOF compatibility score across all time steps. The pairwise scores are then used as edge costs in a minimum-spanning-tree computation, which yields a plausible kinematic tree describing the object’s joint layout.
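The tree-building step can be illustrated with Prim’s algorithm over a combined cost matrix. This is a sketch under assumptions: the linear weighting with `weight`, the function name, and the toy cost matrices are all illustrative, and the authors’ exact scores are not reproduced here.

```python
import numpy as np

def kinematic_tree(spatial_cost, dof_cost, weight=0.5, root=0):
    """Minimum spanning tree (Prim's algorithm) over a weighted sum of two
    symmetric pairwise cost matrices. Returns {part: parent}, root -> None."""
    cost = weight * spatial_cost + (1.0 - weight) * dof_cost
    n = cost.shape[0]
    parent = {root: None}
    while len(parent) < n:
        best = None
        for i in parent:                       # parts already in the tree
            for j in range(n):                 # parts not yet attached
                if j not in parent and (best is None or cost[i, j] < best[0]):
                    best = (cost[i, j], i, j)
        parent[best[2]] = best[1]              # attach cheapest outside part
    return parent

# Toy 3-part chain: part 1 sits between parts 0 and 2 (made-up costs).
spatial = np.array([[0.0, 1.0, 5.0],
                    [1.0, 0.0, 1.0],
                    [5.0, 1.0, 0.0]])
one_dof = np.array([[0.0, 0.2, 0.9],
                    [0.2, 0.0, 0.1],
                    [0.9, 0.1, 0.0]])
tree = kinematic_tree(spatial, one_dof, weight=0.5, root=0)
```

Here low cost means "close together and well explained by a single-axis motion", so the spanning tree prefers to connect each part to its most plausible neighbor.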

Before constructing the tree, the method merges parts that are spatially close and whose relative motion is mostly static. Once a candidate set of parts remains, the pairwise metrics are computed, and the tree is assembled to minimize a weighted sum of spatial proximity and 1-DOF alignment. The result is a skeletal model that explains how the different Gaussian parts move in concert. Root parts—those with the least motion across frames—serve as anchors, and the rest of the parts are attached in a way that respects the constraints of screw-motion theory. The final stage tightens these joints by projecting Gaussian poses onto the inferred kinematic chain and refining with forward kinematics in a way that respects the single-DOF assumption for parent-child relationships. In effect, the model learns not just where each part is and how it moves, but how those moves are orchestrated by the object’s joint structure.
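Two ingredients of this stage lend themselves to a short sketch: choosing the root as the least-moving part, and examining the relative pose between a parent and a child. The motion score below, which simply adds rotation angles in radians to translation distances, is an assumed heuristic, and all function names are hypothetical.

```python
import numpy as np

def rotation_z(theta):
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def rotation_angle(R):
    """Rotation angle in radians, from the trace of a rotation matrix."""
    return np.arccos(np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0))

def rotation_axis(R, eps=1e-8):
    """Unit rotation axis, or None when the rotation is (near) identity."""
    v = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    norm = np.linalg.norm(v)
    return None if norm < eps else v / norm

def motion_score(Rs, mus):
    """Total rotation plus translation relative to the first frame (assumed metric)."""
    rot = sum(rotation_angle(R @ Rs[0].T) for R in Rs[1:])
    trans = sum(np.linalg.norm(mu - mus[0]) for mu in mus[1:])
    return rot + trans

def pick_root(parts):
    """parts is a list of (rotations, centers) per part; return the stillest one."""
    return min(range(len(parts)), key=lambda k: motion_score(*parts[k]))

def relative_pose(R_parent, mu_parent, R_child, mu_child):
    """Pose of the child expressed in the parent's frame."""
    return R_parent.T @ R_child, R_parent.T @ (mu_child - mu_parent)

static = ([np.eye(3)] * 3, [np.zeros(3)] * 3)
hinged = ([rotation_z(a) for a in (0.0, 0.3, 0.6)], [np.array([1.0, 0.0, 0.0])] * 3)
root = pick_root([static, hinged])

R_rel, t_rel = relative_pose(np.eye(3), np.zeros(3), rotation_z(np.pi / 2),
                             np.array([1.0, 0.0, 0.0]))
axis = rotation_axis(R_rel)
```

If the axis recovered from the relative rotation stays the same across time steps, the pair behaves like a revolute joint, which is the kind of 1-DOF evidence the tree construction relies on.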

The implications are subtle but powerful. Because the method is built around a data-driven, distribution-based description of parts and their motions, it can generalize to objects without relying on a fixed taxonomy or a predetermined kinematic blueprint. The same machinery that can describe a robot hand can also describe a cup on a lazy Sunday table—provided the data captures enough of its surface over time. That generality is what makes the approach appealing for real-world robotics and digital-twin applications, where objects come in all shapes and sizes and sensing conditions are rarely ideal.

Why It Matters for Robots and Real Worlds

The proof of concept rests on two benchmarks: RoboArt, a dataset of articulated robots with up to 15 parts, and Sapien, a diverse set of everyday objects. The authors evaluate their method in scenarios that rule out perfect, continuous observation. They test under view-based occlusions, where parts vanish from one frame to the next because they’re hidden from the camera, and under partial observations, where some surfaces never appear in a frame at all. The results are telling. Across a range of metrics, their Gaussian-based approach outperforms state-of-the-art methods that rely on establishing correspondences between points across frames. The improvements aren’t just incremental; in some cases, segmentation quality under occlusion improves by double digits, and the system remains robust even when several parts disappear for several frames.

Quantitatively, the method shows strong performance on RoboArt’s challenging sequences. It achieves lower reconstruction error, higher consistency in labeling parts over time, and better alignment of the inferred kinematic tree with the ground truth. When compared to other approaches that attempt to reconstruct articulated objects from 4D point clouds, the Gaussian framework consistently demonstrates that modeling how a surface distributes itself in space can be more informative than chasing the elusive “best matching point” across frames. The authors also test on Sapien, where the diversity of objects offers a stress test for category-agnostic reasoning. Here too, their approach holds its own against specialized, correspondence-driven models, showing that distribution-based motion modeling scales to a broader universe of real-world objects.

Beyond sheer accuracy, there is a practical payoff: the estimated model can re-articulate the object to unseen poses. In robotics, this is a superpower. A robot that can predict how a mechanism will look when it’s opened, closed, or reconfigured without needing new data is closer to the kind of flexible, adaptive understanding that humans display. The method also shines in the presence of occlusions. In the wild, you rarely get a clean, complete scan of a complex object. The approach’s ability to fuse information across time steps to fill in gaps makes it especially appealing for applications in robotics, virtual reality, and digital twin platforms where data is noisy, incomplete, or asynchronously acquired.
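Re-articulation is easiest to picture for a single revolute joint. The sketch below rotates a child part’s points about an estimated joint axis using Rodrigues’ formula. It handles only one hinge with no descendants, and the axis, pivot, and angle are made-up inputs; a full forward-kinematics pass over the tree would chain such transforms from the root outward.

```python
import numpy as np

def rodrigues(axis, angle):
    """Rotation matrix for a rotation of `angle` radians about `axis`."""
    axis = np.asarray(axis, dtype=float)
    axis = axis / np.linalg.norm(axis)
    K = np.array([[0.0, -axis[2], axis[1]],
                  [axis[2], 0.0, -axis[0]],
                  [-axis[1], axis[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)

def rearticulate(points, axis, pivot, angle):
    """Rotate child-part points (N, 3) about a hinge through `pivot`."""
    R = rodrigues(axis, angle)
    return (points - pivot) @ R.T + pivot

# Toy hinge: z axis through (1, 0, 0); swing the child part by 90 degrees.
child = np.array([[2.0, 0.0, 0.0]])
pivot = np.array([1.0, 0.0, 0.0])
moved = rearticulate(child, axis=[0.0, 0.0, 1.0], pivot=pivot, angle=np.pi / 2)
```

Once the joint axis and pivot are known, any angle can be plugged in, including ones never observed in the input sequence.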

Of course, no method is perfect. The researchers acknowledge that choosing the number of Gaussians a priori—m, the count of parts—remains a design decision, even if they provide a data-driven way to pick the best among several initializations. The current framework assumes an acyclic kinematic tree with 1-DOF connections; while many objects fit this bill, others do not. The authors suggest future directions, including motion-aware generative models to better fill in missing data and a more automatic mechanism for estimating the number of parts from scratch. Still, the core idea—a compact, probabilistic representation that jointly learns segmentation, motion, and structure from messy 4D scans—feels like a stepping stone toward more robust, generalizable digital twins of the physical world.

Why does this matter beyond the academic thrill? Because a distribution-based view of surface parts aligns with a broader trend in computer vision and robotics: move away from brittle point-tracking and toward reasoning about latent structure and motion, even when data are imperfect. It’s a philosophy you can imagine applied to warehouse robots sorting a jumble of parts, to service robots manipulating household objects with partial views, or to augmented-reality systems that need to predict how a door, a chair, or a toy might move when interacted with. The approach stitches together ideas from classical statistics (Gaussian mixtures and maximum likelihood) with modern learning signals (scene flow and differentiable optimization) to deliver a method that is both theoretically grounded and practically robust.

What’s Next? A Fortuitous Turning Point

As with many innovations at the edge of theory and practice, the real payoff will come from how this idea is adopted and extended. The Gaussian-as-parts framework invites new directions: could we drop the fixed-part assumption altogether and let a neural predictor decide how many dynamic Gaussians are needed? Could we relax the 1-DOF constraint to accommodate more complex joint configurations or even non-rigid deformations while preserving the core benefit of robust, distribution-driven segmentation? And how might this approach integrate with real-time perception stacks in industrial robotics, where latency and reliability are non-negotiable?

One thing is clear: the authors have shown a compelling alternative to correspondence-centric methods, one that leverages the geometry of distributions to pull meaningful structure from noisy, incomplete data. It’s a reminder that sometimes the most honest way to understand motion is not by chasing the same surface points from frame to frame, but by watching how a small set of probabilistic building blocks drift and rotate together across time. In a world full of occlusions, misalignments, and asynchronous sensors, that perspective might be just what we need to keep our digital twins honest to their real-world counterparts.

The study’s collaboration across the University of Minnesota and the University of Texas at Austin signals a healthy cross-pollination of ideas from computer vision, robotics, and applied mathematics. The lead researchers—Jun-Jee Chao and Qingyuan Jiang at Minnesota, supported by Volkan Isler at UT Austin—have given the field a fresh lens for a longstanding problem: how to infer the hidden mechanics of an object from the imperfect snapshots we can actually collect. And while the road ahead is not without its caveats, the path forward now looks a little clearer, a little more human, and a lot more mathematical in the right way: by letting a few well-placed Gaussians carry the load of motion, we may learn the shape of what we cannot fully see.