SEPHI 2.0 rewrites the odds on habitable worlds

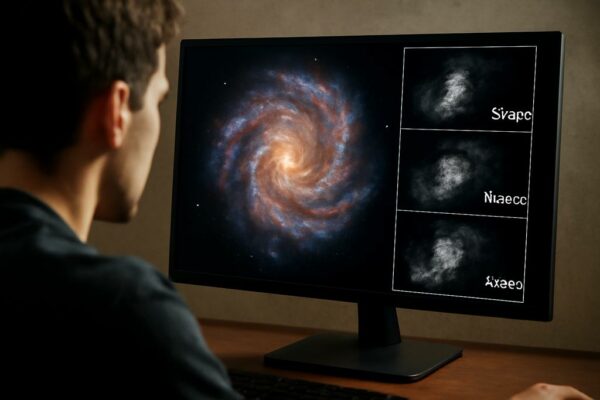

The search for life beyond Earth has evolved from a scavenger hunt for Earth twins into a careful weighing of distant worlds. Astronomers now wield a probabilistic compass that guides where to look next: SEPHI 2.0. Developed by J. M. Rodríguez-Mozos and A. Moya at the Universitat de València, this…