The Enigma of Sparse Matrices

Imagine a vast, intricate web, its threads connecting countless nodes. This network isn’t made of silk or steel, but of mathematical relationships encoded in a matrix: a grid of numbers. These matrices, the backbone of many calculations in computer science and beyond, can be dense, bursting with information, or sparse, with a smattering of nonzero numbers amid a sea of zeros. This sparsity, it turns out, makes it surprisingly hard to understand how these structures behave, particularly when the numbers are chosen at random.

A Question of Stability

In linear algebra, the condition number of a matrix is a key measure of its numerical stability. Think of it like the resilience of a bridge under stress: a high condition number signals a fragile structure, one that even small errors can throw off balance. Concretely, the condition number is the ratio of a matrix’s largest singular value (its strength) to its smallest singular value (its weakness). When solving a linear system Ax = b, a small relative error in b can be amplified by up to this factor in the computed solution, and a smallest singular value of zero means the matrix is singular and the condition number is infinite. Understanding the smallest singular value is therefore crucial for assessing the stability of systems built on these matrices, which appear in machine learning, signal processing, and the stability analysis of numerical algorithms.
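
To make the ratio concrete, here is a minimal NumPy sketch (our own illustration, not taken from the paper). It computes the condition number from the singular values and checks the textbook bound ‖δx‖/‖x‖ ≤ κ(A)·‖δb‖/‖b‖ for a linear system Ax = b. The function names and the nearly singular test matrix are hypothetical choices made for the example.

```python
import numpy as np

def condition_number(A: np.ndarray) -> float:
    """2-norm condition number: largest singular value over smallest."""
    s = np.linalg.svd(A, compute_uv=False)  # singular values, descending
    if s[-1] == 0.0:
        return float("inf")  # exactly singular matrix
    return float(s[0] / s[-1])

def error_amplification(A: np.ndarray, b: np.ndarray, db: np.ndarray) -> float:
    """Relative change in the solution of Ax = b divided by the relative change in b."""
    x = np.linalg.solve(A, b)
    dx = np.linalg.solve(A, b + db) - x
    rel_out = np.linalg.norm(dx) / np.linalg.norm(x)
    rel_in = np.linalg.norm(db) / np.linalg.norm(b)
    return float(rel_out / rel_in)

# A nearly singular 2x2 matrix: its rows are almost parallel.
A = np.array([[1.0, 1.0], [1.0, 1.0001]])
b = np.array([2.0, 2.0001])   # exact solution is x = (1, 1)
db = np.array([0.0, 1e-4])    # a tiny perturbation of b

kappa = condition_number(A)          # roughly 4e4
amp = error_amplification(A, b, db)  # bounded above by kappa
print(f"kappa = {kappa:.1f}, amplification = {amp:.1f}")
```

A perturbation of one part in ten thousand in b moves the solution from (1, 1) to roughly (0, 2), which is exactly the kind of fragility a large condition number warns about.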

The Unexpected Order in Randomness

When the entries of a matrix are chosen at random, the question of the smallest singular value becomes a delicate dance between chaos and order. For an n × n matrix with entries drawn from a standard normal distribution (think of a bell curve), the smallest singular value is well understood: it is typically of order 1/√n. Sparse matrices, those with many zeros, are far harder to pin down. With discrete entries, a sparse matrix can even be exactly singular with non-negligible probability, as happens whenever an entire row is zero. The challenge lies in accurately estimating the probability that the smallest singular value falls below a given threshold. This seemingly esoteric question has significant implications for applications that rely on the stability of these matrices.
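
The contrast can be explored with a small Monte Carlo experiment; this is our own sketch, not the paper’s method. It estimates P(s_n(A) ≤ ε/√n) for Gaussian matrices and for a hypothetical sparse sign model in which each entry is +1 or −1 with probability `density`/2 each and 0 otherwise. For Gaussian matrices this probability is known to be of order ε; for very sparse discrete matrices it also picks up an ε-independent contribution from matrices that are exactly singular. The sizes, seed, and density are arbitrary choices.

```python
import numpy as np

def smallest_singular_value(A):
    # np.linalg.svd returns singular values in descending order.
    return float(np.linalg.svd(A, compute_uv=False)[-1])

def small_sv_probability(sampler, n, eps, trials, rng):
    """Monte Carlo estimate of P(s_n(A) <= eps / sqrt(n))."""
    threshold = eps / np.sqrt(n)
    hits = sum(smallest_singular_value(sampler(n, rng)) <= threshold
               for _ in range(trials))
    return float(hits / trials)

def gaussian_matrix(n, rng):
    return rng.standard_normal((n, n))

def sparse_sign_matrix(n, rng, density):
    # Hypothetical sparse model: +1 or -1 with probability density/2 each, else 0.
    mask = rng.random((n, n)) < density
    signs = rng.choice([-1.0, 1.0], size=(n, n))
    return mask * signs

rng = np.random.default_rng(0)
n, eps, trials = 50, 0.1, 200
p_gauss = small_sv_probability(gaussian_matrix, n, eps, trials, rng)
p_sparse = small_sv_probability(
    lambda m, r: sparse_sign_matrix(m, r, density=0.05), n, eps, trials, rng)
print(f"Gaussian: {p_gauss:.3f}, sparse (density 0.05): {p_sparse:.3f}")
```

At density 0.05 and n = 50, each row has only about 2.5 nonzero entries on average, so a zero row, and hence an exactly singular matrix, is common.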

A New Framework for Sparse Randomness

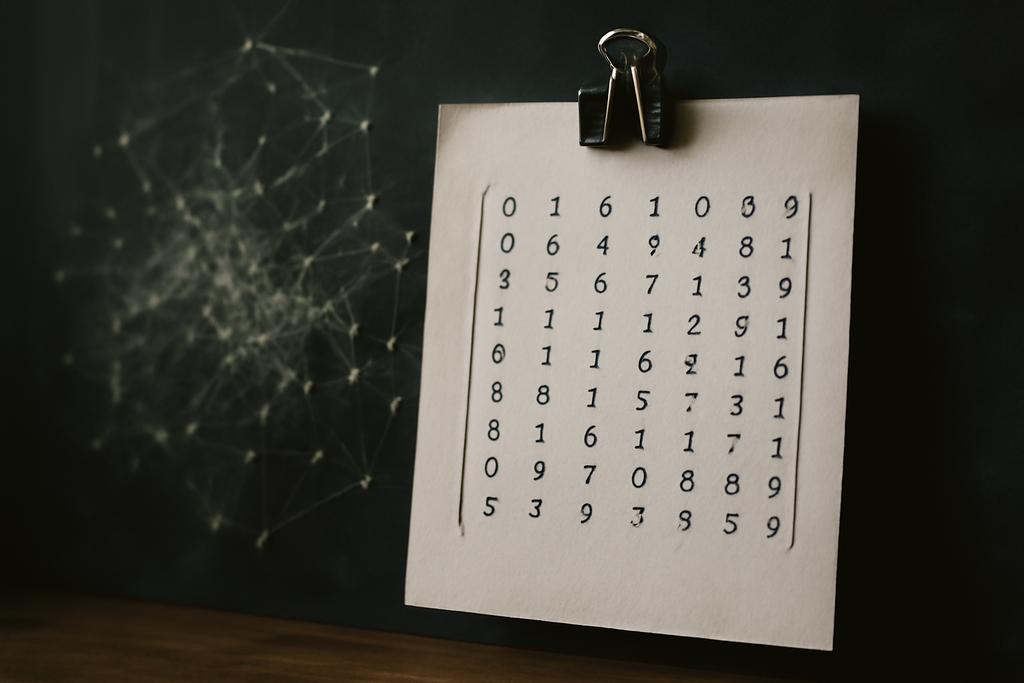

Kexin Yu of Shandong University tackles this challenge head-on in the recent paper “The smallest singular value of sparse discrete random matrices.” Yu develops a new mathematical framework for the problem, focusing on sparse matrices whose entries are discrete random variables rather than continuous ones such as normally distributed variables. These discrete random variables are described as ‘µ-lazy,’ where µ is the probability that a given entry is nonzero; the smaller µ is, the sparser the matrix.
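
A minimal sampler for such entries is sketched below. It assumes a common convention for lazy variables in the literature on discrete random matrices: the value is 0 with probability 1 − µ and +1 or −1 with probability µ/2 each. The paper’s exact definition may differ, and the function names are hypothetical. Under this convention each entry has mean 0 and variance µ, which the usage lines check empirically.

```python
import numpy as np

def lazy_sample(mu, size, rng):
    """Draw mu-lazy values: 0 w.p. 1 - mu, and +1 or -1 w.p. mu/2 each.

    The +/-1 convention is an assumption; the paper's definition may differ.
    """
    if not 0.0 <= mu <= 1.0:
        raise ValueError("mu must lie in [0, 1]")
    nonzero = rng.random(size) < mu
    signs = rng.choice(np.array([-1, 1]), size=size)
    return np.where(nonzero, signs, 0)

def lazy_matrix(n, mu, rng):
    """An n x n matrix with independent mu-lazy entries."""
    return lazy_sample(mu, (n, n), rng)

rng = np.random.default_rng(1)
xs = lazy_sample(0.2, 100_000, rng)
print("fraction nonzero:", np.mean(xs != 0))      # close to mu = 0.2
print("mean:", xs.mean(), "variance:", xs.var())  # close to 0 and mu
```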

Beyond Fixed Sparsity: A Dynamic Approach

Previous work on the smallest singular value of random matrices often assumed a fixed level of sparsity, that is, a constant µ. Yu’s contribution is to extend the analysis to the case where µ = µ_n depends on the matrix size n and may shrink as n grows, which gives a more nuanced picture of how sparsity matters. This matters because the behavior of sparse random matrices can depend strongly on the relationship between sparsity and matrix size.
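
One elementary way to see why the rate at which µ shrinks matters is shown below. This is our own illustration, not an argument from the paper. A matrix with an all-zero row is singular, so its smallest singular value is 0. With independent µ-lazy entries, a fixed row is entirely zero with probability (1 − µ)^n, and distinct rows are independent. The sketch evaluates the exact probability that at least one row vanishes under a hypothetical schedule µ_n = c·log(n)/n. Since (1 − µ_n)^n ≈ n^(−c), the expected number of zero rows is about n^(1−c), so zero rows are typical for c < 1 and rare for c > 1.

```python
import math

def prob_some_zero_row(n: int, mu: float) -> float:
    """Exact P(at least one all-zero row) for an n x n matrix of mu-lazy entries."""
    p_row_zero = (1.0 - mu) ** n          # every entry of a fixed row is zero
    return 1.0 - (1.0 - p_row_zero) ** n  # rows are independent

def mu_schedule(n: int, c: float) -> float:
    """Hypothetical sparsity schedule mu_n = c * log(n) / n, capped at 1."""
    return min(1.0, c * math.log(n) / n)

for c in (0.5, 2.0):
    for n in (100, 1_000, 10_000):
        mu = mu_schedule(n, c)
        print(f"c={c}, n={n}: mu={mu:.2e}, "
              f"P(zero row)={prob_some_zero_row(n, mu):.4f}")
```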

The ‘High Concentration ⇒ Strong Structure’ Principle

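A central theme in this area is the interplay between concentration and structure. Consider a random sum a_1ξ_1 + … + a_nξ_n, where a = (a_1, …, a_n) is a fixed coefficient vector and the ξ_i are independent random variables. Such sums arise naturally: each coordinate of Ax is a sum of this form, with the entries of a row of A playing the role of the ξ_i and x playing the role of a. Intuitively, if such a sum is highly concentrated, landing in some small ball with unusually large probability, then the coefficient vector must have strong additive structure, for example by having most of its coordinates in a short generalized arithmetic progression. Yu’s work not only confirms this intuition for sparse µ_n-lazy random variables, where µ_n tends to zero as the matrix size n increases, but also shows how this shrinking sparsity affects both the small ball probability and the smallest singular value. This builds on the seminal work of Tao and Vu and extends it to a new range of sparsity.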
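
The principle can be checked numerically in a toy setting. This is our own sketch, assuming integer coefficients and the 0 / ±1 convention for µ-lazy variables; the paper works with real coefficients and small balls of positive radius. For integer coefficients, S = a_1ξ_1 + … + a_nξ_n takes integer values, so its exact distribution can be built one term at a time. The largest point probability, max_x P(S = x), measures concentration. A highly structured vector such as (1, 1, …, 1) concentrates far more than the spread-out progression (1, 2, …, n).

```python
from collections import defaultdict

def lazy_sum_distribution(coeffs, mu):
    """Exact law of S = sum_i a_i * xi_i for integer a_i and mu-lazy xi_i.

    Assumes xi_i = 0 w.p. 1 - mu and +1 or -1 w.p. mu/2 each.
    Returns a dict mapping each attainable value of S to its probability.
    """
    dist = {0: 1.0}
    for a in coeffs:
        nxt = defaultdict(float)
        for s, p in dist.items():
            nxt[s] += p * (1.0 - mu)  # xi_i = 0
            nxt[s + a] += p * mu / 2  # xi_i = +1
            nxt[s - a] += p * mu / 2  # xi_i = -1
        dist = dict(nxt)
    return dist

def concentration(coeffs, mu):
    """Largest point probability max_x P(S = x)."""
    return max(lazy_sum_distribution(coeffs, mu).values())

n, mu = 10, 0.5
structured = [1] * n                # all coefficients equal
spread_out = list(range(1, n + 1))  # the progression 1, 2, ..., n
print("structured:", concentration(structured, mu))
print("spread out:", concentration(spread_out, mu))
```

This exact convolution grows with the range of attainable sums, so it only suits small integer examples; it illustrates the idea rather than the techniques used in the proofs.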

Technical Depth and Broader Significance

The mathematics behind Yu’s work draws on probability theory, linear algebra, and combinatorics. The tools include the least common denominator (LCD) of vectors, Halász’s inequality (extended here to the sparse setting), and new counting arguments for sparse integer vectors. Because the smallest singular value of A equals the minimum of ‖Ax‖ over all unit vectors x, the proofs carefully partition the unit sphere into classes of vectors and analyze the behavior of these random matrices on each class separately.
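
Two ingredients of this kind of argument are sketched below, following the standard Rudelson–Vershynin approach, which we assume serves as the template here; the paper’s exact definitions and constants may differ. The sphere is split into “compressible” unit vectors, those within distance ρ of a vector with at most δn nonzero coordinates, and “incompressible” ones. For the latter, the LCD with parameters α > 0 and γ ∈ (0, 1) is LCD_{α,γ}(a) = inf{θ > 0 : dist(θa, ℤ^n) < min(γ‖θa‖₂, α)}, which measures how large a multiple of a must be before it nearly lands on the integer lattice. The grid search only approximates this infimum, and all parameter values are hypothetical.

```python
import numpy as np

def dist_to_sparse(x, delta):
    """Distance from x to the set of vectors with at most floor(delta * n) nonzeros."""
    x = np.asarray(x, dtype=float)
    k = int(np.floor(delta * x.size))
    # The best k-sparse approximation keeps the k largest |x_i|;
    # the error is the norm of all remaining coordinates.
    smallest = np.sort(np.abs(x))[: x.size - k]
    return float(np.linalg.norm(smallest))

def is_compressible(x, delta, rho):
    """A unit vector x is (delta, rho)-compressible if it is rho-close to a sparse vector."""
    return dist_to_sparse(x, delta) <= rho

def approx_lcd(a, alpha, gamma, theta_max, step=1e-3):
    """Grid-search approximation of LCD_{alpha,gamma}(a); inf if none found below theta_max."""
    a = np.asarray(a, dtype=float)
    for theta in np.arange(step, theta_max, step):
        v = theta * a
        dist_to_lattice = np.linalg.norm(v - np.round(v))  # distance to Z^n
        if dist_to_lattice < min(gamma * np.linalg.norm(v), alpha):
            return float(theta)
    return float("inf")

n = 10
spike = np.eye(n)[0]            # e_1: as sparse as possible
flat = np.ones(n) / np.sqrt(n)  # spread evenly over all coordinates
print(is_compressible(spike, delta=0.1, rho=0.5))  # True
print(is_compressible(flat, delta=0.1, rho=0.5))   # False
print(approx_lcd(flat, alpha=0.1, gamma=0.5, theta_max=10.0))
```

The flat vector is incompressible yet highly structured: its LCD is only about √n, because √n·a is an integer vector. Vectors with a small LCD are the ones for which the concentration argument has to work hardest.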

But the significance extends far beyond the technical intricacies of the proofs. Understanding the properties of sparse random matrices is paramount for the development of robust algorithms in numerous fields. The research provides a deeper understanding of how these seemingly chaotic systems behave and allows for a more accurate assessment of their stability and reliability. This is especially crucial for applications involving massive datasets and high-dimensional computations, where sparsity is often a defining characteristic.

Looking Ahead: Refining the Tools

Yu’s work opens several promising avenues for future research. One key direction is to refine the tools used to analyze the effect of sparsity, particularly the partitioning of the unit sphere. Concepts such as the ‘degree of unstructuredness,’ used by other researchers, might offer a more precise approach than the LCD employed in the present work. Another challenge is to extend these findings to symmetric sparse matrices, which introduce additional complications because their rows are not independent: each entry above the diagonal is mirrored by one below it.

The Human Element in Mathematical Discovery

Ultimately, the impact of Yu’s work lies not just in its technical contribution, but in the larger narrative it adds to our understanding of randomness. It reveals subtle patterns within apparent chaos, demonstrating that even in highly disordered systems, certain structural features play a crucial role in shaping their overall behavior. It’s a testament to the power of mathematical exploration to illuminate the hidden order embedded within the seemingly random processes that govern the world around us.