The scale of modern language models can feel like watching a glacier slide: immense, intricate, and almost impossibly heavy. These giants—open or closed—are built from billions of parameters, tuned to predict the next word with uncanny fluency. But there’s a catch that scientists have wrestled with for years: the bigger the model, the heavier the hardware bill to run it. The obvious path to practicality isn’t to grow more compact designs from scratch, but to shrink the footprint of the models we already have without trimming away their smarts. That’s where the art and science of quantization come in—a way of dialing down precision to save memory and computation while keeping performance close to the original. The paper Precision Where It Matters, from a collaboration between Université Paris-Saclay’s CEA LIST and Université Grenoble Alpes along with its Inria and CNRS partners, dives into this problem with a twist: not all spikes in activations are created equal, and a tiny, architecture-specific insight can unlock big gains in how we quantize LLaMA-based models.

Led by Lucas Maisonnave and Cyril Moineau, with Olivier Bichler and Fabrice Rastello among the authors, the study asks a practical question with theoretical zing: if activation outliers—the jaw-droppingly large values that pop up in neural nets—aren’t distributed evenly, could we tailor precision where it actually matters? Their answer focuses on LLaMA-like models and a clever, targeted approach that uses mixed precision to tame the spikes without paying a heavy accuracy tax. The result isn’t just an incremental improvement; it’s a blueprint for making large language models more deployable in environments with tight memory, limited power, or strict latency constraints.

The Hidden Culprits of Quantization

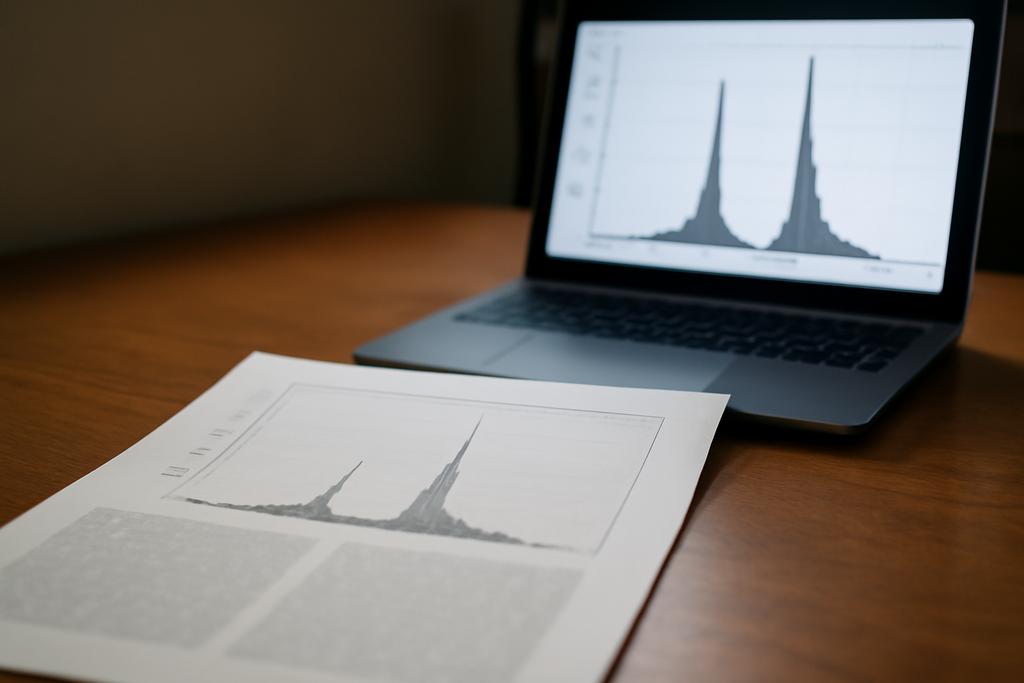

To grasp the novelty, you first have to understand the friction between two stubborn truths in model quantization. On the one hand, shrinking the number of bits dramatically reduces the memory required for weights and activations and speeds up inference. On the other hand, activations in large language models aren’t a smooth hill but a jagged skyline, full of spikes that can blow a quantization scheme off a cliff. A common shortcut has been to assume that outliers behave similarly across architectures, or that a few “bad” channels carry most of the spike load. The reality, the authors argue, is messier and more architecture-specific than that, especially in LLaMA-like decoders, where spikes aren’t uniformly distributed and aren’t always tied to a single place in the network.
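To make the “jagged skyline” concrete, here is a minimal sketch of symmetric per-tensor INT8 quantization in plain Python. It is not the authors’ code; the helper names and the toy activation values are illustrative assumptions. One scale is shared by the whole tensor, so a single spike inflates that scale and wipes out the resolution available to every ordinary value.

```python
# Simulated symmetric per-tensor INT8 quantization: one scale for the whole
# tensor, chosen so that the largest magnitude maps to 127.
def quantize_per_tensor_int8(values):
    peak = max(abs(v) for v in values)
    scale = peak / 127.0 if peak > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    return [x * scale for x in q]


def mean_abs_error(reference, approx):
    return sum(abs(a - b) for a, b in zip(reference, approx)) / len(reference)


# Synthetic activations of ordinary size, then the same values plus one spike.
ordinary = [0.5, -1.2, 0.8, 0.3, -0.7, 1.1, -0.4, 0.9]
spiky = ordinary + [2000.0]

for label, acts in [("no spike", ordinary), ("one spike", spiky)]:
    q, scale = quantize_per_tensor_int8(acts)
    recon = dequantize(q, scale)
    # Error is measured on the ordinary entries only: the collateral damage.
    err = mean_abs_error(ordinary, recon[: len(ordinary)])
    print(f"{label}: scale={scale:.4f}, error on ordinary values={err:.4f}")
```

With the spike present, the scale jumps from about 0.0094 to about 15.7, every ordinary value rounds to zero, and the error on those values equals their mean magnitude (about 0.74). Without the spike, the error stays below half a quantization step.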

As the paper digs into the literature and its own experiments, it becomes clear that activation spikes aren’t a single problem with a one-size-fits-all fix. In OPT-like architectures, some projection layers may escape spikes altogether; in LLaMA-style models, the spikes concentrate in a couple of projection layers and in the inputs to normalization layers. There is a surprising twist: in LLaMA, the normalization step can dampen spikes rather than amplify them, which runs counter to some prior expectations. The team explains that in LLaMA-like architectures, the down projection at the end of the feed-forward block, which follows the attention block in each decoder layer, tends to be the loudest offender, and its spikes can propagate through the residual connections unless they are checked at the right moment. That’s the key observation that makes their approach sing: if you know where to place your extra precision, you can quiet the problem without muting the model’s intelligence. It’s like tuning a guitar: you don’t need to tighten every string equally; you simply adjust the strings that actually carry the melody.
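Localizing spikes starts with measuring them. The sketch below is a hypothetical diagnostic, not the paper’s procedure: it flags layers whose peak input magnitude is far larger than their median magnitude. In a real pipeline one might collect these inputs with PyTorch forward hooks over calibration text; here the dictionary holds synthetic numbers, the layer names only mimic Hugging Face LLaMA module naming, the spike locations are arbitrary, and the 100x threshold is an assumption.

```python
import statistics


def spike_report(layer_inputs, ratio_threshold=100.0):
    """Flag layers whose peak |activation| dwarfs their median |activation|.

    layer_inputs maps a layer name to a flat list of input activations.
    Returns (name, peak, ratio) tuples, loudest layer first.
    """
    flagged = []
    for name, acts in layer_inputs.items():
        mags = [abs(a) for a in acts]
        peak = max(mags)
        typical = statistics.median(mags)
        ratio = peak / typical if typical > 0 else float("inf")
        if ratio >= ratio_threshold:
            flagged.append((name, peak, ratio))
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Synthetic inputs; which layers contain spikes is an arbitrary toy choice.
layer_inputs = {
    "model.layers.0.self_attn.o_proj": [0.2, -0.5, 0.9, -0.3],
    "model.layers.1.mlp.down_proj": [0.4, -0.6, 1500.0, 0.2],
    "model.layers.2.mlp.down_proj": [0.7, -0.2, 0.5, -0.8],
    "model.layers.3.mlp.down_proj": [0.3, 900.0, -0.4, 0.6],
}

for name, peak, ratio in spike_report(layer_inputs):
    print(f"{name}: peak={peak:.1f}, peak/median={ratio:.0f}")
```

The median is used as the “typical” magnitude because, unlike the mean, a single huge value barely moves it.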

Another important nuance appears in a practical tweak: the BOT token, the special beginning-of-text token that opens every input sequence, often shows a pronounced spike. The authors test what happens if they leave the BOT token unquantized while quantizing the rest, and the perplexity (a measure of how uncertain the model is about its next word) improves markedly for LLaMA models. It’s a small, surgical intervention with a surprisingly big payoff, underscoring how a single architectural quirk can ripple through the whole inference pipeline. This isn’t merely a curiosity; it’s a concrete, deployable signal that the spike problem can be localized and managed rather than eliminated wholesale.
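A minimal sketch of the BOT-token trick, assuming a simulated per-tensor INT8 scheme over a matrix of token activations (one row per token, with the BOT token in row 0). The function names and the activation values are hypothetical. Excluding row 0 both keeps it exact and removes its spike from the scale computation, which is where most of the benefit comes from in this toy.

```python
def absmax_scale(rows):
    """Per-tensor scale: the largest magnitude across all rows maps to 127."""
    peak = max(abs(v) for row in rows for v in row)
    return peak / 127.0 if peak > 0 else 1.0


def fake_quant_int8(rows, scale):
    """Quantize-then-dequantize every value with one shared scale."""
    return [[max(-127, min(127, round(v / scale))) * scale for v in row] for row in rows]


def quantize_all(rows):
    return fake_quant_int8(rows, absmax_scale(rows))


def quantize_skip_bot(rows):
    # Row 0 (the BOT token) stays in full precision and does not
    # influence the scale shared by the remaining token rows.
    rest = rows[1:]
    return [list(rows[0])] + fake_quant_int8(rest, absmax_scale(rest))


def error_on_text_tokens(original, recon):
    diffs = [abs(a - b) for ro, rr in zip(original[1:], recon[1:]) for a, b in zip(ro, rr)]
    return sum(diffs) / len(diffs)


acts = [
    [1800.0, -0.3, 0.2, 0.1],  # BOT token: one huge channel (synthetic)
    [0.4, -0.9, 0.6, 0.2],
    [-0.5, 0.7, -0.1, 0.8],
    [0.3, 0.2, -0.6, -0.4],
]

print("quantize all :", error_on_text_tokens(acts, quantize_all(acts)))
print("skip BOT row :", error_on_text_tokens(acts, quantize_skip_bot(acts)))
```

Quantizing everything together lets the synthetic BOT spike set the scale, so the ordinary text-token values collapse to zero; skipping the BOT row restores a fine-grained scale for them.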

A Targeted Trick for a Big Problem

If the spike problem is localized, the obvious move is to tailor the precision to where the spikes live. That’s exactly what the authors do in their mixed-precision LLaMA pipeline. They observe that in LLaMA-like models, spikes crowd into two projection layers: the down projections in the early blocks and certain output projections later on. Rather than quantizing the entire network uniformly to eight bits, they propose a two-pronged strategy: keep those spike-prone projections at higher precision (FP16 or FP8) and quantize the rest with standard low-precision integer formats. The rest, in other words, can be crunched aggressively while the critical choke points keep their clarity.
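One way to express such a policy is a simple lookup from layer name to numeric format. The sketch below is a hypothetical configuration helper, not an API from the paper or from any library: the layer names follow Hugging Face LLaMA module naming, the format labels are invented strings, and which layers count as spike-prone is an input you would supply from your own measurements (the two chosen here are arbitrary).

```python
from collections import Counter

# The seven linear projections in each LLaMA-style decoder block.
PROJECTIONS = [
    "self_attn.q_proj", "self_attn.k_proj", "self_attn.v_proj", "self_attn.o_proj",
    "mlp.gate_proj", "mlp.up_proj", "mlp.down_proj",
]


def layer_names(n_layers):
    return [f"model.layers.{i}.{p}" for i in range(n_layers) for p in PROJECTIONS]


def precision_plan(names, spike_prone, high_precision="fp8_e5m2"):
    """Map each linear layer to a (hypothetical) precision label."""
    if high_precision not in {"fp16", "fp8_e5m2"}:
        raise ValueError(f"unsupported high-precision format: {high_precision}")
    unknown = set(spike_prone) - set(names)
    if unknown:
        raise KeyError(f"spike-prone layers not found in model: {sorted(unknown)}")
    return {n: (high_precision if n in spike_prone else "int8_per_tensor") for n in names}


names = layer_names(4)  # a toy 4-block model
spike_prone = {"model.layers.1.mlp.down_proj", "model.layers.3.mlp.down_proj"}
plan = precision_plan(names, spike_prone)
print(Counter(plan.values()))
```

Validating the spike-prone names against the model’s actual layers guards against a typo silently leaving a spiky layer in INT8.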

The neat twist is how they implement FP8 in a way that actually helps. FP8 comes in two main flavors, E5M2 and E4M3, which split the seven non-sign bits differently between exponent and mantissa. E5M2 spends more bits on the exponent, so its largest finite value is 57,344 versus 448 for the common E4M3 variant; the price is a coarser mantissa. The team found that E5M2 is an especially good match for these spike values, which can reach thousands in magnitude, while still allowing fast, practical computation. By assigning FP8 to the down projections that generate spikes and leaving the rest of the network to 8-bit per-tensor integer quantization, they achieve a synergy: accuracy is preserved where it counts while memory and compute stay frugal elsewhere. It’s a precision orchestra where only a few players need a louder instrument.
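The range-versus-resolution trade-off between the two FP8 flavors can be checked numerically. The sketch below simulates round-to-nearest for each format in plain Python; it is an illustration, not a bit-exact codec. Assumptions: saturation at the largest finite value instead of overflow to infinity or NaN, the OCP-style maxima of 448 for E4M3 and 57,344 for E5M2, and no per-tensor scale factor (real FP8 pipelines often apply one).

```python
import math
from typing import NamedTuple


class FP8Format(NamedTuple):
    name: str
    man_bits: int      # explicit mantissa (fraction) bits
    bias: int          # exponent bias
    max_finite: float  # largest finite representable magnitude


E5M2 = FP8Format("E5M2", man_bits=2, bias=15, max_finite=57344.0)
E4M3 = FP8Format("E4M3", man_bits=3, bias=7, max_finite=448.0)


def round_to_fp8(x, fmt):
    """Round x to the nearest value representable in fmt, saturating at max_finite."""
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    mag = min(abs(x), fmt.max_finite)
    # Exponent of the binade containing mag; values below the smallest normal
    # exponent share its spacing (the subnormal range).
    exp = max(math.floor(math.log2(mag)), 1 - fmt.bias)
    step = 2.0 ** (exp - fmt.man_bits)  # spacing between neighbors in this binade
    return sign * min(round(mag / step) * step, fmt.max_finite)


for value in (0.1, 3000.0):
    print(value, "E4M3 ->", round_to_fp8(value, E4M3), "E5M2 ->", round_to_fp8(value, E5M2))
```

In this simulation a spike of 3000 saturates to 448 in E4M3 but lands on 3072 in E5M2, while a small value such as 0.1 is represented more finely by E4M3 (0.1015625) than by E5M2 (0.09375). That is the trade-off the choice of E5M2 for spike-prone layers leans on.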

But the point isn’t simply to choose FP8 over FP16, or to raise precision across the board. The researchers also test what happens if they widen the scope to more layers or keep more of the network in FP16. The gains aren’t linear; there’s a sweet spot in which giving higher precision to a small, targeted set of projections yields outsized improvements. Their experiments across several models (LLaMA2-7B, LLaMA2-13B, LLaMA3-8B, and Mistral-7B) show that this architecture-informed mixed-precision strategy consistently outperforms general-purpose outlier-handling methods, especially at 8-bit per-tensor quantization. In other words, there’s a real empirical payoff to looking closely at the model’s internal quirks rather than applying a blunt quantization policy to everything.

To test the importance of localization, the authors run a provocative control: they apply FP16 to random down and out projections instead of the known spike-harboring ones. The results are telling—the random approach fails to buy the same performance gains as the targeted one. The conclusion is blunt and useful: the quantization challenge in LLaMA-like models isn’t a blanket crisis of outliers; it’s a map with a few red-hot corridors that matter most. If you don’t aim your precision there, you’re fighting with a headwind rather than riding a tailwind.
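The logic of the random-versus-targeted control can be reproduced in a toy setting. This is synthetic data, not the authors’ experiment: eight hypothetical layers receive Gaussian activations, two of them get a planted spike, and each unprotected layer pays a simulated per-tensor INT8 error while protected layers are assumed to be error-free. Protecting the spike-carrying layers removes almost all of the error; protecting two other layers barely helps unless the random draw happens to hit the same two.

```python
import random


def int8_error(acts):
    """Mean absolute error of simulated per-tensor absmax INT8 quantization."""
    scale = max(abs(a) for a in acts) / 127.0
    recon = [max(-127, min(127, round(a / scale))) * scale for a in acts]
    return sum(abs(a - r) for a, r in zip(acts, recon)) / len(acts)


def total_error(layers, protected):
    """Summed INT8 error; protected layers are assumed to stay in high precision."""
    return sum(int8_error(acts) for name, acts in layers.items() if name not in protected)


rng = random.Random(0)
layers = {}
for i in range(8):
    acts = [rng.gauss(0.0, 1.0) for _ in range(256)]
    if i in (1, 6):  # toy choice: plant one large spike in two layers
        acts[0] = 2000.0
    layers[f"layers.{i}.mlp.down_proj"] = acts

targeted = {"layers.1.mlp.down_proj", "layers.6.mlp.down_proj"}
random_pick = set(rng.sample(sorted(layers), 2))

print("targeted:", round(total_error(layers, targeted), 4))
print("random:  ", round(total_error(layers, random_pick), 4))
```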

And there’s a practical flourish that makes this approach believable in real systems: rather than testing only in the abstract, they evaluate it with formats that hardware accelerators actually support, such as FP8, alongside 8-bit per-tensor integer quantization. They also explore pushing a few more layers into FP8 or even higher precision, to see how far the benefits extend. The takeaway is pragmatic: you don’t need an exotic solver to get big wins. A careful, architecture-aware mix of FP8 and lower-precision quantization can unlock meaningful improvements without retraining or specialized tooling.

What This Means for the AI Ranch, Not Just the Lab

The stakes of this line of work aren’t merely academic. Large language models have become a resource-intensive feature of modern software ecosystems. Training costs are astronomically high, and inference costs can be a bottleneck for real-time services. Compression and quantization are among the few levers we have to push these colossal models toward practical, energy-conscious deployment. The authors remind us of the environmental footprint of cutting-edge models—the kind of footprint that has drawn headlines for recent LLaMA and related releases—and they frame their contribution as a piece of the broader puzzle: smarter, architecture-specific quantization is a meaningful, implementable way to shrink models without a corresponding hit to quality.

In their narrative, the two-projection insight becomes a design principle for the next generation of quantization pipelines. If a model’s activation spikes cluster in only a handful of layers, then you don’t need a universal, one-size-fits-all outlier handler. You can ride the general, per-tensor 8-bit regime for most of the network and reserve higher-precision crunching for the exact places where the math would otherwise go off the rails. In practice, that could translate into leaner inference engines, lower power usage, and the possibility of running more capable models on devices with limited memory or on edge servers with strict latency budgets. It’s not a fantasy of tiny models; it’s a pragmatic path to run big ones smarter.
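A quick back-of-the-envelope calculation shows why reserving higher precision for a couple of layers is cheap in memory. The sketch assumes the public LLaMA2-7B shape (hidden size 4096, MLP intermediate size 11008, 32 decoder blocks, 7 linear projections per block), counts only linear-layer weights, ignores embeddings, norms, and quantization-scale overhead, and hypothetically keeps two down projections at higher precision.

```python
# Assumed LLaMA2-7B-style shape (linear layers only).
HIDDEN = 4096
INTERMEDIATE = 11008
N_BLOCKS = 32


def linear_weight_counts():
    """Return (weights per decoder block, weights in one down projection)."""
    attention = 4 * HIDDEN * HIDDEN    # q, k, v, o projections
    mlp = 3 * HIDDEN * INTERMEDIATE    # gate, up, down projections
    return attention + mlp, HIDDEN * INTERMEDIATE


def average_bits(n_high, high_bits=16, low_bits=8):
    """Average bits per linear weight when n_high down projections use high_bits."""
    per_block, down_proj = linear_weight_counts()
    total = per_block * N_BLOCKS
    high = n_high * down_proj
    return (high * high_bits + (total - high) * low_bits) / total


print(f"all INT8:            {average_bits(0):.4f} bits/weight")
print(f"2 down_proj in FP16: {average_bits(2):.4f} bits/weight")
print(f"2 down_proj in FP8:  {average_bits(2, high_bits=8):.4f} bits/weight")
```

Keeping two down projections in FP16 raises the average from 8 to roughly 8.11 bits per linear weight, about a 1.4% increase; keeping them in FP8 instead costs no extra weight memory compared with INT8, since both use 8 bits.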

There’s also a timely reminder about the quirks of normalization in these networks. The authors report that, in LLaMA-like architectures, RMS normalization can actually dampen spike amplitudes, helping to keep the overall distribution manageable. This contrasts with some expectations from other architectures where normalization is seen as a potential amplifier of outliers. The takeaway is a humbler, more nuanced view: normalization isn’t a universal villain or hero; its effects depend on the surrounding architecture and the flow of activations. That nuance matters because it invites more careful, architecture-specific engineering rather than sweeping, cross-architecture prescriptions.
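A simple bound helps explain why RMSNorm can tame a spike, though it is an illustration and not the paper’s measurement. With y_i = g_i · x_i / sqrt(mean(x²) + eps), each squared entry satisfies x_i² ≤ Σ_j x_j² = d · mean(x²), so with unit gain every output has magnitude at most sqrt(d), however large the input spike. The sketch assumes d = 4096 and synthetic values; a learned gain g can scale outputs back up, so this bounds the normalized activations rather than guaranteeing small ones.

```python
import math


def rms_norm(x, gain=None, eps=1e-6):
    """y_i = g_i * x_i / sqrt(mean(x^2) + eps), the RMSNorm form used in LLaMA."""
    g = gain if gain is not None else [1.0] * len(x)
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [gi * v / rms for gi, v in zip(g, x)]


d = 4096
x = [2000.0] + [0.5] * (d - 1)  # synthetic token: one spiky channel
y = rms_norm(x)

print(f"input peak  = {max(map(abs, x)):.1f}")
print(f"output peak = {max(map(abs, y)):.2f} (bound sqrt(d) = {math.sqrt(d):.0f})")
```

The 2000-magnitude spike comes out just under 64, the sqrt(d) bound, while the ordinary channels of that same token shrink to about 0.016, a reminder that the spike still dominates its own token after normalization.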

Beyond the immediate results, the study illustrates a broader engineering principle: when you resist the urge to apply a blunt fix everywhere and instead tailor your approach to the model’s quirks, you get better outcomes at lower cost. The authors’ focus on the small set of projection layers that concentrate spikes is like finding the few choke points in a system: invest in precision right there, and the entire system breathes easier. This is what good engineering looks like in the wild: a balance of rigor, pragmatism, and a willingness to dig into the peculiarities of a specific design rather than chase a universal algorithm that pretends to fit all models.

The work is a reminder that progress in AI hardware and systems often happens not by reinventing math, but by listening closely to how a particular family of models behaves inside the silicon. It’s a note of optimism for developers who want to deploy strong language models where resources are scarce. It doesn’t pretend to solve every bottleneck, but it carves out a practical pathway toward leaner, more accessible AI that doesn’t demand a data center to churn out answers. As researchers continue to push toward ever more capable language models, the lesson from this paper is clear: sometimes the secret to bigger impact lies in the smallest, most precise adjustments, the kind you can implement with a handful of lines of code in the right spots. And sometimes, those spots are just two projection layers in an otherwise uniformly quantized network.

The study is a collaborative achievement from Université Paris-Saclay’s CEA LIST and Université Grenoble Alpes (with Inria, CNRS, Grenoble INP, and the LIG), led by Lucas Maisonnave and Cyril Moineau, with significant contributions from Olivier Bichler and Fabrice Rastello. It’s a reminder that the frontier of practical AI isn’t only about bigger models; it’s also about smarter, more surgical engineering that makes those models more usable, more affordable, and more Earth-friendly.

Looking Ahead: Where the Idea Goes from Here

While the results are encouraging, the authors are clear about the boundaries. The spike phenomenon isn’t identical across every architecture. Mistral, for example, shows a different distribution of spikes, which suggests that a universal, one-quantization-fits-all method remains out of reach. The ongoing challenge is to map the peculiar fault lines of other families and to forge similarly targeted strategies that respect the idiosyncrasies of each design. There’s room to pair this approach with complementary methods—tools like SmoothQuant or other outlier-aware techniques—creating a hybrid toolkit that can adapt to diverse models and deployment constraints.

There’s also appetite for deeper dives into the causes of spikes. Are they born during training, or do they emerge purely from architectural layout and data flow during inference? If we can unpack those root causes, we might not only quantify spikes more effectively but potentially reduce or relocate them upstream, as some other lines of research have proposed. In that sense, the current work is a practical milestone, not the final word. It shows a path, a method, and a mindset—look for architecture-specific fingerprints, treat them with precise care, and you can unlock substantial gains without abandoning what makes the model powerful in the first place.

Ultimately, the implications extend beyond academic curiosity. If engineers can routinely quantize LLaMA-like models to 8-bit per-tensor precision, with only a small handful of layers kept at higher precision, the cost of inference drops, latency shrinks, and on-device or near-edge AI becomes more tangible. That could unlock applications in mobile apps, robotics, or real-time language tools where waiting for a cloud server simply isn’t acceptable. Those two spike-prone layers become a doorway to a more accessible, responsible AI that still feels like the same model we came to rely on in the first place.