Imagine a world where complex problems, those that currently take hours or even days to solve using traditional computers, can be tackled in mere minutes. This isn’t science fiction; it’s the promise of a groundbreaking approach to constraint programming, a field at the heart of many technological advances, from scheduling and logistics to artificial intelligence. Research from the University of Udine and New Mexico State University, led by Enrico Santi, Agostino Dovier, Andrea Formisano, and Fabio Tardivo, shows how harnessing the power of GPUs can dramatically speed up the solving of these kinds of problems.

Constraint Programming: The Art of the Possible

Constraint programming is, at its core, a way of expressing problems as a set of variables and a set of constraints. Each variable has a domain of possible values. The constraints rule out values that cannot be part of any solution, guiding the search towards assignments that satisfy all conditions simultaneously. Think of it like a sophisticated logic puzzle where each piece has to fit perfectly with the others. Traditional solvers run on CPUs and struggle with particularly large or complex problems: those with many variables, vast numbers of possible combinations, or intricate relationships between them. The bottleneck is that the solver must prune domains repeatedly, a step called constraint propagation, at every point of the search. When that work is carried out one step at a time, often on a single CPU core, it dominates the running time.
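To make the vocabulary concrete, here is a minimal sketch of a constraint problem solved by plain backtracking search. The function names (`consistent`, `backtrack`) and the toy problem (three variables with x < y < z) are hypothetical illustrations, not code from the research. The sketch only checks a constraint once all of its variables are assigned. Real solvers such as Gecode also prune domains through propagation between search steps.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A constraint pairs a scope (the variables it mentions) with a predicate
# over those variables' values.
Constraint = Tuple[Tuple[str, ...], Callable[..., bool]]


def consistent(assignment: Dict[str, int], constraints: List[Constraint]) -> bool:
    """Check every constraint whose variables have all been assigned."""
    for scope, predicate in constraints:
        if all(var in assignment for var in scope):
            if not predicate(*(assignment[var] for var in scope)):
                return False
    return True


def backtrack(domains: Dict[str, List[int]],
              constraints: List[Constraint],
              assignment: Optional[Dict[str, int]] = None) -> Optional[Dict[str, int]]:
    """Depth-first search: assign one variable at a time, undo on failure."""
    if assignment is None:
        assignment = {}
    if len(assignment) == len(domains):
        return dict(assignment)  # every variable has a consistent value
    var = next(v for v in domains if v not in assignment)
    for value in domains[var]:
        assignment[var] = value
        if consistent(assignment, constraints):
            result = backtrack(domains, constraints, assignment)
            if result is not None:
                return result
        del assignment[var]  # backtrack: try the next value
    return None  # no value works for var under the current assignment


domains = {"x": [1, 2, 3], "y": [1, 2, 3], "z": [1, 2, 3]}
constraints = [(("x", "y"), lambda a, b: a < b),
               (("y", "z"), lambda a, b: a < b)]
print(backtrack(domains, constraints))  # {'x': 1, 'y': 2, 'z': 3}
```

Even on this tiny problem the search tries and undoes several values before it succeeds. Each extra variable multiplies the number of combinations it may have to explore.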

One critical type of constraint is the “table constraint.” This simply lists all permissible combinations of variable values—a kind of exhaustive inventory of the valid options. While flexible, table constraints can become computationally unwieldy when the number of permitted combinations explodes. This is where the researchers’ work shines.
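A table constraint can be sketched as a list of allowed tuples. The hypothetical `filter_table` function below shows the basic pruning rule, assuming the standard notion of support. Under that rule, a value stays in its domain only if it appears in at least one tuple whose values are all still possible. This naive version rescans the whole table on every call, and that cost becomes unwieldy as tables grow.

```python
from typing import Dict, List, Set, Tuple


def filter_table(scope: Tuple[str, ...],
                 table: List[Tuple[int, ...]],
                 domains: Dict[str, Set[int]]) -> Dict[str, Set[int]]:
    """Prune domains so every remaining value has a supporting valid tuple."""
    # A tuple is still valid if each of its values is still in its domain.
    valid = [row for row in table
             if all(row[i] in domains[var] for i, var in enumerate(scope))]
    pruned = {var: set(vals) for var, vals in domains.items()}
    for i, var in enumerate(scope):
        # Keep only values that occur in at least one valid tuple.
        pruned[var] = {row[i] for row in valid}
    return pruned


# Allowed (x, y) pairs; x has already lost the value 3.
table = [(1, 2), (2, 3), (3, 1)]
domains = {"x": {1, 2}, "y": {1, 2, 3}}
pruned = filter_table(("x", "y"), table, domains)
print(sorted(pruned["x"]), sorted(pruned["y"]))  # [1, 2] [2, 3]
```

Here y = 1 is removed because its only supporting tuple, (3, 1), needs x = 3, which is no longer possible.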

The Compact-Table Algorithm: A Clever Optimization

The researchers focus on a highly efficient algorithm for handling table constraints called “Compact-Table” (CT). CT represents a table with compact bit-level data structures. A Boolean array (a bit set) marks which rows of the table are still valid, and a Boolean matrix records, for every variable value, which rows support it. Because these structures are manipulated a whole machine word at a time with bitwise operations, CT can prune the allowed combinations very efficiently. In its serial form it is a considerable improvement over earlier methods, but it still struggles with massive tables containing millions of permitted combinations.
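The following Python sketch illustrates the bit-set idea behind CT, using Python integers as arbitrary-length bit sets. It is a simplified illustration under our own assumptions, not the authors' implementation. The class and method names are hypothetical. Real CT adds details omitted here, such as sparse bit sets, saving and restoring state on backtracking, and incremental updates. The two methods mirror the table update and domain filtering phases.

```python
from typing import Dict, List, Set, Tuple


class CompactTableSketch:
    """Simplified, non-backtrackable illustration of CT's bit-set idea."""

    def __init__(self, scope: Tuple[str, ...], table: List[Tuple[int, ...]]):
        self.scope = scope
        # Bit r of `current` is 1 while row r of the table is still valid.
        self.current = (1 << len(table)) - 1
        # supports[(var, val)] has bit r set iff row r assigns val to var.
        self.supports: Dict[Tuple[str, int], int] = {}
        for r, row in enumerate(table):
            for var, val in zip(scope, row):
                key = (var, val)
                self.supports[key] = self.supports.get(key, 0) | (1 << r)

    def update_table(self, domains: Dict[str, Set[int]]) -> None:
        """Update phase: invalidate rows that use a removed value."""
        for var in self.scope:
            mask = 0
            for val in domains[var]:
                mask |= self.supports.get((var, val), 0)
            self.current &= mask

    def filter_domains(self, domains: Dict[str, Set[int]]) -> Dict[str, Set[int]]:
        """Filtering phase: drop values with no support among valid rows."""
        pruned = {var: set(vals) for var, vals in domains.items()}
        for var in self.scope:
            pruned[var] = {val for val in domains[var]
                           if self.supports.get((var, val), 0) & self.current}
        return pruned

    def propagate(self, domains: Dict[str, Set[int]]) -> Dict[str, Set[int]]:
        self.update_table(domains)
        return self.filter_domains(domains)


ct = CompactTableSketch(("x", "y"), [(1, 2), (2, 3), (3, 1)])
pruned = ct.propagate({"x": {1, 2}, "y": {1, 2, 3}})
print(sorted(pruned["x"]), sorted(pruned["y"]))  # [1, 2] [2, 3]
print(bin(ct.current))  # 0b11: rows 0 and 1 are still valid
```

Each step is a handful of bitwise AND and OR operations over whole bit sets rather than a loop over tuples. This is what keeps the representation both compact and fast.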

The GPU Advantage: Parallel Power

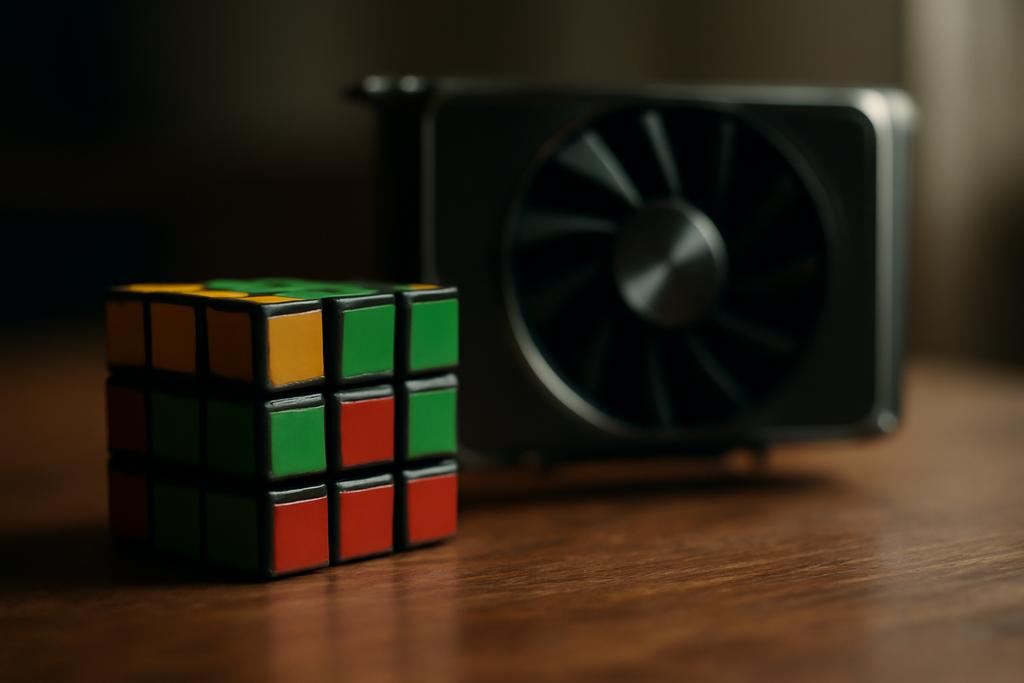

Here’s the key innovation: the researchers adapted the CT algorithm to leverage the parallel processing capabilities of modern Graphics Processing Units (GPUs). GPUs are designed for massive parallelism, excelling at performing many calculations simultaneously. Unlike a CPU, which has a relatively small number of powerful cores, a GPU has thousands of smaller, more energy-efficient cores. This makes GPUs well suited to workloads made up of many independent computations, such as the bitwise operations CT performs on its large Boolean arrays. A GPU can carry out these operations far faster than a CPU working through them one after another.
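Why does this kind of work map well onto GPU cores? In a bit-set representation like CT's, an AND between two bit sets can be computed one 64-bit word at a time. Each output word depends only on the matching input words. The plain-Python sketch below makes that independence explicit; its function names are hypothetical. `update_word` is the work a single GPU thread might do, and the loop in `update_all` stands in for launching one thread per word. The one-thread-per-word mapping is our illustrative assumption, not a detail taken from the paper.

```python
from typing import List

WORD_BITS = 64
WORD_MASK = (1 << WORD_BITS) - 1


def to_words(bits: int, n_words: int) -> List[int]:
    """Split an integer bit set into fixed-width 64-bit words (low word first)."""
    return [(bits >> (WORD_BITS * i)) & WORD_MASK for i in range(n_words)]


def update_word(i: int, current: List[int], mask: List[int]) -> int:
    """Work of one hypothetical GPU thread: it touches only word i."""
    return current[i] & mask[i]


def update_all(current: List[int], mask: List[int]) -> List[int]:
    # Stand-in for a kernel launch: on a GPU each index i would run as its own
    # thread; here an ordinary loop plays that role.
    return [update_word(i, current, mask) for i in range(len(current))]


n_rows = 130
n_words = (n_rows + WORD_BITS - 1) // WORD_BITS        # 3 words
current = (1 << n_rows) - 1                             # all rows valid
even_rows = sum(1 << r for r in range(0, n_rows, 2))    # example mask: even rows survive
result = update_all(to_words(current, n_words), to_words(even_rows, n_words))
print(result == to_words(current & even_rows, n_words))  # True
```

Because no word reads another word's result, the words can be processed in any order or all at once. This is the property that lets the work spread across many cores.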

The researchers developed three distinct GPU-accelerated versions of the CT algorithm. The first, CTuCU, offloads only the table update process to the GPU. The second, CTfCU, handles only the domain filtering on the GPU. Finally, CTufCU carries out both the update and filtering stages on the GPU, maximizing the parallel potential.

Benchmarks and Results: A Quantum Leap in Performance

The researchers tested their algorithms on several benchmark problem sets, and the results were striking. The GPU-accelerated versions were significantly faster than the serial CT algorithm. In many cases they also outperformed a state-of-the-art constraint solver (Gecode) running on a single CPU core. The CTufCU variant, which runs both the update and filtering stages on the GPU, achieved the largest gains. In some cases its speedups over the original serial version reached several orders of magnitude.

While the team found that for smaller problems, the overhead of transferring data between the CPU and GPU could sometimes negate the advantages of parallel processing, the benefits became overwhelmingly clear with larger, more complex problems. The speed gains were particularly impressive when problems required extensive backtracking, a common occurrence in complex constraint-solving scenarios.
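A simple idealized cost model shows why transfer overhead matters most for small problems; it is our own illustration rather than one taken from the paper. Let $T_{\text{CPU}}$ be the serial propagation time and $T_{\text{xfer}}$ the CPU-GPU transfer time. Let $T_{\text{GPU}}$ be the GPU computation time, assumed to shrink with an effective parallelism $p$. Then the speedup $S$ and the break-even condition are:

```latex
\begin{aligned}
S &= \frac{T_{\text{CPU}}}{T_{\text{xfer}} + T_{\text{GPU}}},
  \qquad T_{\text{GPU}} \approx \frac{T_{\text{CPU}}}{p} \\
S > 1 &\iff T_{\text{xfer}} < T_{\text{CPU}}\left(1 - \frac{1}{p}\right)
\end{aligned}
```

When the table is small, $T_{\text{CPU}}$ is small. A transfer cost with a large fixed component, such as kernel-launch and synchronization latency, can then exceed it, giving $S < 1$. As tables grow, $T_{\text{CPU}}$ grows with them and the right-hand side approaches $T_{\text{CPU}}$, so offloading pays off. Each order of magnitude is a factor of 10, so a speedup of three orders of magnitude means $S = 10^3$.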

Implications: A Broader Impact

This research has far-reaching implications. By dramatically speeding up constraint solving, this approach makes it practical to tackle problems that were previously too slow to solve. Areas like logistics, scheduling, and resource allocation could see major improvements in efficiency. The enhanced speed could also benefit AI applications that rely heavily on constraint satisfaction. The findings suggest that using GPUs in constraint programming is not just a niche optimization but a potential paradigm shift, one that could reshape how we approach problem-solving in numerous fields.

The authors emphasize that even though the GPU they used for testing was a high-end model, the observed speed improvements suggest that similar results should be achievable on less powerful GPUs. That would make the technology accessible beyond the realm of high-performance computing. This research is a significant step toward solving large, complex constraint problems efficiently, and it could open up applications that were previously out of reach.