Why Do Some Images Always Win in AI Searches?

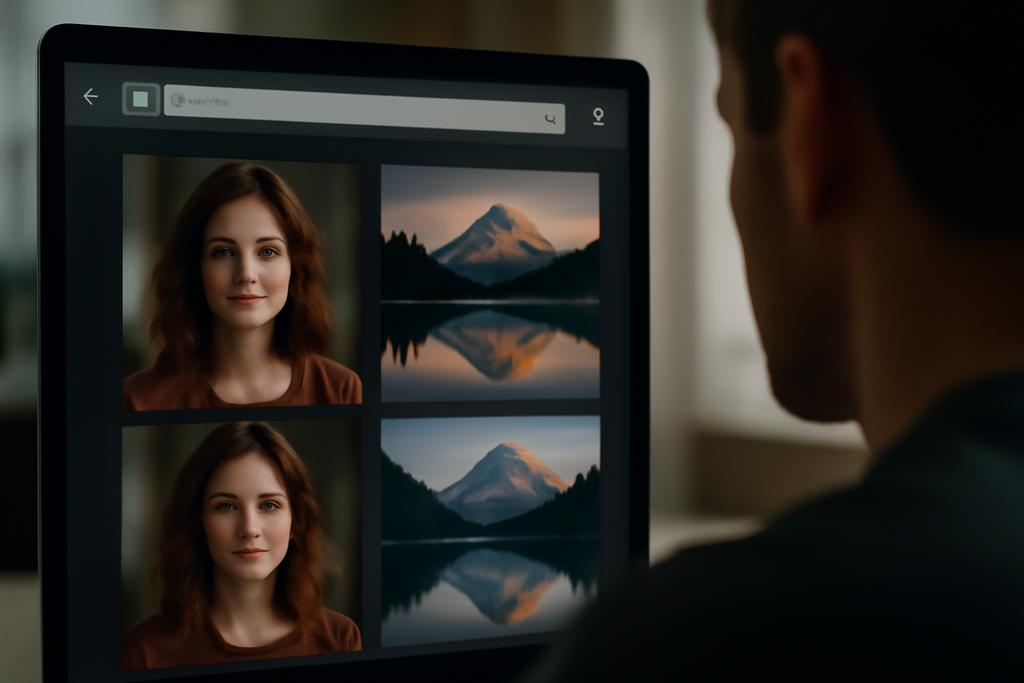

Picture this: you type a phrase into a search engine that’s supposed to find images, videos, or sounds matching your words. Instead of a diverse set of results, you keep seeing the same handful of images popping up again and again. This isn’t just a quirk of your search. It’s a symptom of the hubness problem, a deep-rooted flaw in how AI systems compare different types of data.

Researchers at Zhejiang University, led by Zhengxin Pan and Haishuai Wang, have taken a fresh look at this issue in cross-modal retrieval systems—those that match data across different formats, like text to image or audio to video. Their work, presented at the 2025 ACM International Conference on Multimedia, dives into why some items become “hubs” that dominate search results and how to fix it.

The Hubness Problem: When Popularity Skews AI’s Judgment

In the high-dimensional spaces where AI models represent data, some points become hubs: items that show up as the nearest neighbor of a disproportionately large number of queries. Imagine a party where a few people are so popular everyone wants to talk to them, while others are ignored. This imbalance hurts retrieval accuracy, because the same hubs crowd the top of many result lists and push out matches that are more relevant but less “popular.”
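Hubness can be measured directly. A standard diagnostic in the hubness literature counts, for each target, how many queries place it among their k nearest neighbors (often written N_k), then checks how skewed those counts are. The Python sketch below is illustrative only: the function names and the random toy embeddings are hypothetical, not taken from the paper.

```python
import numpy as np

def k_occurrence(sim: np.ndarray, k: int = 1) -> np.ndarray:
    """Return N_k: how many queries place each target in their top-k.

    sim has shape (num_queries, num_targets); larger values mean more similar.
    """
    # argpartition moves the indices of the k largest similarities into the first k slots.
    topk = np.argpartition(-sim, kth=k - 1, axis=1)[:, :k]
    return np.bincount(topk.ravel(), minlength=sim.shape[1])

def skewness(counts: np.ndarray) -> float:
    """Sample skewness of N_k; a large positive value (long right tail) signals hubs."""
    x = counts.astype(float)
    std = x.std()
    if std == 0:
        return 0.0  # every target is retrieved equally often: no hubs at all
    return float(((x - x.mean()) ** 3).mean() / std ** 3)

# Hypothetical toy data: random unit-length embeddings in 256 dimensions.
rng = np.random.default_rng(0)
queries = rng.normal(size=(1000, 256))
targets = rng.normal(size=(1000, 256))
queries /= np.linalg.norm(queries, axis=1, keepdims=True)
targets /= np.linalg.norm(targets, axis=1, keepdims=True)
n10 = k_occurrence(queries @ targets.T, k=10)
print("max N_10:", n10.max(), "skewness:", round(skewness(n10), 2))
```

The counts always sum to (number of queries) × k, so a few very large N_k values necessarily mean that many other targets are rarely or never retrieved.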

This problem is especially tricky in cross-modal retrieval, where the AI must bridge gaps between different types of data—like matching a sentence to a video clip. Despite advances in embedding techniques that map different data types into a shared space, hubness remains a stubborn obstacle.

Balancing Act: From Inverted Softmax to Sinkhorn Normalization

One existing method to tackle hubness is the Inverted Softmax (IS), which adjusts similarity scores to reduce the dominance of hubs by balancing the probability that each target (like an image or video) is retrieved. However, IS only balances the targets, ignoring the distribution of queries (the search inputs), which limits its effectiveness.
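To make the idea concrete, here is a minimal Python sketch of inverted-softmax scoring. It assumes a query bank is available and that a temperature `beta` (a hypothetical value here) sharpens the similarities. Each target's score is divided by the sum of its exponentiated similarities to the bank queries, so a target that attracts every query is penalized. Published variants differ in details, such as whether the test query itself joins the bank, so treat this as an illustration rather than the paper's code.

```python
import numpy as np

def logsumexp(a: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log(sum(exp(a))) along one axis."""
    m = a.max(axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.exp(a - m).sum(axis=axis))

def inverted_softmax(sim_test: np.ndarray, sim_bank: np.ndarray, beta: float = 20.0) -> np.ndarray:
    """Log inverted-softmax scores (ranking is the same as for the probabilities).

    sim_test: (num_test_queries, num_targets) similarities to be ranked.
    sim_bank: (num_bank_queries, num_targets) similarities of a query bank to the same targets.
    """
    # One normalizer per target (column): how strongly the whole bank is drawn to it.
    log_norm = logsumexp(beta * sim_bank, axis=0)
    # Dividing by the normalizer becomes a subtraction in log space, which avoids overflow.
    return beta * sim_test - log_norm[None, :]

# Hypothetical toy case: target 0 attracts every bank query, i.e. it behaves like a hub.
sim_bank = np.array([[0.9, 0.1], [0.9, 0.1], [0.9, 0.1]])
sim_test = np.array([[0.6, 0.5]])  # raw similarity slightly favors target 0
print(sim_test.argmax(axis=1), inverted_softmax(sim_test, sim_bank).argmax(axis=1))  # prints: [0] [1]
```

Because target 0 is close to every bank query, its normalizer is large, and the rescaled scores hand the test query to target 1 instead. Note that only the target side is normalized, which is exactly the limitation the next method addresses.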

Pan and colleagues propose a more holistic approach called Sinkhorn Normalization (SN). This method simultaneously balances the probabilities of both queries and targets, ensuring a fairer, more uniform matching process. They achieve this by framing the problem as an optimal transport task, a mathematical framework for finding the cheapest way to move probability mass from one distribution onto another, and solving it with the Sinkhorn-Knopp algorithm.
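A bare-bones version of Sinkhorn-Knopp balancing fits in a few lines of Python. This sketch assumes uniform marginals (every query and every target carries equal mass), a hypothetical temperature `beta`, and a fixed iteration count, and it works in log space because `exp(beta * sim)` can overflow or underflow for large `beta`. It is a generic entropic optimal-transport routine, not the authors' released implementation.

```python
import numpy as np

def logsumexp(a: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log(sum(exp(a))) along one axis."""
    m = a.max(axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.exp(a - m).sum(axis=axis))

def sinkhorn_normalize(sim: np.ndarray, beta: float = 10.0, n_iters: int = 200) -> np.ndarray:
    """Return the log of a transport plan between queries (rows) and targets (columns).

    Sinkhorn-Knopp alternately rescales the rows and columns of K = exp(beta * sim)
    until every query sends, and every target receives, the same share of mass.
    """
    n_q, n_t = sim.shape
    log_k = beta * sim
    log_r = np.full(n_q, -np.log(n_q))  # uniform query marginal: each row sums to 1/n_q
    log_c = np.full(n_t, -np.log(n_t))  # uniform target marginal: each column sums to 1/n_t
    log_u = np.zeros(n_q)
    log_v = np.zeros(n_t)
    for _ in range(n_iters):
        log_u = log_r - logsumexp(log_k + log_v[None, :], axis=1)  # match row sums
        log_v = log_c - logsumexp(log_k + log_u[:, None], axis=0)  # match column sums
    # Plan P_ij = u_i * K_ij * v_j, returned in log space; rank each row to retrieve.
    return log_u[:, None] + log_k + log_v[None, :]

# Hypothetical toy case: target 0 is the raw nearest neighbor of both queries.
sim = np.array([[0.6, 0.5], [0.9, 0.1]])
print(sim.argmax(axis=1), sinkhorn_normalize(sim).argmax(axis=1))  # prints: [0 0] [1 0]
```

Once each target must receive the same share of mass, the ambiguous first query (0.6 vs. 0.5) is reassigned to target 1, while the second query keeps its clear match. Unlike the inverted softmax, both the rows and the columns are constrained.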

Think of it as organizing a dance where every dancer (query) and every partner (target) gets an equal chance to pair up, rather than letting a few popular dancers monopolize the floor.

When You Don’t Know the Queries: The Dual Bank Solution

In real-world applications, the exact distribution of queries is often unknown at search time. To estimate query distributions, systems use a query bank—a collection of example queries. But if this bank doesn’t closely match the actual queries, the hubness correction falters.

To bridge this gap, the researchers introduce Dual Bank Sinkhorn Normalization (DBSN). Instead of just relying on a query bank, DBSN also incorporates a target bank—an auxiliary set of targets aligned with the query bank. This dual-bank approach narrows the mismatch between queries and targets, improving the accuracy of hubness estimation and retrieval results.
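How the two banks combine inside DBSN is not detailed here, so the Python sketch below is only one plausible reading, and every name in it is hypothetical. It stacks the bank queries under the test queries, stacks the bank targets (assumed to be the paired partners of the bank queries) beside the gallery, runs the same log-space Sinkhorn balancing on the enlarged matrix, and keeps only the block of test queries against gallery targets. The intuition it illustrates is that bank queries now have their own matching targets to absorb their mass, instead of being forced onto the gallery and distorting its balance.

```python
import numpy as np

def logsumexp(a: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log(sum(exp(a))) along one axis."""
    m = a.max(axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.exp(a - m).sum(axis=axis))

def sinkhorn_log_plan(sim: np.ndarray, beta: float, n_iters: int) -> np.ndarray:
    """Log transport plan with uniform row and column marginals (log-space Sinkhorn-Knopp)."""
    n_q, n_t = sim.shape
    log_k = beta * sim
    log_u, log_v = np.zeros(n_q), np.zeros(n_t)
    for _ in range(n_iters):
        log_u = -np.log(n_q) - logsumexp(log_k + log_v[None, :], axis=1)  # match row sums
        log_v = -np.log(n_t) - logsumexp(log_k + log_u[:, None], axis=0)  # match column sums
    return log_u[:, None] + log_k + log_v[None, :]

def dual_bank_sinkhorn(q: np.ndarray, t: np.ndarray, q_bank: np.ndarray, t_bank: np.ndarray,
                       beta: float = 10.0, n_iters: int = 200) -> np.ndarray:
    """HYPOTHETICAL dual-bank scoring of test queries q against gallery targets t.

    All inputs are L2-normalized embeddings stored as rows. t_bank[i] is assumed to be
    the paired target of q_bank[i], so each bank query has its own partner to match.
    """
    rows = np.vstack([q, q_bank])  # test queries followed by bank queries
    cols = np.vstack([t, t_bank])  # gallery targets followed by bank targets
    log_plan = sinkhorn_log_plan(rows @ cols.T, beta, n_iters)
    # Only the test-query x gallery-target block is used for ranking.
    return log_plan[: len(q), : len(t)]

# Hypothetical 2-D toy embeddings.
q = np.array([[1.0, 0.0]])
t = np.array([[1.0, 0.0], [0.0, 1.0]])
q_bank = np.array([[0.0, 1.0]])
t_bank = np.array([[0.0, 1.0]])
scores = dual_bank_sinkhorn(q, t, q_bank, t_bank, beta=5.0)
print(scores.argmax(axis=1))
```

Here the bank query and its partner pull on the second gallery target, yet the test query still ends up with its obvious match, target 0. A real system would use far larger banks drawn from data resembling the expected queries.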

Real Gains Across Images, Videos, and Audio

The team tested SN and DBSN on a variety of datasets spanning text-to-image, text-to-video, and text-to-audio retrieval tasks. The results were clear: SN consistently outperformed IS by balancing both sides of the retrieval equation, and DBSN further improved performance when query distributions were unknown.

For instance, on popular benchmarks like MSR-VTT (video-text) and Flickr30k (image-text), SN boosted recall rates by around 5%, a significant leap in this competitive field. Visualizations showed that SN and DBSN corrected many retrieval errors caused by hubness, returning results that better matched the query’s meaning.
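Recall in retrieval benchmarks is usually reported as Recall@K: the fraction of queries whose correct match appears among the top K results. A minimal Python version, assuming exactly one ground-truth target per query (the function and variable names are illustrative):

```python
import numpy as np

def recall_at_k(scores: np.ndarray, gt: np.ndarray, k: int) -> float:
    """Fraction of queries whose ground-truth target ranks within the top k.

    scores: (num_queries, num_targets), higher means a better match.
    gt[i]: index of the single correct target for query i (an assumption).
    """
    gt_scores = scores[np.arange(len(gt)), gt]
    # Rank = number of targets scored strictly higher than the correct one (0 = top hit).
    ranks = (scores > gt_scores[:, None]).sum(axis=1)
    return float((ranks < k).mean())

scores = np.array([[0.9, 0.1, 0.2], [0.8, 0.3, 0.1], [0.7, 0.6, 0.5]])
gt = np.array([0, 1, 2])
print([recall_at_k(scores, gt, k) for k in (1, 2, 3)])  # values 1/3, 2/3 and 1
```

Any of the rescaled score matrices from a hubness-reduction step can be passed in as `scores`, which is how a before-and-after comparison would be made.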

Why This Matters Beyond Search Engines

Cross-modal retrieval powers many AI applications—from helping visually impaired users find images from descriptions, to enabling smarter video search, to organizing massive multimedia archives. Hubness reduction means these systems can provide more diverse, relevant, and fair results.

Moreover, the probabilistic balancing framework and the dual-bank strategy offer a new lens for understanding and improving AI systems that juggle multiple data types. It’s a reminder that fairness and balance aren’t just social ideals—they’re mathematical necessities for better AI.

Looking Ahead: A More Balanced AI Landscape

Pan, Wang, and their collaborators have open-sourced their code, inviting the community to build on their work. As AI continues to weave together text, images, sound, and video, methods like Sinkhorn Normalization will be crucial to ensure no single “hub” dominates the conversation.

In a world flooded with data, balancing the scales might just be the key to smarter, more human-centered AI.