Reading Between the Brain Scans

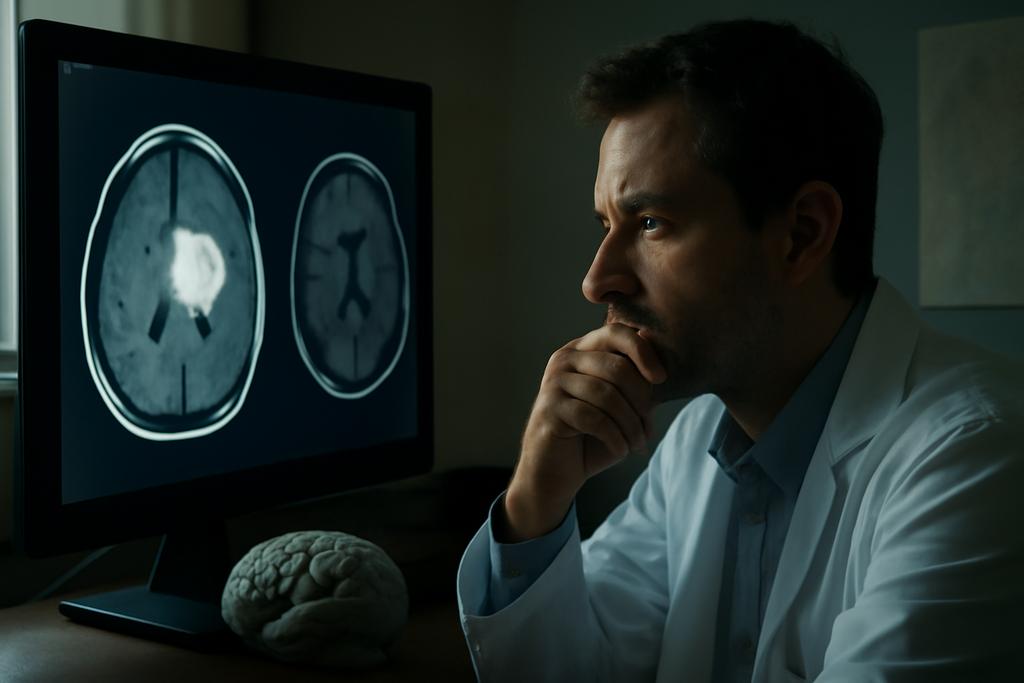

Glioblastoma is a ruthless adversary. This aggressive brain tumor often leaves patients with a grim prognosis—typically just 10 to 15 months of survival after diagnosis. For doctors and patients alike, knowing how long someone might live is more than a number; it shapes treatment choices, hope, and planning. But predicting survival has been a stubborn challenge, tangled in the complexity of brain scans and the subtle signatures tumors leave behind.

Enter Yin Lin and colleagues, a team of researchers from Politecnico di Milano and Fondazione IRCCS Istituto Neurologico Carlo Besta in Italy, who dared to rethink how artificial intelligence (AI) could peer into magnetic resonance imaging (MRI) scans to forecast survival, without the usual heavy lifting of tumor segmentation.

The Old Way: Mapping Tumors Like Treasure Hunts

Traditionally, AI models tasked with predicting glioblastoma survival rely on a two-step process. First, they painstakingly segment the tumor on MRI scans—essentially drawing a detailed map of the tumor’s boundaries. Then, they extract features from this map to train models that predict survival. This segmentation step is laborious, time-consuming, and often a bottleneck because it requires expert annotation. It’s like needing a detailed treasure map before you can even start guessing where the treasure might be buried.

Another approach borrows AI models trained on everyday photos (cats, cars, landscapes) and tries to apply them to MRI scans. But brain images are a different beast. In a photo, the red, green, and blue channels all describe the same scene at the same locations. Successive MRI slices, by contrast, are different layers of a 3D volume, each showing different anatomy. This mismatch makes those pre-trained models less effective.

Vision Transformers: A New Lens on Medical Images

The breakthrough comes from adapting a cutting-edge AI architecture known as Vision Transformers (ViTs). Originally designed for natural image recognition, ViTs excel at capturing long-range relationships within images by breaking them into patches and analyzing how these pieces relate to each other. Think of it as understanding a novel not just by individual sentences but by how themes weave across chapters.

Yin Lin’s team modified ViTs to handle 3D MRI data directly, slicing the brain scans into volumetric patches and feeding them into the model without any prior tumor segmentation. This approach simplifies the workflow dramatically—no more drawing tumor boundaries, no more complex feature engineering. The AI learns directly from the raw images, spotting patterns invisible to the naked eye.
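To make the idea of volumetric patches concrete, here is a minimal sketch in Python with NumPy. It is not the team's code: the function name `volume_to_patches`, the 8×8×8 toy volume, and the patch size of 4 are all assumptions chosen for illustration. It only shows how a 3D array can be cut into non-overlapping cubes, each flattened into one vector that a transformer could treat as a token.

```python
import numpy as np

def volume_to_patches(volume: np.ndarray, patch: int) -> np.ndarray:
    """Split a (D, H, W) volume into flattened, non-overlapping cubic patches."""
    d, h, w = volume.shape
    if d % patch or h % patch or w % patch:
        raise ValueError("each dimension must be divisible by the patch size")
    # Split every axis into (number of patches, patch size).
    blocks = volume.reshape(d // patch, patch, h // patch, patch, w // patch, patch)
    # Move the three patch-index axes to the front, patch contents to the back.
    blocks = blocks.transpose(0, 2, 4, 1, 3, 5)
    # One row per patch; each row is a flattened cube of patch**3 voxels.
    return blocks.reshape(-1, patch ** 3)

# Toy volume standing in for an MRI scan (sizes are illustrative only).
volume = np.arange(8 * 8 * 8, dtype=np.float32).reshape(8, 8, 8)
tokens = volume_to_patches(volume, patch=4)
print(tokens.shape)  # (8, 64): 8 cubes of 4*4*4 voxels each
```

In a real Vision Transformer each of these rows would then pass through a learned projection and receive a position embedding; those steps are left out here.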

Less Detail, More Insight

One might worry that reducing the resolution of MRI images to ease computational demands would sacrifice critical information. But the researchers cleverly downsampled the images from their original high resolution to a smaller size, preserving essential structural details while making the data manageable for the AI. This is akin to reading a book summary that keeps the plot intact without drowning in every minor detail.
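The article does not say which downsampling method the researchers used. As one simple possibility, the sketch below shrinks a 3D volume by averaging small blocks of voxels (block averaging). The function name `downsample_mean`, the toy volume, and the factor of 2 are assumptions for illustration, not details from the study.

```python
import numpy as np

def downsample_mean(volume: np.ndarray, factor: int) -> np.ndarray:
    """Shrink a (D, H, W) volume by averaging factor x factor x factor blocks."""
    d, h, w = volume.shape
    d2, h2, w2 = d // factor, h // factor, w // factor
    # Crop any remainder so each axis divides evenly by the factor.
    cropped = volume[: d2 * factor, : h2 * factor, : w2 * factor]
    # Group voxels into blocks, then average within each block.
    blocks = cropped.reshape(d2, factor, h2, factor, w2, factor)
    return blocks.mean(axis=(1, 3, 5))

# Toy volume standing in for a scan (sizes are illustrative only).
scan = np.arange(6 * 6 * 6, dtype=np.float64).reshape(6, 6, 6)
small = downsample_mean(scan, factor=2)
print(scan.shape, "->", small.shape)  # (6, 6, 6) -> (3, 3, 3)
```

Averaging keeps coarse structure, such as the overall shape and brightness of a region, while discarding voxel-level detail, which is the trade-off described above.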

Surprisingly, this resolution reduction did not degrade the model’s performance. The Vision Transformer-based model, dubbed OS_ViT, achieved an accuracy of 62.5% in classifying patients into short-, medium-, or long-term survival groups, comparable to the best existing methods that rely on segmentation.

Why This Matters

OS_ViT’s segmentation-free approach is a game-changer for clinical workflows. It means radiologists and oncologists can potentially get survival predictions directly from standard MRI scans without extra manual steps. This could speed up decision-making and personalize treatment plans more efficiently.

Moreover, the model’s balanced accuracy across different survival groups means it doesn’t just excel at predicting long-term survivors but also identifies patients likely to survive only a short time, who might need urgent, aggressive care.
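Why does balance across groups matter? A model can score well on plain accuracy simply by favoring the largest group. The sketch below uses made-up labels (not data from the study) and hypothetical helper names to compare plain accuracy with balanced accuracy, taken here to mean the average of the per-group hit rates.

```python
from collections import Counter

def per_class_recall(y_true, y_pred):
    """Fraction of each true class that was predicted correctly."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: hits[label] / totals[label] for label in totals}

def balanced_accuracy(y_true, y_pred):
    """Mean of the per-class recalls, so every class counts equally."""
    recalls = per_class_recall(y_true, y_pred)
    return sum(recalls.values()) / len(recalls)

# Invented toy labels: 2 short, 2 medium, and 6 long-term survivors.
y_true = ["short"] * 2 + ["medium"] * 2 + ["long"] * 6
# A model that leans heavily toward predicting "long".
y_pred = ["long", "long", "medium", "long"] + ["long"] * 6

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(accuracy)                           # 0.7
print(balanced_accuracy(y_true, y_pred))  # 0.5
```

In this toy case the model misses every short-term survivor, yet its plain accuracy still looks respectable. Balanced accuracy exposes the gap.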

The Road Ahead

Despite its promise, OS_ViT isn’t perfect. The model was trained and tested on a single dataset (BraTS), so it remains unclear how well it will perform on new, unseen clinical data. Vision Transformers typically crave vast amounts of data to shine, and medical imaging datasets are often limited in size.

Future research will need to validate and refine this approach across multiple hospitals and patient populations. But the foundation is laid: AI can learn to read brain scans like a seasoned clinician, without needing a map of the tumor first.

From Pixels to Prognosis

This study from Politecnico di Milano and Fondazione IRCCS Istituto Neurologico Carlo Besta reminds us that sometimes, less is more. By stripping away the cumbersome step of tumor segmentation and embracing a novel AI architecture, the researchers have opened a new window into glioblastoma prognosis. It’s a step toward AI tools that fit seamlessly into clinical practice—tools that see the forest, not just the trees, in the complex landscape of brain tumors.

In the battle against glioblastoma, every insight counts. And with Vision Transformers, the future of survival prediction might just be a little clearer.