In the era of data deluges and thousands, even millions, of statistical tests, a single line can decide what counts as a discovery and what stays in the quiet corners of the data. The Benjamini–Hochberg procedure, BH, is a trusted workhorse for controlling the false discovery rate, the expected proportion of false alarms among all claims of a finding. It’s fast, it’s simple, and it’s become a default in fields from genomics to neuroscience. Yet BH makes a bold assumption: it acts as if all the p-values could be behaving like independent coins, or at least like a well-behaved crowd where dependencies don’t derail the overall error rate. Real-world data rarely cooperate that nicely. In genetics, for example, nearby genetic variants tend to move together through inheritance; in brain imaging, nearby voxels and timepoints show tangled correlations. What then?

A team led by Drew T. Nguyen and William Fithian, at the University of California, Berkeley, proposes a practical reply to that knot: what if we map the dependencies among p-values not as a single, unwieldy mass but as a graph—the nodes are tests, and edges tell us which tests are directly dependent on each other? If we know, or can reasonably assume, independence beyond a test’s neighborhood, we can tailor false discovery control to this graph. The result isn’t a new universal rule but a family of graph-aware procedures that retain the power of BH when dependencies are mild, and gracefully degrade toward more conservative approaches when dependencies get denser. It’s a way to acknowledge the data’s social network without overfitting or overcorrecting.

The core insight is to treat dependence as a local phenomenon and to design thresholds that adapt to the local structure around each test. The authors’ flagship method, Independent Set BH, or IndBH, uses the graph to identify independent groups of tests, within which BH can operate more freely. The philosophy is simple but powerful: your p-values aren’t all equally tangled; some clusters can be treated as almost independent, while others demand caution. This reframes “how dependent is this data?” from a blunt global question into a precise, graph-guided reading of the data’s neighborhoods. Nguyen and Fithian develop both the theory and practical algorithms that work on real-scale problems, including a million-hypothesis simulation and a real genome-wide association study dataset.

What a dependency graph means for p-values

The authors formalize a dependency graph D that links p-values p1, p2, …, pm. A node i in this graph represents the i-th hypothesis, and its neighborhood Ni contains those other hypotheses whose p-values are not assumed to be independent of pi. The key twist is that dependence is only assumed within neighborhoods; tests that are not connected by edges are treated as independent. This is a practical middle ground: we don’t pretend we know every correlation in the data, but we do leverage the knowledge that some tests are only loosely coupled with others. This local view lets us prove finite-sample FDR control under what they call a dependency-graph model.
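As a rough illustration of the neighborhood idea, here is a minimal Python sketch that stores a dependency graph as a mapping from each test to the set of other tests it shares an edge with. The function names and the toy edge list are hypothetical, chosen only for this example; they are not taken from the paper.

```python
def build_neighborhoods(m, edges):
    """Return {i: set of other tests adjacent to i} for tests 0..m-1."""
    neighborhoods = {i: set() for i in range(m)}
    for i, j in edges:
        if i == j:
            continue  # skip self-loops; N_i here holds only *other* tests
        neighborhoods[i].add(j)
        neighborhoods[j].add(i)
    return neighborhoods


def treated_as_independent(neighborhoods, i, j):
    """True if i and j are distinct tests with no edge between them."""
    return i != j and j not in neighborhoods[i]


# Hypothetical toy graph: tests 0-1-2 form a chain, tests 3 and 4 are isolated.
edges = [(0, 1), (1, 2)]
N = build_neighborhoods(5, edges)

print(sorted(N[1]))                      # [0, 2]
print(treated_as_independent(N, 0, 2))   # True: no direct edge
print(treated_as_independent(N, 0, 1))   # False: joined by an edge
```

An adjacency-set layout like this keeps neighbor lookups cheap, which matters when the number of tests is large and the graph is sparse.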

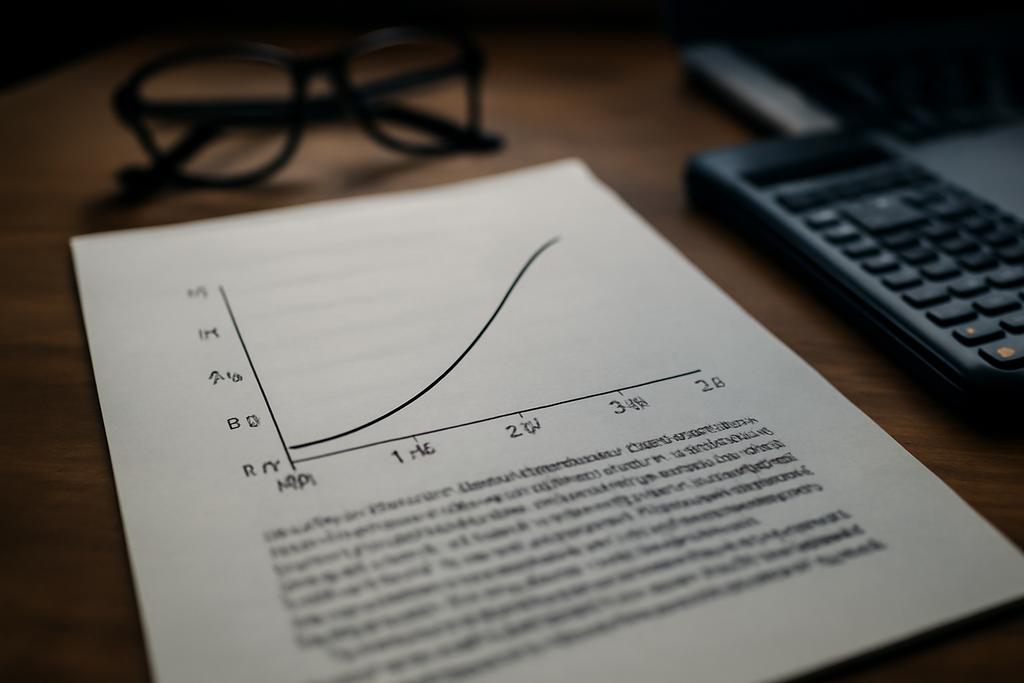

In their setup, each p-value pi is assumed to be superuniform under its true null, a technical way of saying it is stochastically no smaller than a uniform variable: the chance that pi falls at or below any threshold t is at most t. The mathematics is careful, but the message is intuitive: as long as a test’s neighbors don’t conspire against it, we can bound the chance that it slips through when it shouldn’t. The graph isn’t just a diagram; it’s effectively a hyperparameter that captures what you know about the data’s structure. You can imagine the genome arranged into blocks by chromosomes, or time-series measurements partitioned into spatial or temporal neighborhoods. The sparser the graph, the closer you are to BH’s classic, free-wheeling power; the denser the graph, the more cautious you must be, but still with principled guarantees rather than blunt conservatism.

The paper shows two clean, practical results. First, any D-adapted procedure that satisfies a handful of conditions (self-consistency, monotonicity, and a neighbor-blindness property) controls the FDR at level α|H0|/m, where H0 is the set of true null hypotheses and m is the total number of tests; since |H0| ≤ m, that is never worse than the nominal level α. Second, the same control holds when the data meet a weaker assumption called partial positive dependence with respect to the graph. In short: you can keep a rigorous handle on false discoveries even when full independence across all tests is off the table, as long as you’ve got a trustworthy dependency graph to guide you.
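To make the self-consistency condition more concrete, the sketch below implements the standard BH step-up rule and a check of the usual textbook notion of self-consistency: every rejected p-value is at most α times the number of rejections divided by m. This is an assumption about what the condition looks like in its simplest form; the paper's exact conditions may be stated differently, and the function names and toy p-values are hypothetical.

```python
def bh_reject(pvals, alpha):
    """Benjamini-Hochberg step-up: return the set of rejected indices."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    k = 0  # largest rank r such that the r-th smallest p-value <= alpha * r / m
    for rank, i in enumerate(order, start=1):
        if pvals[i] <= alpha * rank / m:
            k = rank
    return set(order[:k])


def is_self_consistent(pvals, rejected, alpha):
    """Check p_i <= alpha * |R| / m for every index i in the rejection set R."""
    m = len(pvals)
    threshold = alpha * len(rejected) / m
    return all(pvals[i] <= threshold for i in rejected)


# Hypothetical toy p-values.
pvals = [0.001, 0.012, 0.02, 0.3, 0.8]
alpha = 0.1

R = bh_reject(pvals, alpha)
print(sorted(R))                                       # [0, 1, 2]
print(is_self_consistent(pvals, R, alpha))             # True
print(is_self_consistent(pvals, {0, 1, 2, 3}, alpha))  # False: 0.3 > 0.08
```

The BH rejection set passes the check, while adding test 3 breaks it, because 0.3 exceeds the threshold 0.1 × 4 / 5 = 0.08.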

IndBH and friends: how they work

The heart of Nguyen and Fithian’s proposal is the Independent Set BH, IndBH. The idea is elegant in its modularity: identify independent sets in the dependency graph and run BH separately on masked versions of the p-values that erase the influence of a test’s neighbors. If a test’s neighbors could have inflated its chance of rejection, masking them prevents that inflation from polluting the threshold that pi must beat. The results are then united across all independent sets. Because the independent sets are, by definition, non-interacting, the union preserves FDR control. When the graph is sparse, those independent sets are large and IndBH behaves much like BH, preserving power. When the graph is denser, the method becomes more conservative, but with a clear, finite-sample guarantee that BH and BY don’t automatically provide in the same setting.
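The graph primitive underneath this description is the independent set: a group of tests with no edges among them. The Python sketch below checks that property and builds one such set greedily by ascending p-value. It is not the authors' IndBH procedure and carries no FDR guarantee by itself; the function names, the greedy rule, and the toy data are assumptions made purely for illustration.

```python
def is_independent_set(neighborhoods, nodes):
    """True if no two tests in `nodes` share an edge."""
    nodes = set(nodes)
    return all(neighborhoods[i].isdisjoint(nodes - {i}) for i in nodes)


def greedy_independent_set(neighborhoods, candidates, pvals):
    """Pick candidates in order of ascending p-value, skipping any test
    adjacent to one already chosen. The result is independent and maximal,
    but not necessarily the largest independent set."""
    chosen, blocked = [], set()
    for i in sorted(candidates, key=lambda i: pvals[i]):
        if i not in blocked:
            chosen.append(i)
            blocked.add(i)
            blocked |= neighborhoods[i]
    return chosen


# Hypothetical toy graph: chain 0-1-2 plus an isolated test 3.
neighborhoods = {0: {1}, 1: {0, 2}, 2: {1}, 3: set()}
pvals = [0.01, 0.002, 0.03, 0.04]

picked = greedy_independent_set(neighborhoods, range(4), pvals)
print(picked)                                         # [1, 3]
print(is_independent_set(neighborhoods, picked))      # True
print(is_independent_set(neighborhoods, [0, 2, 3]))   # True, and larger
print(is_independent_set(neighborhoods, [0, 1]))      # False
```

The toy run shows why the choice of independent set matters: the greedy pass returns two tests, while a set of three exists in the same graph.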

Nguyen and Fithian don’t stop at a single algorithm. They introduce IndBH(k), an iterative refinement that masks neighborhoods over several steps and recomputes thresholds in a way that reduces the so-called self-consistency gap (the slack between what the method promises and what it actually delivers) without sacrificing the FDR guarantee. In practice, a small k, like k=3, already yields noticeable improvements in power compared to a naive graph-blind adaptation, while remaining computationally practical for data sets with hundreds of thousands to a million tests. They even formalize a fixed-point variant, SU_D, which is the most liberal D-adapted procedure one could hope for under this framework, though they stop short of recommending it for routine use because of its worst-case computational complexity.

Two other threads in the paper are worth highlighting. First, they show how to make these procedures fast in practice by trimming the problem to the BH rejection set, because IndBH only needs to consider tests that BH itself would reject. In large-scale problems, the BH rejection set is often a small slice of all the tests, which means the heavy graph computations run on a much smaller subgraph. Second, they single out an especially convenient block-structured case: when the data form blocks that behave like cliques (dense pockets of tests that are dependent within a block but independent across blocks), the IndBH computation can be nearly as fast as BH itself. This is a practical hint that the method is not merely a theoretical curiosity but can be run on real genomic datasets, like the schizophrenia GWAS example they discuss, where LD patterns naturally impose block-like dependence within chromosomes.
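The trimming step can be sketched in a few lines of Python: run ordinary BH first, then keep only the part of the dependency graph that lives on the BH rejection set. The helper names and toy data below are hypothetical, and the sketch covers only the preprocessing, not the graph-aware procedure that would run on the reduced graph afterwards.

```python
def bh_reject(pvals, alpha):
    """Benjamini-Hochberg step-up: return the set of rejected indices."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    k = 0  # largest rank r such that the r-th smallest p-value <= alpha * r / m
    for rank, i in enumerate(order, start=1):
        if pvals[i] <= alpha * rank / m:
            k = rank
    return set(order[:k])


def induced_subgraph(neighborhoods, keep):
    """Restrict the graph to the tests in `keep`, dropping all other nodes and edges."""
    keep = set(keep)
    return {i: neighborhoods[i] & keep for i in keep}


# Hypothetical toy data: six tests with edges 0-1, 1-2, 2-3, 4-5.
pvals = [0.001, 0.004, 0.9, 0.7, 0.006, 0.5]
neighborhoods = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2}, 4: {5}, 5: {4}}

candidates = bh_reject(pvals, alpha=0.1)
small_graph = induced_subgraph(neighborhoods, candidates)

print(sorted(candidates))                                # [0, 1, 4]
print({i: sorted(small_graph[i]) for i in sorted(small_graph)})
# {0: [1], 1: [0], 4: []}
```

In the toy example the graph shrinks from six nodes and four edges to three nodes and one edge, which is the kind of reduction that makes the later graph computations cheaper.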

From theory to the bench: a real-world genomics example

To demonstrate the method’s bite, the authors walk through a genome-wide association study, one of the most demanding testing grounds for false discovery control. They start with p-values from a large schizophrenia GWAS and build a dependency graph based on linkage disequilibrium (LD) on a per-chromosome basis. In effect, SNP p-values on the same chromosome that are in LD are connected, while those on different chromosomes are treated as independent. It’s a clean, realistic graph that captures the essence of the genetic data’s dependence structure without pretending we know every subtle correlation among millions of tests.
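A graph of this kind could be assembled along the following lines. The sketch assumes that pairwise LD is available as r² values and that two SNPs count as "in LD" when r² meets a cutoff; the cutoff of 0.2, the input layout, and the function name are all assumptions for illustration, since the article does not say how the authors thresholded LD.

```python
def ld_dependency_graph(chrom, ld_r2, r2_threshold=0.2):
    """Build {snp: set of neighbors} from pairwise LD.

    chrom        : list with the chromosome label of each SNP
    ld_r2        : dict mapping (i, j) index pairs to an r^2 value
    r2_threshold : hypothetical cutoff above which two SNPs are linked
    """
    neighborhoods = {i: set() for i in range(len(chrom))}
    for (i, j), r2 in ld_r2.items():
        same_chromosome = chrom[i] == chrom[j]
        if i != j and same_chromosome and r2 >= r2_threshold:
            neighborhoods[i].add(j)
            neighborhoods[j].add(i)
    return neighborhoods


# Hypothetical toy input: three SNPs on chromosome 1, two on chromosome 2.
chrom = ["1", "1", "1", "2", "2"]
ld_r2 = {
    (0, 1): 0.80,  # strong LD, same chromosome: edge
    (1, 2): 0.05,  # weak LD: no edge
    (3, 4): 0.60,  # strong LD, same chromosome: edge
    (2, 3): 0.90,  # different chromosomes: ignored regardless of value
}

graph = ld_dependency_graph(chrom, ld_r2)
print({i: sorted(graph[i]) for i in graph})
# {0: [1], 1: [0], 2: [], 3: [4], 4: [3]}
```

Because edges never cross chromosomes, the resulting graph splits into per-chromosome pieces that can be processed separately.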

The results are telling. In many GWAS-style settings, the graph-aware IndBH method retains nearly the same power as BH when the dependence graph is sparse, while keeping its FDR guarantee when the LD structure risks inflating the false discovery rate. In particular, for the schizophrenia dataset, the dependency graph reveals blocks and haplotype-like patterns that confirm a modular structure in the genome. The IndBH family can exploit those blocks efficiently, delivering a practical tool for researchers who want robust FDR control without resorting to blanket conservatism.

In the end, the work is not a single magic bullet but a practical framework that asks a few candid questions: How are my tests connected? Can I isolate independent neighborhoods where I can safely apply the standard BH logic? If I mask a neighborhood’s tests, can I still learn something meaningful about the rest? The paper’s authors answer with a toolbox that respects the data’s social graph, preserves rigorous error control, and stays tethered to computational reality. It’s the kind of methodological advance that feels obvious in hindsight and exciting in practice, an antidote to the overzealous conservatism that sometimes shadows large-scale science.

Beyond the numbers, this work carries a human dimension: it acknowledges that data come with structure, that structure can be learned and exploited, and that honest science rewards both caution and cleverness. By embracing the graph—the data’s invisible spine—scientists gain a more faithful read on what counts as a discovery and what might be a mirage produced by correlation alone. As Nguyen and Fithian remind us, the goal is not to demand total independence where it doesn’t exist, but to map the real dependencies and harness them to improve our confidence in discoveries. The approach offers a principled middle path between the unguarded power of BH and the blunt stringency of BY, a path that could reshape how we tackle false discoveries in genomics, neuroimaging, and any field where tests live inside a tangled web of relationships.

In short, the paper lays down a new way to think about p-values: not as a single wandering crowd, but as a constellation where some stars are tightly linked and others glow independently. When we know where to look, we can let the bright ones shine and keep the dim ones from lighting up the night sky too often. It’s a practical, human-centered advance in the science of discovery, one that could quietly accelerate what we learn from data while keeping our trust in those discoveries intact. The study’s authors and their team at UC Berkeley have given researchers a concrete path to implement this approach, supported by a computational framework and a clear eye on real-world datasets. If you’ve ever worried that the sheer scale of modern testing would drown out true signals, this is a breath of clarity—an invitation to map the data’s social graph and listen more carefully to what it’s really saying.